How Much Value is there in a Software Operational Qualification?

Answers to common questions about operational qualification (OQ) software

Software operational qualification (OQ) is considered a mandatory item in a computerized system validation project for a regulated laboratory. But we should ask two questions: Is OQ really essential to a validation project for this type of software? How much value does a software OQ for commercially available software actually provide to a validation project?

Validation of computerized systems requires that a regulated organization working under good laboratory practice (GLP) or good manufacturing practice (GMP) requirements implement or develop a system that follows a predefined life cycle and generates documented evidence of the work performed. The extent of work undertaken on each system depends on the nature of the software used to automate the process and the impact that the records generated by it have (1–3). In essence, application software can be classified into one of three software categories using the approach described in the ISPE's Good Automated Manufacturing Practice (GAMP) Guidelines, version 5 ("GAMP 5") (4):

- commercially available nonconfigured product (category 3)

- commercially available configured product (category 4)

- custom software and modules (category 5).

A justified and documented risk assessment is the key to ensuring that the validation work can be defended in an inspection or audit. Therefore, the work that a laboratory needs to perform will increase with the increasing complexity of the software (5). This discussion focuses on commercially available software, or GAMP software categories 3 and 4 (5,6). The arguments presented here are not intended to be applied for custom software applications or modules (such as macros or custom code add-ons to commercial software), which require a different approach. Nor will I consider the role of a supplier audit in leveraging the testing carried out during product development and release into a laboratory's validation efforts.

Verification Stages of a Life Cycle

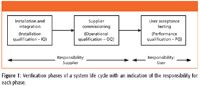

Terminology is all-important to avoid misunderstandings. We are looking at the verification stage of a life cycle in which the purchased system and its components are installed and checked out by the supplier (installation qualification [IQ] and operational qualification [OQ]) and then user acceptance testing is carried out against the requirements in the user requirement specification (URS) to demonstrate that the system is fit for intended use (performance qualification [PQ]).

Figure 1: Verification phases of a system life cycle with an indication of the responsibility for each phase.

In Figure 1, we see three phases of verification, together with the allocation of responsibility for each phase. Each phase is described as follows:

- Installation and integration (IQ) — In essence, this asks the following questions: Do you have all of the items that you ordered? Have they been installed correctly? Have the components been connected together correctly?

- Supplier commissioning (OQ) — Does the system work as the supplier expects? Typically, this is performed on a clean installation of the software, rather than configuring specifically for the OQ.

Not covered in Figure 1 is the user task of configuring the application software. For a category 3 application this may be limited to the setup of the user roles, the associated access privileges, and allocation of these to individual users. Additional steps for configurable applications include turning functions on or off, setting calculations to be used for specific methods, and selecting report templates to be generated. This turns the installed software into the as-configured application for your laboratory, which the users are responsible for.

- User acceptance testing (PQ) — Does the system work as the user expects against the requirements in the URS? This will be performed on the configured application if you are using a category 4 application.

Following the successful completion of the user acceptance tests and writing of the validation summary report, the system is released for operational use. Please note that the above descriptions of IQ, OQ, and PQ apply only to software and are not the same as outlined in the United States Pharmacopeia (USP) Chapter <1058> on analytical instrument qualification (AIQ) (7).

In this column, I want to focus on the supplier commissioning or OQ and ask the first of the two questions I raised at the start.

Is OQ Essential to a Validation Project?

Before I answer this question, let me put the question in the heading into the context of category 3 and category 4 commercially available software. When looking at the validation of such applications we need to consider two system life cycles that will occur during the project. The first is the development of the new version of the product by the supplier, and the second is that of the user laboratory carried out to validate the software for the intended use. This is discussed in the GAMP 5 section on life-cycle models (4).

The main deliverable, from the perspective of the laboratory, is the CD or DVD with the application program and associated goodies on it for installation to a user's computer. This optical disk is the direct link between the two life cycles. As shown in Figure 2, it is the medium on which the released application is transferred to your laboratory computer system. It is one of the inputs to the installation and commissioning phase of the laboratory validation life cycle where the IQ and OQ will be performed.

Figure 2: Integration of the system life cycles of the supplier and laboratory.

Looked at from a software perspective, what are some of the problems that could occur in the transfer of the application program from the supplier's development process to the optical disk and then installation onto a laboratory's computer system? These include

- missing or incomplete transfer of the whole program to the optical disk

- optical disk is corrupted

- installation on the laboratory computer system fails

- incomplete installation on the laboratory computer system.

Typically, most of these problems would be picked up by the supplier's service engineer during the installation of the software and integration with the rest of the system components, especially if there were a utility to check that the correct software executables had been installed in the correct directories by the installation program. Hence, the importance of verifying that the installation is correct (that is, executing an installation qualification).
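As a minimal sketch of the kind of checking utility mentioned above, the following Python compares SHA-256 checksums of installed files against a manifest of expected values. The manifest format (relative path mapped to expected digest) and the names `sha256_of` and `verify_installation` are assumptions made for illustration; they are not taken from any supplier's tool, and a real IQ utility may work quite differently.

```python
import hashlib
from pathlib import Path
from typing import Dict, List


def sha256_of(path: Path) -> str:
    """Return the SHA-256 hex digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_installation(install_root: Path, manifest: Dict[str, str]) -> Dict[str, List[str]]:
    """Compare installed files with a manifest of relative path -> expected SHA-256.

    The manifest layout is hypothetical. Returns the relative paths that are
    missing and those whose content differs from the expected digest.
    """
    missing: List[str] = []
    mismatched: List[str] = []
    for relative_path, expected in sorted(manifest.items()):
        target = install_root / relative_path
        if not target.is_file():
            missing.append(relative_path)      # incomplete installation
        elif sha256_of(target) != expected.lower():
            mismatched.append(relative_path)   # corrupted or altered file
    return {"missing": missing, "mismatched": mismatched}
```

An empty result in both lists would support the IQ conclusion that the installed files match the released files. The listing of any missing or mismatched paths is the kind of supporting evidence that can be attached to the IQ record.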

Now we come to the second stage of the verification process: supplier commissioning or the operational qualification. This is necessary because successful execution of this phase of the validation is equivalent to saying that the system works from the supplier's perspective. However, there are varying degrees of OQ offered from suppliers, ranging from the sublime to the ridiculous (and expensive).

The aim of the software OQ is to demonstrate that the system works from the supplier's perspective, which will allow a laboratory to configure the application and then carry out user acceptance testing, or PQ. In an earlier installment (8) I discussed the quality of supplier instrument IQ and OQ documentation; the same principle applies to software OQs. Quite simply, you as the end user of the system are responsible for the work carried out by the supplier; this includes the quality of the documentation, the quality of the tests, and the quality of the work performed by the supplier's engineer. This is not my opinion; it is the law. In Europe, this is now very explicit. The new version of Annex 11 to the European Union's GMP regulations that was issued in 2011 states that suppliers have to be assessed and that there need to be contracts in place for any work carried out (9,10).

In the US, 21 CFR 211.160(a) (11) requires that

The establishment of any specifications, standards, sampling plans, test procedures, or other laboratory control mechanisms required by this subpart, including any change in such specification, standards, sampling plans, test procedures, or other laboratory control mechanisms, shall be drafted by the appropriate organizational unit and reviewed and approved by the quality control unit.

Therefore, any supplier IQ and OQ documents need to be approved by the quality unit before execution, which does not always occur. Post execution, the results must also be reviewed and approved by internal quality unit personnel. Failure to comply with this regulation can result in a starring appearance on the Food and Drug Administration's (FDA) wall of shame or warning letters section of their web site, as Spolana found out (12): "Furthermore, calibration data and results provided by an outside contractor were not checked, reviewed and approved by a responsible Q.C. or Q.A. official."

Therefore, never accept IQ or OQ documentation from a supplier without evaluating and approving it before execution. Check not only coverage of testing, but also that test results are quantified (that is, have supporting evidence) rather than solely relying on qualitative terms (such as pass or fail). Quantified results allow for subsequent review and independent evaluation of the test results. Furthermore, ensure personnel involved with IQ and OQ work from the vendor are trained appropriately by checking documented evidence of such training; for example, check that training certificates to execute the OQ are current before the work is carried out.
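To illustrate the difference between a quantified result and a bare pass or fail, here is a small Python sketch of a test record that stores the observed value, the acceptance limits, and a pointer to the supporting evidence, and derives the pass or fail outcome from them. The class name `QuantifiedResult`, its fields, and the example identifiers are hypothetical and are not taken from any supplier protocol.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QuantifiedResult:
    """One executed test step with enough detail for independent review."""
    test_id: str
    observed: float      # value actually recorded during execution
    lower_limit: float   # acceptance criterion, lower bound (inclusive)
    upper_limit: float   # acceptance criterion, upper bound (inclusive)
    evidence_ref: str    # e.g. identifier of a printout or screen capture

    @property
    def passed(self) -> bool:
        # Pass or fail is derived from the recorded data, not entered by hand.
        return self.lower_limit <= self.observed <= self.upper_limit


result = QuantifiedResult("OQ-07", 99.8, 98.0, 102.0, "PRINTOUT-0042")
print(result.test_id, result.observed, "pass" if result.passed else "fail")
```

Because the observed value and the limits are both retained, a reviewer can re-evaluate the outcome later instead of having to trust a ticked box.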

An OQ Case Study

During an audit of a supplier of a configurable software system, it was discovered that the software OQ package was the supplier's complete internal test suite for the release of the product. This is offered for sale at $N (where N is a very large number) or free if the supplier executes the protocol, which would cost $N × 3, plus expenses (naturally!). During execution of the OQ, the application software is configured with security settings and systems according to the vendor's rather than the user's requirements. Following completion of the OQ, the laboratory would have to change the configuration of user types and access privileges, linked equipment, and other software configuration settings to those actually required before starting the user acceptance testing.

So let me pose the question: What is the value of this OQ that is repeating the execution of the supplier's complete internal test suite?

Zero? My thoughts exactly. However, let me raise another question: How many laboratories would purchase and execute this protocol just because it is a general expectation that they do so?

Let us look in more detail at Figure 2. Typically, the supplier will take the released software and burn it onto a master optical disk that is used to make the disks sold commercially. We have one copy stage that will be checked to ensure the copying is correct. The application is purchased by a laboratory, which, as part of the purchase, has paid for an IQ to be performed by the supplier. When the IQ is completed successfully, then the software has been correctly transferred from the commercial disk to the laboratory's computer system and is the same as that released and tested by the supplier. Therefore, why does the laboratory need to repeat the whole internal test suite on its own site? Where is the value to the validation project?

Do You Believe in Risk Management?

The problem with the pharmaceutical industry is that it is ultraconservative. Even when laboratories want to introduce new ideas and approaches, the conservative nature of quality assurance tends to hold them back. However, as resources are squeezed by the economic situation, industry must become leaner and embrace effective risk management to place resources where they can be used most effectively. Moreover, clause 1 of the EU's Annex 11 (9) requires that risk management principles be applied throughout the life cycle. In light of this, let's look at doing just that for an OQ.

Let's look at the OQ case study above. We have a situation in which there is a repeat of the internal test suite on the customer's site. Is this justified? Not in my opinion. What benefit would be gained from executing the OQ? Not very much, because the configuration tested is that of the supplier's own devising and not the customer's. Therefore, why waste time and money on an OQ that provides no benefit to the overall validation? What will you achieve by this? You would generate a pile of very expensive paper with little benefit other than ticking a box in the validation project. It is at this point that you realize that GMP actually stands for "Great Mountains of Paper."

The risk management approach taken with the project described above was to document in the validation plan of the system that a separate OQ was not going to be performed because of the reasons outlined above. In fact, the OQ would be combined with the PQ or user acceptance testing that was going to be undertaken. Can this be justified? Yes, but don't take my word for it. Have a look at Annex 15 (13) of the EU GMP regulations, entitled Qualification and Validation. Clause 18 states "Although PQ is described as a separate activity, it may in some cases be appropriate to perform it in conjunction with OQ."

However, you will need to document and justify the approach taken as noted in Annex 11 clause 4.1 (9): "The validation documentation and reports should cover the relevant steps of the life cycle. Manufacturers should be able to justify their standards, protocols, acceptance criteria, procedures and records based on their risk assessment."

Presenting a document to read will be far easier than squirming in front of an auditor or inspector, won't it?

One Size Fits All?

Although the case study discussed above is an extreme case, can the approach to dispense with an OQ be taken for all category 3 and category 4 software? Well, to quote that classic response of consultants, "It depends . . . ."

Let us start with category 3 software, which is nonconfigurable commercially available software; the business process automated by the application cannot be altered. In the life-cycle model proposed by GAMP 5 (4,5) for this category of software there are not separate OQ and PQ phases but just a single task of verification against the predefined user requirements. Does this mean we don't need a supplier OQ? Yes and no. You may think that this is a strange reply, but let's think it through.

If a supplier OQ exists, does it test your user requirements in sufficient detail? If it does then you don't need to conduct your own user acceptance testing (PQ), but you will need to document the rationale in the validation plan. This comes back to the Annex 15 requirement quoted above that it is permissible to combine the OQ and PQ phases (13). However, can a supplier protocol cover all of your requirements such as user roles and the corresponding access privileges and backup and recovery? It may be possible that the protocol, if written well, can cover these aspects and you will not need to undertake any testing yourself as the supplier OQ protocol will meet your needs. This will mean a thorough review of the testing against your requirements to see if the OQ is worth the cost of purchase. If the OQ meets the majority of your requirements, then you can perform a smaller user acceptance test on those requirements not tested in the supplier OQ. The goal here is to leverage as much of the supplier's offering as possible, providing that it meets your requirements. If the OQ does not meet your requirements, then you will need to write your own user acceptance tests and forget the supplier approach.
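The review of a supplier OQ against your requirements can be sketched as a simple traceability check. The Python below is a minimal illustration, assuming that each supplier OQ test has already been mapped by hand to the URS requirement identifiers it verifies; the function name `split_requirements` and all identifiers in the example are hypothetical.

```python
def split_requirements(urs_ids, oq_coverage):
    """Partition URS requirement IDs by whether a supplier OQ test covers them.

    urs_ids: iterable of requirement identifiers from the URS.
    oq_coverage: mapping of OQ test ID -> set of requirement IDs that test verifies
                 (an assumed, manually prepared traceability mapping).
    Returns (covered, remaining, fraction_covered).
    """
    covered_by_oq = set()
    for requirement_ids in oq_coverage.values():
        covered_by_oq.update(requirement_ids)

    urs = list(dict.fromkeys(urs_ids))  # keep URS order, drop duplicates
    covered = [r for r in urs if r in covered_by_oq]
    remaining = [r for r in urs if r not in covered_by_oq]
    fraction = len(covered) / len(urs) if urs else 0.0
    return covered, remaining, fraction


urs = ["URS-001", "URS-002", "URS-003", "URS-004"]
oq = {
    "OQ-01": {"URS-001"},
    "OQ-02": {"URS-002", "URS-003"},
    "OQ-03": {"VENDOR-9"},  # tests a supplier feature that is not in the URS
}
covered, remaining, fraction = split_requirements(urs, oq)
print(remaining, fraction)  # ['URS-004'] 0.75
```

The `remaining` list is the candidate scope for the smaller user acceptance test, and the covered fraction is one input when deciding whether the OQ is worth its purchase price. The sketch says nothing about whether each OQ test covers a requirement in sufficient detail; that judgment still has to be made during the review.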

OQ for Configurable Software?

Let's return now to consider our approach to category 4 software, which has tools provided by the supplier to change the way the application automates a business process. There is a wide range of mechanisms to configure software in this category, ranging from the simplest approach in which a user selects an option from a fixed selection (for example, the number of decimal places for reporting a result, whether a software function is turned on or off, or a value entered into a field such as password length or expiry) to the most complex approach in which a supplier language is provided to configure the application. In the latter situation, this is akin to writing custom code and it should be treated as category 5 software (4).

When considering the value of a supplier OQ, consider how extensively you will be configuring the application. This requires knowledge of the application, but is crucial when evaluating if there is value in purchasing a supplier OQ for your application.

My general principle is that the farther away from the installed software you intend to configure the application, the lower the value of an extensive supplier OQ becomes. In these cases, you should look for a simple supplier OQ that provides confidence that the system operates in the default configuration, after which you will spend time configuring the system to meet your business requirements. In my opinion, extensive protocols that do not provide value to you by testing your user requirements should be avoided.

Summary

A supplier OQ can offer a regulated laboratory value if the tests carried out match most, if not all, of the user requirements, meaning that the user acceptance testing (PQ) can be reduced. If the application is extensively configured then the value of an extensive supplier OQ falls because typically the OQ will be run on a default configuration or one of the supplier's devising which will not usually be the same as the laboratory's. In this case, use a risk assessment to document that the supplier OQ is not useful and integrate an OQ with the user acceptance testing (PQ) to complete the verification phase of the system validation.

References

(1) GAMP Good Practice Guide: A Risk-Based Approach to Compliant Laboratory Computerized Systems, Second Edition (International Society for Pharmaceutical Engineering, Tampa, Florida, 2012).

(2) R.D. McDowall, Quality Assurance Journal 9, 196–227 (2005).

(3) R.D. McDowall, Quality Assurance Journal 12, 64–78 (2009).

(4) Good Automated Manufacturing Practice (GAMP) Guidelines, version 5 (International Society for Pharmaceutical Engineering, Tampa, Florida, 2008).

(5) R.D. McDowall, Spectroscopy 25(4), 22–31 (2010).

(6) R.D. McDowall, Spectroscopy 24(5), 22–31 (2009).

(7) United States Pharmacopeia General Chapter <1058> "Analytical Instrument Qualification" (United States Pharmacopeial Convention, Rockville, Maryland).

(8) R.D. McDowall, Spectroscopy 25(9), 22–31 (2010).

(9) European Commission Health and Consumers Directorate-General, EudraLex: The Rules Governing Medicinal Products in the European Union. Volume 4, Good Manufacturing Practice Medicinal Products for Human and Veterinary Use. Annex 11: Computerised Systems (Brussels, Belgium, 2011).

(10) R.D. McDowall, Spectroscopy 26(4), 24–33 (2011).

(11) Food and Drug Administration, Current Good Manufacturing Practice regulations for finished pharmaceutical products (21 CFR 211.160[a]).

(12) Spolana FDA warning letter, October 2000.

(13) European Commission Health and Consumers Directorate-General, EudraLex: The Rules Governing Medicinal Products in the European Union. Volume 4, Good Manufacturing Practice, Medicinal Products for Human and Veterinary Use. Annex 15: Qualification and Validation (Brussels, Belgium, 2001).

R.D. McDowall is the principal of McDowall Consulting and the director of R.D. McDowall Limited, and the editor of the "Questions of Quality" column for LCGC Europe, Spectroscopy's sister magazine. Direct correspondence to: spectroscopyedit@advanstar.com
