Deep Learning Classification of Grassland Desertification in China via Low-Altitude UAV Hyperspectral Remote Sensing

Efficiency and accuracy are major bottlenecks in conducting ecological surveys and acquiring statistical data concerning grassland desertification. Traditional manual ground-based surveys are inefficient, and aerospace-based remote sensing surveys are limited by low spatial resolution and accuracy. In this study, we propose a low-altitude unmanned aerial vehicle (UAV) hyperspectral visible near-infrared (vis-NIR) remote sensing hardware platform, which combines efficiency and accuracy for high-precision remote sensing-based ecological surveys and statistical data collection on grassland desertification. We use the classical deep learning network models VGG and ResNet, together with their 3D convolutional kernel variants, 3D-VGG and 3D-ResNet, to classify the features in the collected data. The results show that the two classical models yield good results for vegetation and bare soil in desertified grasslands, and the 3D models yield superior classification results for small sample features. Our results can serve as benchmarks for hardware integration and data analysis in remote sensing-based grassland desertification research and lay the foundation for finer classifications and more accurate statistics of features.

Desertification is a major global environmental problem directly affecting approximately a quarter of the global population, with an estimated total of 10 million hectares of arable land being lost to desertification annually (1). According to the results of the fifth national monitoring of desertification and sandy land conducted in 2019, China’s desertified land area had reached 261 million hectares, accounting for 27.1% of the country’s total land area. Of that 261 million, 61 million hectares, or 23.3% of the desertified land area, lies in Inner Mongolia, which has the highest proportion in the country (2,3). This region serves as an important ecological security barrier and a major base for traditional livestock farming in the northern part of China. Thus, desertification in Inner Mongolia poses a serious threat to the local ecological environment, economic development, and safety of life (4,5). Grassland ecosystems have a simple community structure and are the most desertification-prone landscape type, with nearly 98.5% of desertified land in Inner Mongolia resulting from retrograde succession in grassland ecosystems (6).

Desertification of grasslands varies based on the structure of the vegetation community, the reduction in the number of vegetation species, soil and wind erosion, salinization, and the bare land area. The vegetation community and soil are important monitoring indicators of desertification, and high-precision monitoring and statistics of both factors are crucial to grassland desertification research. Moreover, these parameters serve as important bases for hierarchical governance of grassland desertification (7,8). Traditional manual field surveys require significant human and material resources, cover limited areas, and are inefficient and time-consuming. Furthermore, monitoring accuracy suffers because real-time and large-area monitoring is not possible in manual surveys (9). With the development of aerospace remote sensing technologies over the past 60 years, the use of satellites, aircraft, and other spacecraft carrying optical sensors has yielded remote sensing data with wide spectral bands and large spatial scales. These data are widely used in the fields of hydrology, meteorology, forestry, and natural disaster monitoring (10–13). In agriculture, remote sensing data are mainly useful for growth monitoring and yield estimation (19,20) of large fields of crops with relatively regular planting blocks, such as wheat (14), maize (15), rice (16), cotton (17), and sorghum (18). However, aerospace remote sensing is limited by insufficient spatial resolution, and the characteristics of grassland vegetation, such as small plants and irregularly shaped patches, affect the results. Thus, low-altitude remote sensing platforms with higher spatial resolution and scale are desired for studies on grassland desertification.

Unmanned aerial vehicles (UAVs) have attracted significant attention as aerial remote sensing platforms owing to advantages such as speed, mobility, and cost-effectiveness. Moreover, advanced sensor technologies, such as lightweight high-definition digital cameras (21,22) and spectrometers (23,24), carried by low-altitude UAVs enhance the spatial scale and resolution of remote sensing data, enabling better performance of models used in shrub grassland recognition (25), monitoring of plant diseases and insect pests of fruit trees (26), forest species identification (27), and other fields. A hyperspectral imager, which combines a high-definition digital camera with a spectrometer, can provide information from richer regions of the spectrum, higher spectral resolution, and enhanced classification of smaller features. UAV-based hyperspectral remote sensing has been used previously to monitor diseases and pests of fruit trees, in livestock monitoring (28), for soybean (29), and for leaf area index inversion of wheat (30), yielding improved results. However, because hyperspectral imagers are heavy and imaging quality is positively correlated with spectrometer weight, they place greater demands on the load capacity and stability of the UAV.

In this study, we combined a hyperspectral imager of high spectral resolution with a low-altitude drone to realize a hyperspectral remote sensing system with high spatial resolution, flexibility, and efficiency for monitoring grassland desertification. This system provides a hardware foundation for real-time monitoring and high-precision statistics. We acquired hyperspectral remote sensing images of desertified steppes in the experimental area using the UAV hyperspectral remote sensing system and performed data preprocessing and band selection. Two classical deep learning models and their variants with 3D convolution kernels were used to classify the vegetation, soil, and other fine features in the hyperspectral remote sensing images. Thus, we realized a complete process from data acquisition to data analysis of steppe desertification using the hyperspectral remote sensing system, which is a promising hardware and software platform for high-precision statistics for use in the inversion of steppe desertification. Our results will aid in improving remote sensing surveys and classification concerning grassland desertification.

Materials and Methods

Construction of the UAV Hyperspectral Remote Sensing System

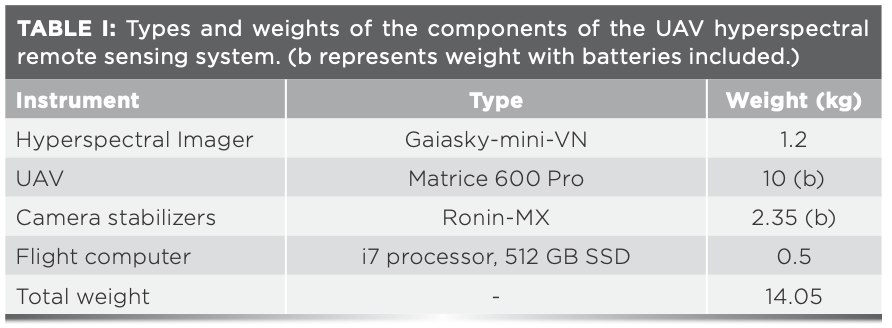

The UAV hyperspectral remote sensing system comprises a six-rotor UAV, a hyperspectral imager, a camera stabilizer, a flight computer, and other instruments (Figure 1).

FIGURE 1: Image of UAV hyperspectral remote sensing system in flight.

We used the Gaiasky-mini-VN hyperspectrometer developed by Dualix Spectral Imaging Co., Ltd. This spectrometer is capable of acquiring spectra in the 400–1000 nm range with a spectral resolution of 2.4 nm, 256 bands, and 1392 x 1040 pixels in full frame. Images are acquired by built-in scanning while the UAV hovers. The imager uses a Sony ICX285 lens with a focal length of 17 mm, has a 33° field of view, and weighs 1.2 kg.

The six-rotor UAV is a Matrice 600 Pro from SZ DJI Technology Co., Ltd., equipped with a professional-grade A3 Pro flight control system, three sets of IMUs, and a high-precision D-RTK GNSS module. It has a maximum diameter of 1668 mm, an approved maximum take-off weight of 15.5 kg, and a full flight endurance of 18 min. To stabilize the high-precision spectrometer, we used the Ronin-mx camera stabilizer, which is rated at a stabilizing angle of 0.02° and a maximum load weight of 4.5 kg. The onboard computer was equipped with an I7-7567U CPU and a 512 GB solid state disk. The total weight of the UAV hyperspectral remote sensing system was 14.05 kg, and the weight of each part is shown in Table I.

Overview of the Study Area

The experimental area, Gegen Tara Grassland (41°75′36″N, 111°86′48″E), is located in Siziwang Banner in the central part of the Inner Mongolia Autonomous Region. It is a combined agricultural (18.3%) and pastoral area (81.7%), with animal husbandry as the dominant industry. The altitude in this area varies between 1200 and 2200 m, rivers are sparse, and the annual precipitation was 260 mm in 2019. Water resources are scarce (31). Summers are hot with abundant sunshine, and the remaining three seasons are windy, with an average annual wind speed of 4.6 m/s. The soil type is light chestnut calcium, which shows high wind erosion, high sand content, and low organic matter content (32). The vegetation is sparse and lacks variation among species, with most species showing long and narrow leaves and well-developed root systems. The average plant height during the fruiting period is less than 8 cm. The constructive species is Stipa breviflora, the dominant species is Artemisia frigida, and the main companion species are Salsola collina, Neopallasia pectinata, and Convolvulus ammannii (33).

Considering the climatic characteristics of the grassland and the forage growing season in 2019, we collected data between the fruiting and yellowing periods (August 24–26, 2019), between 10:00 and 14:00, when winds were calm to light (wind speed 0–3.4 m/s) and cloud cover was absent or below 2%.

Data Collection

In this study, the UAV hovering method was used to collect hyperspectral remote sensing images of objects in the experimental area. The net weight of the UAV hyperspectral remote sensing system was 14.05 kg, the safe endurance time was 20 min, the spectral range was 400–1000 nm, the frame size was 1392 x 1040 pixels, the number of spectral channels was 256, the flight height of the UAV was 30 m, and the spatial resolution was 2.6 cm/pixel. To ensure the quality of acquisition, two hyperspectral remote sensing images were acquired at each hovering point, with a single image acquisition time of 7 s, including the flight time between the hovering points. With 50% overlap, remote sensing images covering 1.36 hm² could be collected in one sortie. As the amount of light varied with cloud movement during the acquisition time, a standard reference whiteboard calibration was performed before and after each takeoff.

Data Preprocessing

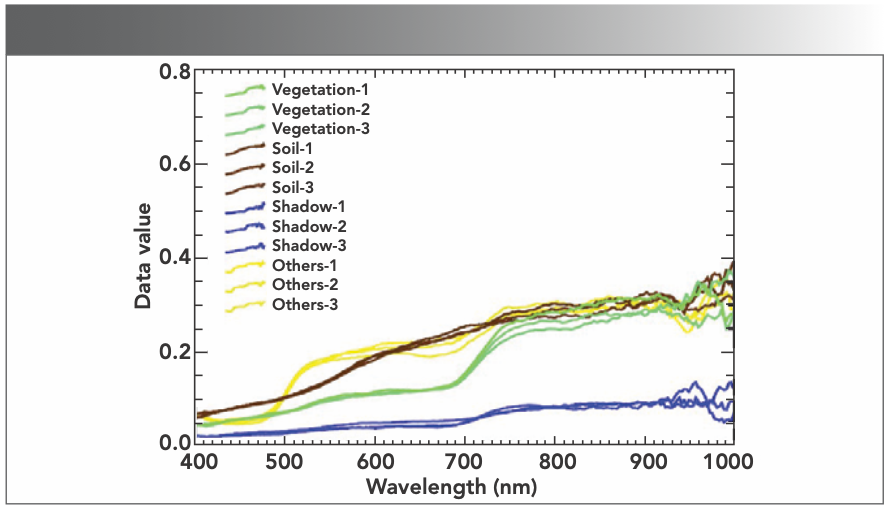

Poor remote sensing images (caused by light variations and wind gusts) were removed by manual inspection, and the set of remote sensing images with the best image quality was selected. Professional spectral software (Spectra view) was then used to correct the reflectance and identify the features of interest. The spectral profiles of the four ground objects (vegetation, soil, shadows, and others) are shown in Figure 2.

FIGURE 2: Measured vis-NIR spectra of ground objects.

As can be seen in Figure 2, the spectral reflectance curves of the four ground objects are significantly different. Because of the high chlorophyll content of healthy vegetation, the spectral reflectance curve of vegetation (Figure 2, Vegetation-1, 2, and 3) has a distinct red light absorption band at wavelengths of 660–680 nm, with a significant increase in reflectance at wavelengths of 680–760 nm. The spectral reflectance curves of soil (Figure 2, Soil-1, 2, and 3) show a clear upward trend with a large slope in the visible band and a smaller increase with a lower slope in the NIR band. The spectral reflectance curves of shadow (Figure 2, Shadow-1, 2, and 3) reflect strong absorption of visible light, with low reflectance at wavelengths below 760 nm and a slight increase in reflectance in the NIR band at 760–1000 nm. The spectral reflectance curves of others (Figure 2, Others-1, 2, and 3) are higher than those of the three ground objects mentioned above at wavelengths of 500–580 nm.
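The whiteboard-based reflectance correction mentioned in this section can be illustrated with a minimal sketch. It uses the common flat-field formula (raw signal divided by the white-reference signal, after subtracting an optional dark signal); this is not necessarily what the spectral software does internally. The function name, the optional dark reference, and the `white_reflectance` parameter are assumptions introduced for illustration and do not come from the study.

```python
import numpy as np

def to_reflectance(raw, white, dark=None, white_reflectance=1.0):
    """Convert raw sensor values to reflectance using a white reference panel.

    raw:   (rows, samples, bands) raw hyperspectral cube
    white: (bands,) mean raw signal measured over the reference whiteboard
    dark:  (bands,) optional dark-current signal (assumption, not in the study)
    """
    raw = np.asarray(raw, dtype=np.float64)
    white = np.asarray(white, dtype=np.float64)
    dark = np.zeros_like(white) if dark is None else np.asarray(dark, dtype=np.float64)
    denom = white - dark
    # Mark bands with a zero denominator as NaN instead of dividing by zero.
    denom = np.where(denom == 0, np.nan, denom)
    return white_reflectance * (raw - dark) / denom

# Tiny synthetic example: 2 x 2 pixels, 3 bands.
raw_cube = np.full((2, 2, 3), 50.0)
white_ref = np.array([100.0, 200.0, 50.0])
reflectance = to_reflectance(raw_cube, white_ref)
```

Because the whiteboard was measured before and after each takeoff, one option would be to average the two white references before calling such a function; whether the authors did so is not stated.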

Results

Band Selection

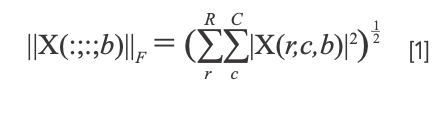

The hyperspectral images acquired in this experiment have 256 bands, with a spectral resolution of 2.4 nm and a spatial resolution of 2.6 cm/pixel. A radiometrically corrected image occupies about 1.2 GB of storage space and includes rich spectral information of features, thus enabling highly accurate object classification. As can be seen in Figure 2, vegetation, soil, and others differ little and are highly similar in the 740–1000 nm range. In addition, the region of 925–1000 nm is heavily influenced by noise, resulting in poor data separability. We used the squared Frobenius norm (34) (F-norm²) to reduce the dimensionality of the data, as shown in equation 1:

where X is a tensor, r represents the number of rows of the tensor, c represents the number of columns of the tensor, and b represents the dimension of the tensor, respectively, corresponding to samples, lines, and bands in the hyperspectral image. The F-norm² value of a band image represents the energy of that band. If the value is too small, the band contains too little information. If the value is too large, the band suffers from serious noise interference. After dimensionality reduction, 180 bands are retained, and the storage space of a single image is reduced to about 0.83 GB, which effectively improves the efficiency of data post-processing.
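The band selection idea described above can be sketched as follows: compute the squared Frobenius norm (sum of squared values) of each band image and keep only bands whose energy is neither too small nor too large. The study does not state the thresholds it used, so the quantile cutoffs `low_q` and `high_q`, like the function names, are hypothetical choices made for illustration.

```python
import numpy as np

def band_energy(cube):
    """Squared Frobenius norm of each band image in a (rows, samples, bands) cube."""
    cube = np.asarray(cube, dtype=np.float64)
    return np.sum(cube ** 2, axis=(0, 1))

def select_bands(cube, low_q=0.15, high_q=0.85):
    """Keep bands whose energy lies between two quantiles.

    The quantile thresholds are hypothetical; the study does not report
    the cutoffs used to go from 256 to 180 bands.
    """
    energy = band_energy(cube)
    lo, hi = np.quantile(energy, [low_q, high_q])
    keep = np.flatnonzero((energy >= lo) & (energy <= hi))
    return cube[:, :, keep], keep

# Synthetic cube standing in for a real 256-band image.
rng = np.random.default_rng(0)
cube = rng.random((20, 20, 256))
reduced, kept = select_bands(cube)
print(reduced.shape[2], "of", cube.shape[2], "bands kept")
```

A fixed pair of absolute energy thresholds would work equally well in place of quantiles; quantiles are used here only because they need no knowledge of the sensor's value range.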

Classification of Features

With the rapid development of computer hardware, computer vision and deep learning have advanced rapidly. Computer vision has been applied to object detection, object localization, object detection from video, scene classification, and other fields. On some data sets, the error rate is now lower than that of the human eye, which is remarkable. Deep learning classification methods have also been gradually introduced to remote sensing image classification. Early models classified spectral features in remote sensing data with few dimensions, whereas sensor dimensions have increased significantly in recent years. Classification models that combine spectral and spatial information, inspired by object detection in video sequences, have been gradually introduced in the analysis of high-dimensional remote sensing data (35).

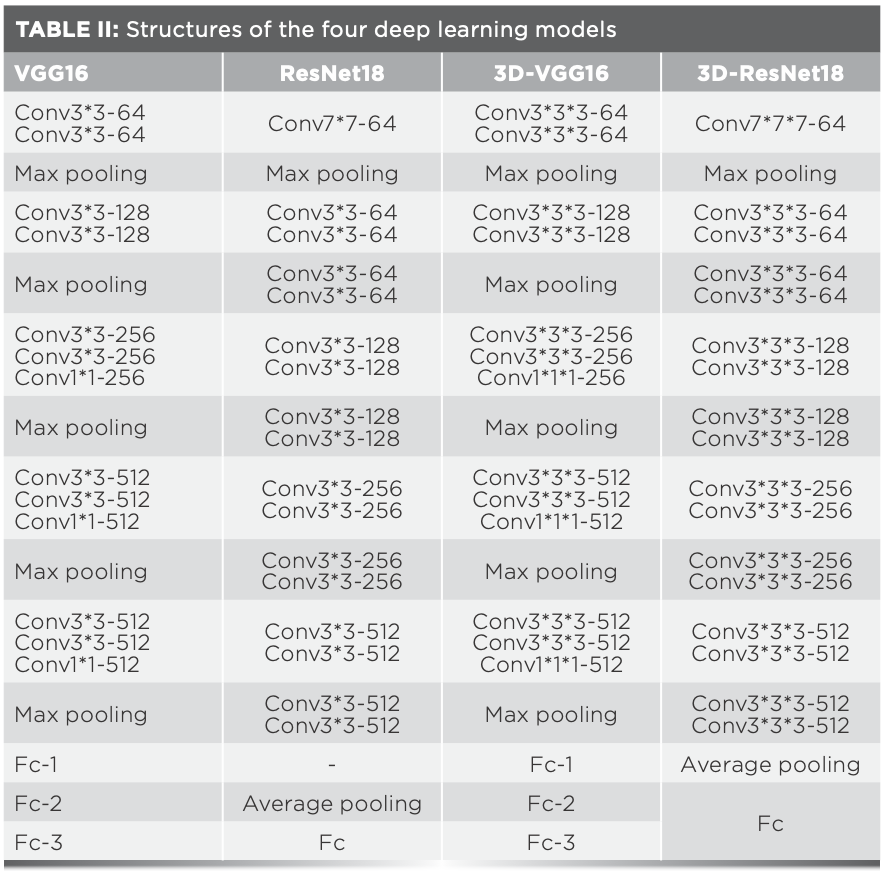

In this study, the F-norm² is used to reduce noise interference and the dimensionality of the hyperspectral data. On this basis, we use two typical deep learning models, VGG16 (36) and ResNet18 (37), and replace their 2D convolution kernels with 3D convolution kernels to obtain 3D-VGG16 and 3D-ResNet18. All four models process the 180-band hyperspectral data retained after the F-norm² dimensionality reduction. The structures of the deep learning models are shown in Table II.

VGG16 consists of 13 convolutional layers (2D convolutional kernels are denoted by Conv1*1-XXX, Conv3*3-XXX; XXX is the number of convolutional kernels), three fully connected layers (denoted by Fc-X), and five pooling layers (denoted by Max pooling). ResNet18 consists of 17 convolutional layers (denoted by conv7*7-XXX, conv3*3-XXX; XXX being the number of convolutional kernels), one fully connected layer (denoted by Fc), and two pooling layers (denoted by Max pooling, Average pooling). The 3D-VGG16 and 3D-ResNet18 models have the same depth as the above two models, but the 2D convolutional kernels in their convolutional layers are replaced by 3D convolutional kernels (38), which are denoted by Conv1*1*1-XXX, Conv3*3*3-XXX, and Conv7*7*7-XXX in Table II.
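To make the difference between 2D and 3D convolution kernels concrete, the following NumPy sketch applies one kernel of each kind to a small hyperspectral cube. It is an illustration only, not the authors' network code: it uses stride 1, no padding, and a single kernel, and, as is conventional in deep learning, it computes a cross-correlation (the kernel is not flipped). The function names are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def conv2d_all_bands(cube, kernel):
    """2D-style convolution on a (rows, samples, bands) cube.

    The (k, k, bands) kernel spans every band at once, so the spectral
    axis collapses into a single output value per spatial position.
    """
    k = kernel.shape[0]
    # windows has shape (rows-k+1, samples-k+1, bands, k, k)
    windows = sliding_window_view(cube, (k, k), axis=(0, 1))
    return np.einsum("rcbij,ijb->rc", windows, kernel)

def conv3d(cube, kernel):
    """3D convolution: the (k, k, k) kernel also slides along the spectral
    axis, so the output keeps a (shortened) spectral dimension."""
    # windows has shape (rows-k+1, samples-k+1, bands-k+1, k, k, k)
    windows = sliding_window_view(cube, kernel.shape)
    return np.einsum("rcbijk,ijk->rcb", windows, kernel)

rng = np.random.default_rng(0)
cube = rng.random((8, 8, 10))                    # small stand-in for a 180-band crop
out2d = conv2d_all_bands(cube, rng.random((3, 3, 10)))
out3d = conv3d(cube, rng.random((3, 3, 3)))
print(out2d.shape, out3d.shape)                  # spatial-only map vs. spatial-spectral volume
```

The 2D output has no spectral axis left, whereas the 3D output retains local spectral structure that later layers can still exploit.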

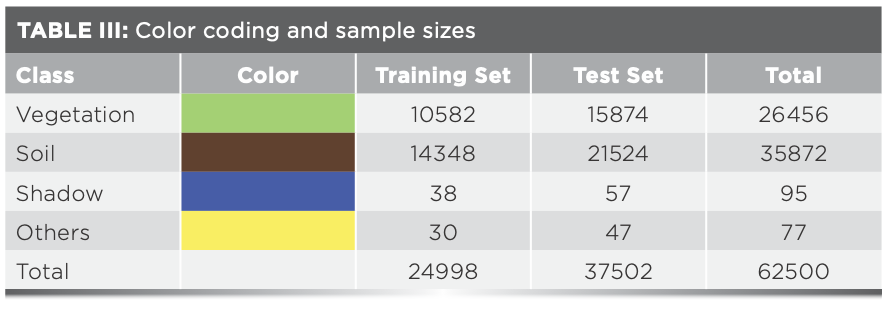

To increase the processing speed, each acquired image is randomly cropped to 250 rows x 250 samples x 180 bands, for a total of 62,500 pixels. For feature identification, 40% of the labeled samples of vegetation, soil, and the other feature classes were randomly selected as training data, and the rest were used as test data. These quantities are summarized in Table III.
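The cropping and sample-splitting procedure can be sketched as below. This is a minimal illustration under stated assumptions: label 0 is taken to mean "unlabeled", the split is a plain random split over all labeled pixels (the study does not say whether it was stratified by class), and all function names are hypothetical.

```python
import numpy as np

def random_crop(cube, size=250, rng=None):
    """Randomly crop a (rows, samples, bands) cube to size x size pixels, keeping all bands."""
    rng = np.random.default_rng() if rng is None else rng
    rows, samples, _ = cube.shape
    r0 = rng.integers(0, rows - size + 1)
    c0 = rng.integers(0, samples - size + 1)
    return cube[r0:r0 + size, c0:c0 + size, :]

def split_labeled_pixels(labels, train_fraction=0.4, rng=None):
    """Return flat indices of training and test pixels.

    labels: (rows, samples) integer map; 0 means unlabeled (assumption).
    """
    rng = np.random.default_rng() if rng is None else rng
    labeled = np.flatnonzero(labels.ravel() > 0)
    shuffled = rng.permutation(labeled)
    n_train = int(round(train_fraction * shuffled.size))
    return shuffled[:n_train], shuffled[n_train:]

rng = np.random.default_rng(0)
image = np.zeros((300, 320, 4))                 # few bands to keep the example light
crop = random_crop(image, size=250, rng=rng)
labels = rng.integers(0, 5, size=(250, 250))    # synthetic label map with classes 1-4
train_idx, test_idx = split_labeled_pixels(labels, 0.4, rng)
```

For strongly imbalanced classes such as shadow and others, a per-class (stratified) split would guarantee that every class appears in the training set; that variant is a design option, not something the study reports.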

Classification Results

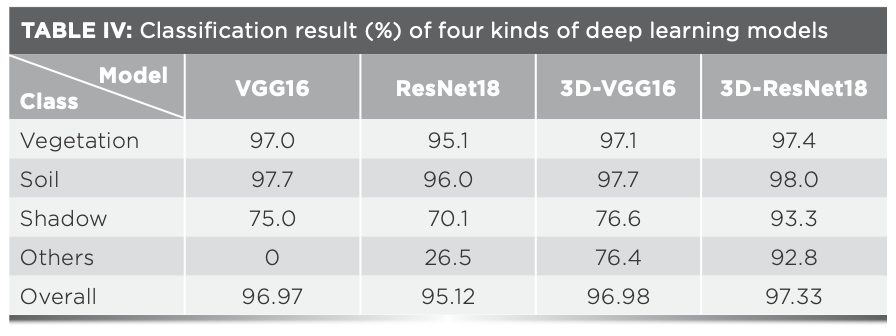

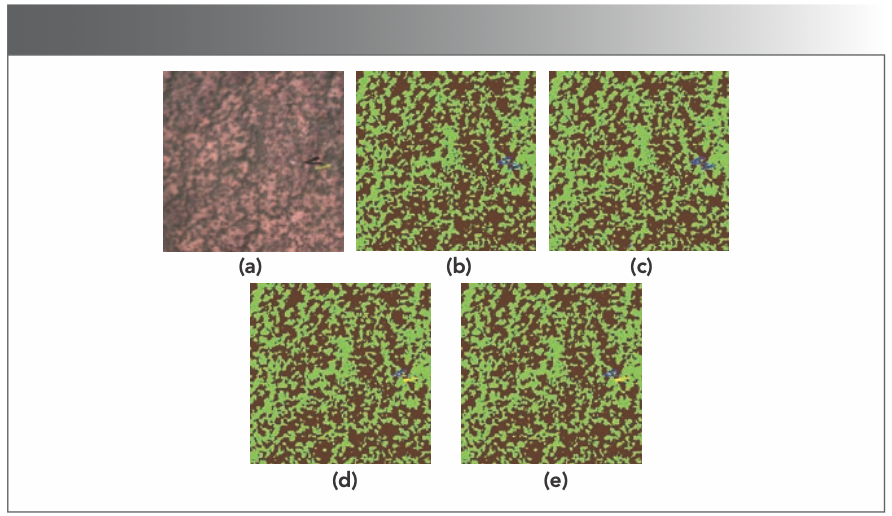

The classification accuracy and overall accuracy of the four deep learning models, VGG16, ResNet18, 3D-VGG16, and 3D-ResNet18, are shown in Table IV. The accuracy values in the table are averages obtained from five runs of each model. From Table IV, it can be seen that the VGG16 model classifies vegetation and soil well, with classification accuracies of 97% and 97.7%, respectively. However, it performs poorly for small samples (shadow, others) and is unable to classify others; it has an overall classification accuracy of 96.97%. ResNet18 also classifies vegetation and soil well, with accuracies of 95.1% and 96.0%, respectively. It performs poorly for small sample features (shadow, others) and shows poor separability for others, with a 26.5% classification accuracy. The 3D-VGG16 and 3D-ResNet18 models, in which the 2D convolutional kernels are replaced by 3D convolutional kernels, not only have higher overall classification accuracy but also significantly improve the classification performance for small target features. The classification accuracy of 3D-VGG16 for vegetation and soil improved slightly, to 97.1% and 97.7%, respectively, and its classification performance for small samples (shadow, others) improved significantly, to 76.6% and 76.6%, respectively. 3D-ResNet18 had the best classification performance, with 97.4% and 98.0% for vegetation and soil, respectively, a significant improvement for small samples (shadow, others), with 93.3% and 92.8%, respectively, and an overall accuracy of 97.33%. The classification results obtained by the four deep learning models are shown in Figure 3.

FIGURE 3: (a) False color image, (b) VGG16, (c) ResNet18, (d) 3D-VGG16, and (e) 3D-ResNet18. The x- and y-axes are the X- and Y- spatial dimensions.
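Per-class and overall accuracies of the kind reported in this section can be computed from a confusion matrix, as in the sketch below. It assumes that "classification accuracy" for a class means the fraction of that class's test pixels that were labeled correctly (often called producer's accuracy or recall); the study does not define the metric explicitly. The labels in the example are synthetic, not the study's data.

```python
import numpy as np

def confusion_matrix(y_true, y_pred, n_classes):
    """Rows are true classes, columns are predicted classes."""
    cm = np.zeros((n_classes, n_classes), dtype=np.int64)
    np.add.at(cm, (y_true, y_pred), 1)
    return cm

def per_class_and_overall_accuracy(cm):
    """Per-class accuracy (correct / true count per class) and overall accuracy."""
    support = cm.sum(axis=1)
    per_class = np.divide(np.diag(cm), support,
                          out=np.zeros(len(cm)), where=support > 0)
    overall = np.trace(cm) / cm.sum()
    return per_class, overall

# Synthetic labels: 0 = vegetation, 1 = soil, 2 = shadow, 3 = others.
y_true = np.array([0, 0, 0, 1, 1, 2, 3, 3])
y_pred = np.array([0, 0, 1, 1, 1, 2, 3, 0])
cm = confusion_matrix(y_true, y_pred, n_classes=4)
per_class, overall = per_class_and_overall_accuracy(cm)
print(per_class, overall)
```

Because vegetation and soil dominate the pixel counts, overall accuracy can stay high even when a small class such as others is misclassified, which is why the per-class values are reported alongside it.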

The following conclusions can be drawn from Figure 3 and Table IV: (1) All four models have good classification performance for vegetation and soil in hyperspectral images. Compared with the original models, the classification accuracy of 3D-VGG16 and 3D-ResNet18, which use 3D convolution kernels, improved slightly (1–2%). The 3D-ResNet18 model has the best classification accuracy. (2) The classification performance of the four models for small samples of features (shadow, others) in hyperspectral images varied greatly. Compared with the original models, the classification accuracy of the 3D-VGG16 and 3D-ResNet18 models was greatly improved. The 3D-ResNet18 model exhibited the best classification accuracy. (3) In terms of overall classification performance, the ResNet18 model is superior to VGG16 because of its residual block structure and larger network depth. After both network models are converted to 3D convolutional kernels, they still show the same classification performance pattern.

The 3D convolution kernel network models show better classification performance not only for small sample features (shadow, others) but also for locally fragmented and scattered parts of vegetation and soil. This is because 3D convolution kernels extract information along three dimensions of the hyperspectral data simultaneously (the X and Y spatial dimensions and the spectral dimension), whereas 2D convolution kernels extract information only along the X and Y dimensions. The 3D convolutional kernels are therefore more capable of extracting fine features.

Conclusions

The low-altitude UAV hyperspectral remote sensing system proposed in this study can collect vis-NIR (400–1000 nm) spectral information of grassland desertification features with a spectral resolution of 2.4 nm and 256 spectral channels. The spatial resolution of the hyperspectral data is 2.6 cm/pixel at a flight altitude of 30 m, and a single sortie of 20 min can collect 1.36 hm² of remote sensing data. The obtained data combine rich waveband information, high spectral resolution, high spatial resolution, and high efficiency, which enriches the spatial scale of grassland desertification remote sensing research and provides a hardware-software integration approach for grassland desertification remote sensing.

The two classical deep learning network models, VGG16 and ResNet18, used in this experiment achieved good classification results for vegetation and soil in remote sensing images, while the 3D-VGG16 and 3D-ResNet18 models, which use 3D convolution kernels, classified fragmented parts of vegetation and soil and small sample features that were difficult to identify more effectively. Our results can act as benchmarks in the identification, classification, and inversion of fine-scale features such as plant species in remote sensing images of grassland desertification, laying the foundation for holistic ecosystem studies of desertified grasslands. Our approach offers an effective deep learning method for fine classification of desertified grassland features based on hyperspectral remote sensing.

Acknowledgments

This study was financially supported by the National Natural Science Foundation of China [No. 31660137] and Research Program of Science and Technology at Universities of Inner Mongolia Autonomous Region [No. NJZY21518].

Author Contributions

Yanbin Zhang was the primary contributor to this manuscript. He was mainly responsible for data collection and analysis and wrote the first draft of the manuscript. Weiqiang Pi made substantial contributions to the design of the experimental scheme and the use of equipment. Xinchao Gao helped design the four deep learning models. Yuan Wang contributed to the editing of the manuscript and improved the illustrations.

References

(1) H.Q. Li, J. For. Res. 17(1), 11–14 (2004).

(2) R.P. Zhou, J. Geogr. Inf. Sci. 21(5), 675–687 (2019).

(3) L. Yi, Z.X. Zhang, X. Wang, and B. Liu, Trans. Chin. Soc. Agric. Eng. 29(6), 1–12 (2013).

(4) N. Meyer, J. For. 104(6), 329–331 (2006).

(5) P. Li, J.C. Hadrich, B.E. Robinson, and Y.L. Hou, Rangeland J. 40(1), 77–90 (2018).

(6) Y.Q. Li, X.H. Yang, and H.Y. Cai, Land Degrad Dev. 31(14), 50–63 (2020).

(7) G.D. Ding, T.N. Zhao, J.Y. Fan, and H. Du, J. Beijing For. Univ. 26(1), 92–96 (2004).

(8) F.F. Cao, J.X. Li, X. Fu, and G. Wu, Ecosyst. Health Sustainability 6(1), 654–671 (2020).

(9) Y.Y. Zhao, G.L. Gao, S.G. Qin, M.H. Yu, and G.D. Ding, J. Arid Land 33(5), 81–87 (2019).

(10) G.Y. Ebrahim, K.G. Villholth, and M. Boulos, Hydrogeol. J. 27(6), 965–981 (2019).

(11) M. Reddy, A. Mitra, I. Momin, and D. Pai, Int. J. Remote Sens. 40(12), 4577–4603 (2019).

(12) S. De Petris, P. Boccardo, and E. Borgogno, Int. J. Remote Sens. 40(19), 7297–7311 (2019).

(13) A. Sajjad, J.Z. Lu, X.L. Chen, C. Chisega, and S. Mahmood, Appl. Ecol. Environ. Res. 17(6), 14121–14142 (2019).

(14) W. Yin, W.G. Li, S.H. Shen, and Y. Huang, J. Triticeae Crops 38(1), 50–57 (2018).

(15) A. Yagci, L. Di, and M.X. Deng, GISci. Remote Sens. 52(3), 290–317 (2015).

(16) Supriatna, Rokhmatuloh, A. Wibowo, I. Shidiq, G. Pratama, and L. Gandharum, Int. J. Geomate. 17(62), 101–106 (2019).

(17) Q.X. Yi, A. Bao, Q. Wang, and J. Zhao, Comput. Electron. Agric. 90(6), 144–151 (2013).

(18) C. Yang and J. Everitt, Precis. Agric. 12(1), 62–75 (2012).

(19) Y.P. Cai, K.Y. Guan, D. Lobell, A. Potgieter, S. Wang, J. Peng, and T.F. Xu, Agric. For. Meteorol. 274(8), 144–159 (2019).

(20) I. Ahmad, U. Saeed, M. Fahad, A. Ullah, M. Ramman, A. Ahmad, and J. Judge, J. Indian Soc. Remote Sens. 46(10), 1701–1711 (2018).

(21) C. Feduck, G.J. Mcdermid, and G. Castilla, Forests 9(7), 66–75 (2018).

(22) Q. Yang, L.S. Shi, J.Y. Han, Y.Y. Zha, and P.H. Zhu, Field Crops Res. 235(4), 142–153 (2019).

(23) H. Wang, A.K. Mortensen, P.S. Mao, B. Boelt, and R. Gislum, Int. J. Remote Sens. 40(7), 2467–2482 (2019).

(24) J.C. Tian, Z.F. Yang, K.P. Feng, and X.J. Ding, Trans. Chin. Soc. Agric. Mach. 51(8), 178–188 (2020).

(25) S. Zhang, Y.J. Zhao, Y.F. Bai, L. Yang, and Z.Y. Sun, Tropical Geography. 39(4), 515–520 (2019).

(26) X.Y. Dong, Z.C. Zhang, R.Y. Yu, Q.J. Tian, and X.C. Zhu, Remote Sens. 12(1), 1–15 (2020).

(27) X.Y. Dong, J.G. Li, H.Y. Chen, and L. Zhao, J. Remote Sens. 23(6), 1269–1280 (2019).

(28) E. Brunori, M. Maesano, F. Moresi, and A. Antolini, J. Sci. Food Agric. 100(12), 4531–4539 (2020).

(29) X.Y. Zhang, J.M. Zhao, G.J. Yang, and J.G. Liu, Remote Sens. 11(23), 232–243 (2019).

(30) J.B. Yue, G.J. Yang, Q.J. Tian, and H.K. Feng, ISPRS J. Photogramm. Remote Sens. 150(4), 226–244 (2019).

(31) A.M. Zhu, G.D. Han, J. Kang, K. Zhao, and Y. Zhu, Acta Agrestia Sinica. 27(6), 1459–1466 (2019).

(32) Z.Z. Wang, X.H. Song, Y. Wang, and Z.W. Wang, Chin. J. Grassl. 42(1), 111–116 (2019).

(33) L.X. Xu, R. Meng, and Z.J. Mu, J. Inner Mongolia Agric. Univ. 29(2), 64–67 (2008).

(34) W. Zhao and H. Zhang, Proceedings of 2012 Int. Conf. Computer Science and Electronics Engineering (ICCSEE2012), 23–25 (2012).

(35) W.Q. Pi, Y.G. Bi, J.M. Du, X.P. Zhang, Y.C. Kang, and H.Y. Yang, Spectroscopy 35(3), 31–35 (2020).

(36) X.Y. Zhang, J.H. Zou, K.M. He, and J. Sun, IEEE Trans. Pattern Anal. Mach. Intell. 38(10), 1943–1955 (2016).

(37) K.M. He, X.Y. Zhang, A.Q. Ren, and J. Sun, 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 770–778 (2016).

(38) X.F. Liu, Q.Q. Sun, Y. Meng, M. Fu, and S. Bourennane, Remote Sens. 10(9), 31–35 (2018).

Yanbin Zhang, Jianmin Du, Weiqiang Pi, Xinchao Gao, and Yuan Wang are with the Mechanical and Electrical Engineering College at Inner Mongolia Agricultural University, in Hohhot, China. Zhang is also with the Vocational and Technical College at Inner Mongolia Agricultural University in Baotou, China. Direct correspondence to: jwc202@imau.edu.cn
