Validation of Spectrometry Software: Critique of the GAMP Good Practice Guide for Validation of Laboratory Computerized Systems

This month's "Focus on Quality" presents a critique of the GAMP Good Practice Guide. And while columnist Bob McDowall finds much to recommend in the guide, he finds much more cause for concern.

In this column over the past few years, I have not discussed guidance documents on computer validation in any great detail; instead, I have started the discussion of each specific topic from the regulations themselves. This is because most guidance has concentrated on manufacturing and corporate computerized systems rather than laboratory systems, including spectrometers.

This has changed with the publication of the Good Automated Manufacturing Practice (GAMP) Forum's Good Practice Guide (GPG) on Validation of Laboratory Computerized Systems (1). However, this publication needs to be compared and contrasted with the AAPS publication on Qualification of Analytical Instruments (AIQ) (2). Both publications have been written by a combination of representatives from the pharmaceutical industry, regulators, equipment vendors, and consultants.

Overview of the Guide

Published in 2005, the stated aim of the GPG is to develop a rational approach for computerized system validation in the laboratory and provide guidance for strategic and tactical issues in the area. Section 5 of the GPG also notes that: ". . . the focus should be on the risk to data integrity and the risk to business continuity. The Guide assumes that these two factors are of equal importance" (1).

However, the GPG notes that companies must establish their own policies and procedures based upon their own risk management approaches. Of interest, the inside page of the GPG states that if companies manage their laboratory systems with the principles in the guide there is no guarantee that they will pass an inspection, and therefore: caveat emptor!

The guide consists of a number of chapters and appendices as shown in Table I. As you can see, the order of some of the chapters is a little strange. For example, why is the validation plan written so late in a life cycle, or why is the chapter on training of personnel positioned after the validation report has been written? However, at least the main computer validation subjects are covered in the whole life cycle, including system retirement. The GPG also cross references the main GAMP version 4 publication for a number of topic areas for further information wherever it is appropriate (3).

Table I: Contents of the GAMP GPG on validation of laboratory computerized systems

One major criticism is that the nine references cited in Appendix 5 are very selective and therefore, the GPG ignores some key publications in this area:

- Furman and colleagues (4) on the debate of holistic (or system) versus modular validation or qualification. This paper was written by FDA personnel on the validation of computerized chromatographic equipment; ignoring it is not an option as it provides a scientific rationale for this two-level approach.

- PDA Technical Report 18 (5) on validation of computer-related systems that contains a much more specific computer validation definition than the FDA process validation definition quoted in Section 3.1 of the GPG (6).

- AAPS Analytical Instrument Qualification (2) white paper published in 2004, which was the outcome of a joint FDA-AAPS conference from 2003.

This biases the approach that this guide has taken and is a fatal flaw, as we shall discuss later in this column.

Overall, the problem with this GPG is that you have to cherry-pick the good bits from the bad. As with any performance appraisal system, let's start with the good news first and work our way downhill afterwards.

The Good News

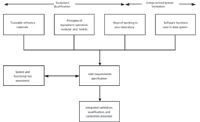

Life Cycle Models for Development and Implementation: The best parts of the GAMP laboratory system GPG are the life cycle models for both development and implementation of computerized laboratory systems (1). The writers of the guide are to be congratulated for producing life cycle models for development and implementation that reflect computerized systems rather than manufacturing and process equipment presented in the original GAMP guide (3). The latter V model life cycle is totally inappropriate for computerized and laboratory systems as it bears little comparison with reality. The problem with the GAMP V model was that immediately after programming the system, it undergoes installation qualification (IQ). Unit, module, and integration or system testing is conveniently forgotten, ignored totally, or implied rather than explicitly stated. The two models illustrated in the laboratory GPG are shown in Figure 1. The lefthand side shows the system development life cycle that is intended for more complex systems, and the righthand side shows the system implementation life cycle (SILC) for simpler systems.

Figure 1: System development and system implementation life cycles (adapted from the GAMP Laboratory GPG). Note that the life cycle phase is only applicable to a commercially available configurable software application.

This reflects the fact that we can purchase a system, install it, and then operate it as shown on the righthand side of Figure 1. The vast majority of equipment and systems in our laboratories are similar to this.

BUT . . .

Consider the question: has the SILC oversimplified the implementation process for all spectroscopy and other laboratory computer systems?

The Argument for the SILC: For most spectrometers in a post-Part 11 world, you will need to add user types and users to the system, which will need to be documented for regulatory reasons (for example, authorized users and access levels required by both predicate rules and 21 CFR 11). However, after doing this for simpler spectrometers, the system can go into the performance qualification stage; this is not mentioned specifically in the SILC.

The Argument Against the SILC: The software used in some spectrometers and other computerized systems might need to be configured (a term for selecting an option in the software to alter its function within limits set by the vendor). This can be as simple as selecting which function will be used from two or three options or, in more complex systems such as a laboratory information management system (LIMS), using a language to set up a laboratory procedure or method. This factor is not accommodated specifically in either of the life cycle models. However, regardless of the approach taken, the system configuration must be documented, partly so that your business can reconfigure the system in case of disaster, but also for performing the validation.

In my view, the concept of the SILC is good but the scope and extent of it can be taken further than the GPG suggests. Furthermore, it also can be aligned with the existing software categories contained in Appendix M4 of the current GAMP Guide. The rationale is that many laboratory systems are configured rather than customized; therefore, we need more flexibility than the simple SILC presented in the GPG. This is where the GAMP software categories, outlined in Version 4 Appendix M4, come in and where the GPG makes computer validation more complex than it needs to be.

Figure 2 is my attempt to take the SILC principles further than the GPG and align them with the existing GAMP software categories. A user requirements specification is the entry point for all variations illustrated in Figure 2; the exit from this figure is the route to the qualification and configuration of the system.

Figure 2: Modified system implementation life cycle options and macro development.

GAMP 3 Software: This is commercial, off-the-shelf software (COTS). The SILC for this type of software is shown on the righthand side of Figure 2. In essence, this is a modification of the GPG implementation cycle in which the documentation of security, access control, and any other small software configurations for run time operation are substituted for the design qualification.

GAMP 4 Software: This is configurable, commercial, off-the-shelf software (configurable COTS). Once the software functions have been understood, an application configuration specification can be written that will state what functions in the software will be used, turned on, turned off, or modified. After the software has been installed and undergone the IQ, and OQ has been performed, then the software can be configured according to the configuration specification documents.

GAMP 5 Software: This is usually a unique and custom application. However, in the context of spectrometry software, this is typically a custom calculation, a macro, or a custom program that is written to perform a specific function. Therefore, both GAMP 4 and GAMP 5 software can exist in the same system, and the GAMP 5 aspects are typically an addition to the normal functionality of the spectrometry software rather than a substitute.

Therefore, the life cycles for a spectrometer in this category will be the GAMP 4 software (central flow in Figure 2) plus the additional steps for GAMP 5 macros or calculations (pictured on the lefthand side of the same diagram). Here, there must be a specification for the macro (name plus version number), the calculation or the programming or recording of the macro. Against this will be formal testing to ensure that the functionality works as specified. Once this has been performed, then the macro is installed with the application, and it is tested under the PQ phase of validation as an integral part of the overall system.
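The three variations described above can be sketched as a simple lookup. This is only an illustration of the flow described in the text: the step names are my paraphrases, the function name is hypothetical, and the position of the macro steps relative to the configuration steps is an assumption, because the text states only that the macro is tested under PQ as part of the overall system.

```python
# Step names paraphrase the text describing Figure 2; they are illustrative
# labels, not terms taken from the GAMP Guide or the GPG.
COTS_STEPS = [
    "document security, access control, and run-time configuration",
]

CONFIGURABLE_COTS_STEPS = [
    "write application configuration specification",
    "install software and perform IQ",
    "perform OQ",
    "configure software per the configuration specification",
]

MACRO_STEPS = [
    "write macro or calculation specification (name plus version)",
    "program or record the macro",
    "formal testing against the macro specification",
    "install macro with the application",
]


def implementation_steps(categories: set[int]) -> list[str]:
    """Return an ordered list of steps for the GAMP software categories present.

    Assumption: a system with Category 5 elements follows the Category 4
    flow plus the macro steps, and everything ends in PQ.
    """
    steps = ["user requirements specification"]  # entry point for all variants
    if 4 in categories or 5 in categories:
        steps += CONFIGURABLE_COTS_STEPS
    else:
        steps += COTS_STEPS
    if 5 in categories:
        steps += MACRO_STEPS
    steps.append("performance qualification (PQ) of the overall system")
    return steps


# A spectrometer with configurable software and a custom macro.
for step in implementation_steps({4, 5}):
    print(step)
```

Only the steps named in the text are listed; a real procedure would add whatever else your own validation policy requires.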

The Bad News

Here, in my view, is where the GPG creates problems rather than solves them. My rationale is that computer validation is considered difficult by some people, and therefore, conceptual simplicity is a key issue for communication and understanding to ensure that we do not do more than is necessary, dependent upon the risk posed by the data generated by a spectrometer. In the words of Albert Einstein: "Make it as simple as possible — but no simpler." Don't look for simplicity in certain sections of this guide, as it's not there.

Do We Validate or Qualify?

There is always a debate in the laboratory between qualification or validation of laboratory equipment and computerized systems. Section 3.1 of the GPG discusses the qualify-equipment-or-validate-computer-systems debate; it proposes to simplify the approach by classifying all equipment and systems under the single topic of "validation." However, this goes against how the rest of the organization works; it is important to emphasize that laboratories are not unique islands inside an organization. Rather, they are an integral component of it.

The inclusion of any item of laboratory equipment with a computer chip from a pH meter (GAMP Category 2 software) upwards as a "computerized laboratory system" is wrong, in my view, as it will create much confusion, especially as it goes against the advice of the GAMP Guide in Appendix M4, which states that the validation approaches for Category 2 systems consist of qualification steps or activities. Therefore, we now have conflicting guidance from the same organization on the same subject — you can't make this stuff up!

The GPG is doubly wrong following the publication of the draft general chapter <1058> entitled "Analytical Equipment Qualification for the United States Pharmacopoeia," as it kills dead the rationale for including everything under the term validation. I will discuss this publication under the AAPS AIQ later in this column.

Therefore, let us get the terminology right.

We qualify: Instruments and Equipment.

We validate: Systems, Processes, Methods.

We also calibrate: Instruments and Equipment.

These simple principles are easy to grasp and allow any laboratory to make full and flexible use of risk-based approaches to regulatory compliance. You do not usually need to do as much work to qualify an instrument for its intended purpose as you would to validate a computerized system. The reason, in overview, is that for a computerized system you typically need to qualify the instrument as well as validate the software, which implies more work because it is usually a more complex system.

BUT . . .

Is this separation of "qualify equipment" and "validate systems" too simplistic? Yes, for two reasons:

- Do we have clear and agreed definitions of "equipment" and "system"? No.

- Have we forgotten that most spectrometers have both the instrument (equipment) and system components? Yes.

You can't operate the equipment without the system and vice-versa. Therefore, we need an integrated approach to these two issues, and this will be discussed at the end of this column.

The debate also is clouded by the lack of a suitable definition of "qualification," as it is a difficult word to define because it is used in a variety of ways, for example, in Design, Installation, Operational, and Performance Qualification. A definition for qualification is found in ICH Q7A GMP for active pharmaceutical ingredients as:

Action of proving and documenting that equipment or ancillary systems are properly installed, work correctly, and actually lead to the expected results. Qualification is part of validation, but the individual qualification steps alone do not constitute process validation. (10)

The first part of the definition is fine for equipment but the qualifying (sorry!) sentence means that qualification is inextricably linked to validation here. So we have a problem. However, a PIC/S guidance document with the snappy title of Validation Master Plan, Installation and Operational Qualification, Non-Sterile Process Validation, Cleaning Validation (13) has some thoughts on the qualification versus validation debate:

2.5.2 The concept of equipment qualification is not a new one. Many suppliers have always performed equipment checks to confirm functionality of their equipment to defined specifications, both prior to and after installation. (13)

So for the purposes of our discussion, we can start to tease out what a qualification process actually is:

- Equipment is specified by the laboratory

- Installation is properly carried out

- Equipment works correctly

Of course, all stages are associated with appropriate documentation.

We must also consider the requirements of the GMP regulations under §211.160(b) for scientific soundness:

Laboratory controls shall include the establishment of scientifically sound and appropriate specifications, standards, sampling plans, and test procedures designed to assure that components, drug product containers, closures, in-process materials, labelling, and drug products conform to appropriate standards of identity, strength, quality and purity. (7)

Therefore, the specifications for equipment and computerized systems and the tests used to qualify or validate them should be grounded in good science and include, where necessary, the use of traceable reference standards.

And don't forget the impact of calibration, both on a formal basis against traceable standards (typically after a spectrometer has been serviced) and on a regular basis before a system is used to make a measurement (for example, checking the mass-to-charge ratio of a known compound on a mass spectrometer). This all adds up to science-based control of the system, the spectrometer, and potentially also a method.
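As a minimal sketch of the regular pre-use check mentioned above, the mass-to-charge ratio measured for a known compound can be compared with its reference value. The function names, the 5 ppm tolerance, and the m/z values are all assumptions chosen for illustration; your own acceptance limit should come from the specification for the instrument and method.

```python
def ppm_error(measured_mz: float, reference_mz: float) -> float:
    """Mass error in parts per million relative to the reference value."""
    return (measured_mz - reference_mz) / reference_mz * 1e6


def passes_mass_check(measured_mz: float, reference_mz: float,
                      tolerance_ppm: float = 5.0) -> bool:
    """True if the measured m/z lies within the tolerance.

    The 5 ppm default is a hypothetical limit, not a regulatory value.
    """
    return abs(ppm_error(measured_mz, reference_mz)) <= tolerance_ppm


# Illustrative values only.
REFERENCE_MZ = 195.0877
print(passes_mass_check(195.0882, REFERENCE_MZ))  # within 5 ppm
print(passes_mass_check(195.0900, REFERENCE_MZ))  # outside 5 ppm
```

Recording the measured value, the reference value, and the result each time gives documented evidence that the check was performed.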

Categorization of Laboratory Systems

Section 2 of the Lab GPG (1) notes: In GAMP 4, systems are viewed as a combination of individually categorized software and hardware elements. The proposed approach in this guide is that Laboratory Computerized Systems can be assigned a single classification based upon the technical complexity of the system as a whole, and risk to data integrity.

In Appendix M4 of the GAMP Guide is a classification of software into five categories from operating systems (Category 1) to custom or bespoke software (Category 5). This is shown in Figure 3 on the lefthand side. Note, as we have discussed earlier, that more than one class of software can exist in a system; for example, GAMP Categories 1 and 3 for a basic UV–vis spectrometer commercial off-the-shelf package running on a PC plus Category 2 firmware within the spectrometer.

In an attempt to be all encompassing for laboratory systems, the GPG has included all instruments, equipment, or system with software of any description. Instead of five categories of software, we now have seven (Categories A to G). The categories that have been devised for the Laboratory GPG are based upon four principles.

Configuration: The software used in the system varies from firmware that cannot be modified, to parameterization of firmware operating functions, proprietary configurable elements up to bespoke software (these are encompassed in GAMP version 4 software categories 2–5).

Interfaces: From standalone instruments, to a single interface to another system, through to multiple interfaces to the system.

Data Processing: From conversion of analogue to digital signals to postacquisition processing.

Results and Data Storage: From no data generated to methods, electronic records, and postacquisition processing results.

However, the approach outlined in the GPG is wrong again, as it separates and isolates the laboratory from the rest of the organization when in reality, it is an integral part of any regulated operation from R&D to manufacturing. We cannot have an interpreter at the door of the laboratory who interprets the GAMP categories used in the rest of an organization to Lablish (laboratory computerized system validation English). There must be a single unified approach to computerized system validation throughout an organization at a high level that acknowledges that there will be differences in approach as one gets closer to the individual quality systems (for example, GMP, GLP, and so forth) and the individual computer systems. To do otherwise is sheer stupidity.

Do You Really Want to Validate a Dishwasher?

Some of the typical systems classified by the GPG are shown in Figure 3 on the righthand side. In contrast, the lefthand side and center columns show how systems from the traditional GAMP software categories map to the new GPG categories. You'll also note that a system can be classified in more than one GPG class depending on the software functions. In devising this classification system, the GPG proposes to include balances, pH meters, centrifuges, and glass washers as "laboratory computerized systems." Strictly speaking, this is correct — the equipment mentioned earlier all has firmware or ROM chips that allow the system to function.

Figure 3: Classification of laboratory systems by GAMP Main Guide and the Laboratory GPG.

According to the main GAMP Guide, all these items of equipment would be classified as Category 2 and "qualified" as fit for intended use. Under the GPG, they are split into two classes (A and B) and are "validated" as fit for purpose. The comparison of the GAMP Guide and the GPG software classifications is shown in Figure 3 on the righthand side of the diagram, and the arrows in the middle indicate how the two classification systems map to each other.

The horror that some of you might be experiencing now around the suggestion to validate a balance, pH meter, or centrifuge is more about terminology used rather than the work that you would do. Moreover, as we get to more complex laboratory systems such as LIMS, the GPG suggests that GAMP 4 categories might be more suitable! It really depends upon the functions that the equipment or system does and how critical it is.

Table II shows the comparison between the GAMP guide and the GPG for classifying typical laboratory systems. In the latter case, the same instrument appears in two or three categories, for example, the near-infrared (NIR) spectrometer. Looking at most of today's NIR systems, it is difficult to imagine that some can fit into Lab Category C and D (equivalent to GAMP Category 3) as shown in Table II, especially when used for identification of raw materials via user-developed libraries, as this is specific to an individual organization. The same can be applied to a nuclear magnetic resonance (NMR) instrument, which is Lab Category E (again GAMP Category 3), in which a user can develop custom macros to perform specific functions.

Table II: Comparison of system classification by GAMP and the laboratory GPG

Risk-Assessment Methodology

Ok, if you managed to get this far, we now have the finishing touch: the risk-assessment methodology. GAMP 4 uses a modified Failure Mode Effect Analysis (FMEA) risk-assessment methodology, as outlined in Appendix M3 (3), which also has been adapted for laboratory systems in the GPG. Why this overly complex methodology was selected for laboratory systems is not discussed, although I suspect that it is aimed at consistency throughout the GAMP series of publications. The overall process flow for the risk assessment is shown in Figure 4, with the first three steps at the system level, and the last two at the individual requirement level.

Figure 4: GAMP GPG risk-management process.

FMEA originally was developed for risk assessment for new aeroplane designs in the late 1940s and has been adapted over time to encompass new designs and processes. However, as the majority of laboratory equipment and software used in laboratories is available commercially and is purchased rather than built from scratch, why is this inappropriate methodology being applied? Commercially available instruments and systems already have been tested by the vendors, which can be verified by audits. Therefore, why should a risk-analysis methodology that is very effective for new designs and processes be dumped on laboratories using mainly commercial systems? There are alternative and simpler risk analysis approaches that can be used for the commercial off-the-shelf and configurable COTS software applications used throughout laboratories. For example, there are also:

- Hazard Analysis and Critical Control Points (HACCP)

- Functional Risk Assessment (FRA)

A detailed discussion of risk management is outside the scope of this column, but I have written a recent paper on the subject that some of you might find useful, as it compares the various methodologies available (8).

The GPG uses a Boston grid for determining system impact, which is outlined in Appendix 1 of the document (1). However, as there are seven classes of laboratory instrumentation and five classes of business impact, this requires a 7 × 5 Boston grid. This overcomplicates the issue and is not easily manageable. Moreover, as some systems can be classified in a number of laboratory categories, there is a possibility that the impact of a system can be underestimated.
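To make the size of the problem concrete, here is a small sketch that enumerates the grid and shows how a system assigned to more than one laboratory category lands in more than one cell. The numeric business impact labels and the function name are assumptions for illustration; the GPG's own labels are not reproduced here.

```python
from itertools import product

LAB_CATEGORIES = ["A", "B", "C", "D", "E", "F", "G"]  # seven GPG categories
BUSINESS_IMPACT_CLASSES = [1, 2, 3, 4, 5]  # placeholder labels (assumption)

# Every combination of laboratory category and business impact class.
GRID_CELLS = list(product(LAB_CATEGORIES, BUSINESS_IMPACT_CLASSES))


def candidate_cells(lab_categories, business_impact):
    """Return every grid cell a system could occupy for one impact class."""
    return [(category, business_impact) for category in sorted(lab_categories)]


print(len(GRID_CELLS))                  # 35
print(candidate_cells({"C", "D"}, 3))   # [('C', 3), ('D', 3)]
```

With two possible cells for one instrument, the assessed impact depends on which cell the assessor happens to pick, which is where the risk of underestimation comes from.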

Testing Approach Versus Intended Purpose

Throughout the GPG, there appears to be an emphasis on managing regulatory risk. This is in contrast to the introductory statements in the GPG mentioned at the start of this column. From my perspective, this is wrong and emphasis should be placed on defining the intended purpose of the system and hence, the functions of the instrument and software that are required first and foremost. Only then will you be able to assess the risk for the system based on the intended functions of the system.

The testing approach outlined in Sections 10 (Qualification, Testing and Release) and Appendix 2 should be viewed critically. Section 10 notes that for testing or verifying the operation of the PQ against user requirements, the following are usually performed:

- Verification of user SOPs

- Capacity testing (as required)

- Processes (between input and output)

- Testing of the system's backup and restore (as required)

- Security

- Actual application of the system in the production environment (for example, sample analysis)

Appendix 2 covering the testing priority is a relatively short section that takes each requirement in the URS and assesses risk likelihood (likelihood or frequency of a fault) versus the criticality of requirement or effect of hazard to classify the risk into one of three categories (category 1, 2, or 3). This risk classification is then plotted against the probability of detection to determine high, medium, and low priority of testing. A high risk classification, coupled with a low likelihood of detection, determines the highest class of test priority.

This probably encapsulates the overall approach of the guide – regulatory rationale rather than business approach. Using this approach, I believe that you will be carrying out overly complex and overly detailed risk assessments forever for commercial systems that constitute the majority of laboratory systems. What the writers of the GPG have forgotten is that the FDA has gone back to basics with Part 11 interpretation (9). Remember that the GMP predicate rules (21 CFR 211 and ICH Q7A for active pharmaceutical ingredients) for equipment/computerized systems state:

§211.63 Equipment Design, Size, and Location: Equipment used in the manufacture, processing, packing, or holding of a drug product shall be of appropriate design, adequate size, and suitably located to facilitate operations for its intended use and for its cleaning and maintenance. (7)

And:

ICH Q7A (GMP for active pharmaceutical ingredients), in §5.4 on Computerized Systems states in §5.42: Commercially available software that has been qualified does not require the same level of testing. (10)

The fundamental aim of any computerized system validation should be to define its intended use and then test it to demonstrate that it complies with specification. The risk assessment should focus the testing effort where it is needed most but built on the testing that a vendor has already done as the GPG notes on page 34 (1). Where a vendor has tested the system in the way that you use it (either in system testing or the OQ) then why do you need to repeat this?

Cavalry to the Rescue? The AAPS Guide on Instrument Qualification: As usual in the world, each professional group must have its own say in how things should be done. The American Association of Pharmaceutical Scientists (AAPS) is no exception and has produced a white paper entitled "Qualification of analytical instruments for use in the pharmaceutical industry; a scientific approach" (2). Of course, this is a different approach from GAMP. However, on the bright side, the dishwashers bit the dust long before the final version of this publication!

In contrast to the GAMP GPG, which looks at laboratory equipment from the computer perspective, the AAPS document looks at the same issue from the equipment qualification perspective. The AAPS white paper has devised three classes of instruments, with a user requirements specification necessary to start the process.

Group A Instruments: Conformance to the specification is achieved visually with no further qualification required. Examples of this group are ovens, vortex mixers, magnetic stirrers, and nitrogen evaporators.

Group B Instruments: Conformance to specification is achieved according to the individual instrument's SOP. Installation of the instrument is relatively simple and causes of failure can be observed easily. Examples of instruments in this group are balances, IR spectrometers, pipettes, vacuum ovens, and thermometers.

Group C Instruments: Conformance to user requirements is highly method-specific according to the guide. Installation can be complex and require specialist skills (for example, the vendor). A full qualification is required for the following spectrometers: atomic absorption, flame absorption, inductively coupled plasma, mass, Raman, UV–vis, and X-ray fluorescence.
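The three groups can be captured as a simple lookup built only from the examples listed above. The names and the function are hypothetical, and an instrument that is not in the lists deliberately returns no answer, because the white paper's examples are all this sketch has to go on.

```python
# Examples and approaches are taken from the group descriptions above.
AAPS_GROUP_EXAMPLES = {
    "A": ["oven", "vortex mixer", "magnetic stirrer", "nitrogen evaporator"],
    "B": ["balance", "ir spectrometer", "pipette", "vacuum oven", "thermometer"],
    "C": [
        "atomic absorption spectrometer",
        "flame absorption spectrometer",
        "inductively coupled plasma spectrometer",
        "mass spectrometer",
        "raman spectrometer",
        "uv-vis spectrometer",
        "x-ray fluorescence spectrometer",
    ],
}

QUALIFICATION_APPROACH = {
    "A": "visual conformance to specification; no further qualification",
    "B": "conformance to specification per the instrument's SOP",
    "C": "full qualification; conformance is highly method-specific",
}


def aaps_group(instrument: str):
    """Return the group letter for a listed instrument, or None if unlisted."""
    name = instrument.strip().lower()
    for group, examples in AAPS_GROUP_EXAMPLES.items():
        if name in examples:
            return group
    return None


for item in ["Balance", "Mass spectrometer", "NIR spectrometer"]:
    group = aaps_group(item)
    print(item, group, QUALIFICATION_APPROACH.get(group, "not listed"))
```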

So this approach is simpler, but the only consideration of the computer aspects is limited to data storage, backup, and archive. Thus, this approach is rather simplistic from the computer-validation perspective.

Furthermore, the definitions of IQ, OQ, and PQ are from the equipment qualification perspective (naturally), with operational release occurring after the OQ stage and PQ intended to ensure continued performance of the instrument. This is different from the GAMP GPG, which uses the computer-validation definitions of IQ, OQ, and PQ, in which PQ is end-user testing and operational release occurs after the end of the PQ phase (2). It is a great problem when two major publications cannot agree on terminology for the same subject.

However, the AAPS white paper is now the baseline document for the new proposed general chapter <1058> for the next version of the USP, the draft of which has just been published for comment in Pharmacopoeial Forum (11). This highlights the flawed approach of the GAMP GPG because there is now a de facto differentiation between laboratory equipment qualification and computer system validation that will be incorporated in the USP.

So are we any further forward? Not really. We are just nibbling at the problem from a different perspective but without solving it decisively. Consider the following issues that are not fully covered by the AAPS guide that will now be enshrined in a formal regulatory text:

- The scope of the guidance and proposed USP chapter is limited only to commercial off-the-shelf analytical instrumentation and equipment.

- The three instrument groups are described along with suggested testing approaches to be conducted for each. However, in my view, there is not sufficient definition of the criteria for placing instruments in particular groups.

- Group C instruments cover a wide spectrum of complexity and risk, and might have very diverse requirements. There is no specific allowance made within the approach for custom developed applications such as macros commonly found when operating spectrometers.

- The guide covers the initial qualification activities for analytical instruments but there is very little on the validation of the software that controls the instrument. There is little guidance on operational, maintenance and control activities following implementation such as access control, change control, configuration management, and data backup. How many spectrometers can you name that don't have computer-controlled equipment and data acquisition?

- The proposed chapter uses the term "analytical instrument qualification (AIQ)" to describe the process of ensuring that an instrument is suitable for its intended application, but the instrument is only a part of the whole computerized system.

Integrated Approach to Computer Validation and Instrument Qualification

What we really need for any regulated laboratory is an integrated approach to the twin problems of instrument qualification and computer validation. As noted by the GAMP GPG, the majority of laboratory and spectrometer systems come with some degree of computerization from firmware to configurable off-the-shelf software (1). The application software controls the instrument. If you qualify the instrument, you will usually need the software to undertake many of the qualification tests with an option to validate the software at the time.

BUT . . .

We look at the two issues separately.

Consider the AAPS Analytical Instrument Qualification Guide (2) and GAMP laboratory GPG (1) as two examples we have looked at in this column. They are looking at different parts of the same overall problem and coming up with two different approaches. It's no wonder we don't take a considered and holistic view of the whole problem.

For example, we use the same qualification terminology (IQ, OQ, and PQ) for both instrument qualification and computer system validation but they mean different things (12). This fact is exemplified in the two guides. Confused? You should be. If you are not — then you have not understood the problem!

Therefore, we need to develop the following guidance as a minimum:

- Integrated terminology covering both the qualification of the instrument and validation of the software. This must ensure that the laboratory is not separated from the rest of the organization and that it does not create a profession of "Lablish" interpreters.

- Page 6: I think that "qualify equipment" and "validate systems" is too simplistic, as there is no clear and generally agreed distinction between "equipment" and "system."

- Simple classification of laboratory equipment software, based upon the existing GAMP software categories to be consistent with the rest of the organization. The laboratory is not a unique part of a facility any more than is production.

- Realistic life cycle(s) based upon the further development of the simple SILC outlined in the GPG that reflect the different options that we face in the laboratory: from COTS to configurable COTS and where necessary, customization of an application.

- Writing a specification or specifications to document both the instrument and the associated software functions. Figure 5 shows one integrated approach, which considers the equipment operational requirements at both the modular and holistic levels together with the software functions required; both are based upon the way of working in a specific laboratory. The equipment qualification requirements for traceable reference standards can also be devised for input into the URS (12).

Figure 5: Integrated approach to laboratory instrument qualification and validation (modified from C. Burgess, personal communication).

- Simple risk assessment methodology that reflects the fact that the majority of instruments and systems are commercial.

- Integrated and practical approaches to combined equipment qualification and computer validation to test and demonstrate that the system does what it is intended to do.

I can go on (and usually do) in more detail, but the plain truth is that we don't have this holistic approach yet.

Summary

In today's risk-based environment, computer validation and equipment qualification should be getting easier, quicker, and simpler. Although the GAMP GPG for laboratory computerized systems was published in 2005, it reads as if it were published under the older and more stringent regulatory approach that existed before 2002 and the FDA's Pharmaceutical Quality Initiative in 2004.

The good points of this document are the great concept of the System Implementation Life Cycle and a realistic (at last!) System Development Life Cycle. Take and use these within your existing computer validation frameworks. You can ignore much of the rest; otherwise you will be separated from the rest of the computer validation world in your organization and do much more work than you do at the moment.

R.D. McDowall is principal of McDowall Consulting and director of R.D. McDowall Limited, and "Questions of Quality" column editor for LCGC Europe, Spectroscopy's sister magazine. Address correspondence to him at 73 Murray Avenue, Bromley, Kent, BR1 3DJ, UK.

References

(1) GAMP Forum Good Practice Guide — Laboratory Systems (International Society for Pharmaceutical Engineering, Tampa, Florida, 2005).

(2) S.K. Bansal, T. Layloff, E.D. Bush, M. Hamilton, E.A. Hankinson, J.S. Landy, S. Lowes, M.M. Nasr, P.A. St. Jean, and V.P. Shah, Qualification of Analytical Instruments for Use in the Pharmaceutical Industry: A Scientific Approach (American Association of Pharmaceutical Scientists, 2004).

(3) Good Automated Manufacturing Practice (GAMP) Guidelines, version 4 (International Society for Pharmaceutical Engineering, Tampa, Florida, 2001).

(4) W. Furman, R. Tetzlaff, and T. Layloff, J. AOAC Int. 77, 1314–1317 (1994).

(5) "Validation of Computer-Related Systems," Parenteral Drug Association Tech. Rep. 18, 1995 (Journal PDA, 49, S1–S17).

(6) FDA Guidance on Process Validation (1987).

(7) FDA Current Good Manufacturing Practice for Finished Pharmaceutical Products (21 CFR 211).

(8) R.D. McDowall, Quality Assurance J. 9, 196–227 (2005).

(9) FDA Guidance for Industry on Part 11 Scope and Application (2003).

(10) ICH Q7A Good Manufacturing Practice for Active Pharmaceutical Ingredients (2000).

(11) Pharmacopeial Forum, <1058> Analytical Equipment Qualification (January 2005).

(12) R.D. McDowall, Validation of Chromatography Data Systems: Meeting Business and Regulatory Requirements (Royal Society of Chemistry, Cambridge, UK, 2005).

(13) Validation Master Plan, Installation and Operational Qualification, Non-Sterile Process Validation, Cleaning Validation, PIC/S Guide PI 006-1 (PIC/S, Geneva, Switzerland, 2001).