Are You Ready for the Latest Data Integrity Guidance? Part 2: Computerized Systems, Outsourcing, Quality, and Regulatory Oversight

The new PIC/S data guidance document PI-041 was published in July 2021. This column is the second part of a review looking at the expectations for computerized systems, outsourcing, regulatory actions, and remediation of data integrity failures. Spoiler alert: You won’t like this column if you use hybrid systems.

The final version of the PIC/S guidance on Good Practices for Data Management and Integrity in Regulated GMP/GDP Environments, document number PI-041 (1), was published in July 2021. The document scope covers all activities under the remit of both Good Manufacturing Practice (GMP) and Good Distribution Practice (GDP) regulations. This column will only focus on laboratories working to GMP.

The last “Focus on Quality” column reviewed the first part of the document covering scope, data governance, organizational influences on data integrity (DI) management, general DI principles, and specific DI considerations for paper records (2). In this second part of the review, we focus on the requirements for computerized systems and take a high-level look at outsourcing, regulatory actions, and remediation of data integrity failures.

Specific DI Considerations for Computerized Systems

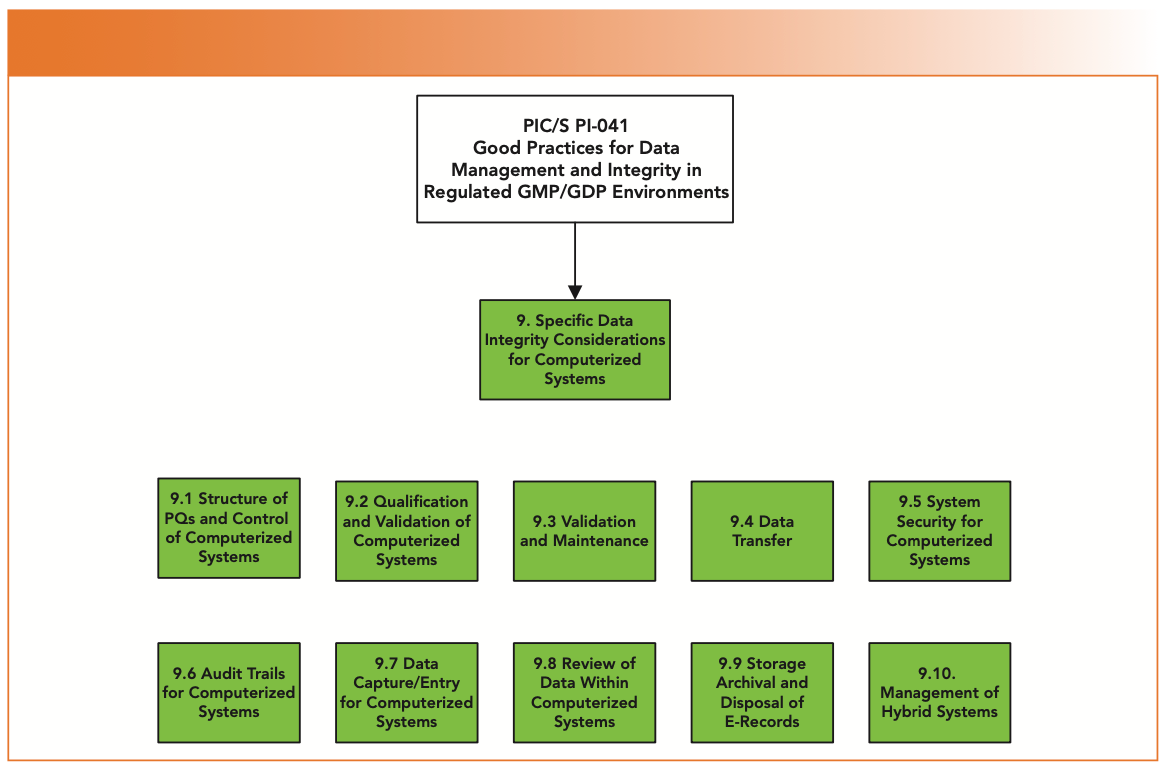

Section 9 of PI-041 is focused on specific considerations for ensuring data integrity for computerized systems (1). Section 9 is probably the most pertinent section for Spectroscopy readers because the majority of spectrometers have a workstation attached with application software to control the instrument and acquire, process, report, and store spectroscopic data. PI-041 section 9 consists of 10 subsections, as shown in Figure 1. This column covers the key sections from this guidance, starting with the Pharmaceutical Quality System, and the role it should (but often does not) play in controlling computerized systems.

FIGURE 1: Specific data integrity considerations for computerized systems (from PIC/S PI-041)

Role of the PQS in Controlling Computerized Systems

To ensure overarching control of computerized systems and the processes automated by them, the Pharmaceutical Quality System (PQS) needs to ensure the following:

- Evaluation and control of computerized systems to meet GMP regulations (essentially Chapter 4 and Annex 11 [3, 4])

- That a system meets its intended purpose

- Knowledge of the data integrity risks and vulnerabilities of each system and the ability to manage them.

- Knowledge of the impact each system has on product quality and the criticality of the system

- Ensuring that data generated by a system is protected from accidental and deliberate manipulation

- An assessment that suppliers of computerized systems have an adequate understanding of applicable regulations, and that understanding is reflected in the applications that they market

- An assessment of the criticality and risks to the data and metadata generated by each system. Data process mapping is one way to understand how the system operates, the metadata used to acquire and manipulate data, how the data are stored, and the access allowed to each user role.

These areas are discussed in more detail in the remaining subsections in this part of the PI-041 guidance.

Data Integrity and Compliance Starts with System Purchase

Do you have a death wish to keep perpetuating data integrity problems? If you are a DI team member, a CSV expert, or a consultant, you may have answered “Yes.” However, if you work in the laboratory and must trudge through miles of procedural controls to ensure data integrity, the answer should be a resounding “No.” See the discussion on the hidden factory in your laboratory for a detailed description of the pain that paper can cause (5). But that lovely, shiny new spectrometer that has just been delivered to resolve your problems has the same old issue as the current one: a lack of data integrity controls. If you want to make money out of data integrity, buy shares in paper companies. You focused on the instrument and not the software during the selection process, you naughty people!

To avoid perpetuating data integrity problems, it is essential that any new analytical instruments and computerized systems have adequate technical controls in the software to protect the electronic records. Clause 9.1.4 of PI-041 notes that laboratories must ensure that suppliers have an adequate understanding of regulations and data integrity requirements, and, as noted in 9.3 item 1, specific attention should be paid to the evaluation of data integrity controls (1). Purchase of inadequate instruments is still a problem, as shown by Smith and McDowall, who analyzed over 100 FDA citations for infrared instruments. Over 40% of the citations were for missing software controls, such as no security, conflicts of interest, or no audit trail, and these gaps were present before the instrument was purchased (6). Although there is the regulatory expectation that one should not purchase inadequate systems, as long as laboratories focus on the instrument and not the software, data integrity problems will be perpetuated.

It’s Only an FT-IR, Isn’t It?

Don’t forget how the Purchasing department can screw up data integrity. You may have spent time and effort in the evaluation of a specific instrument and its software, and the capital expenditure justification goes through the approval process. You await delivery of a shiny new red spectrometer, but a yellow one you rejected due to inadequate software turns up instead. The gnomes of Purchasing have struck again, saying, “I got this one $1,000 cheaper on eBay!” The excuse goes along the lines of “It’s only an FT-IR, isn’t it?” Purchasing needs to be brought into the data integrity loop; otherwise what hope is there for your organization?

Validation of Systems

Clause 9.2.2 makes an excellent point that a system may be validated, but the data may still be vulnerable (1); for more discussion on this point, please read a previous “Questions of Quality” column on this topic (7).

Section 9.3 has the first mention of the expectation and potential risk of not meeting regulatory expectations, with six topics for validation and maintenance (1). Listed below are the expectations and my comments; for the risks of not meeting them, you’ll have to read the document itself:

- Purchasing systems that have been properly evaluated, including their data integrity controls, and validating them for intended use is a key expectation that most people will ignore totally. We will revisit this later. Software configuration settings must be documented, as this is a key part of validation. This section also covers legacy systems: where data integrity controls are lacking, “additional controls” (meaning SOPs and training) should be implemented.

- There should be an inventory of all computerized systems, with risk assessments and data vulnerability assessments. Now, we have an inconsistency with clause 3.7 that states that this guide is not mandatory or enforceable under law. However, there is a legal requirement in Annex 11 for an inventory in clause 4.3, as well as application of risk management in clause 1 (3). Data process mapping to identify data integrity vulnerabilities, and then remediating them, is common sense in today’s regulatory environment (8).

- For new systems, a Validation Summary Report (VSR) is expected, along with a shopping list of items that need to be included, including a list of all users, configuration details, etc. (1). The writers of this document appear to have forgotten the middle word of what is being written: summary. A VSR is a concise document that should point to where such information can be found in the suite of validation documents. Who in their right mind would include a list of all current users in a VSR, especially for a large networked application? “Bonkers” is the politest word I can use.

- The validation and testing approach outlined is based on Annex 15 on Qualification and Validation (9), rather than Annex 11 for Computerized Systems (3). As such, it is a poor and apparently inflexible approach, being focused on manufacturing rather than laboratory systems, as it lists user requirement specifications (URS), design qualification (DQ), factory acceptance test (FAT), site acceptance test (SAT), installation qualification (IQ), operational qualification (OQ), and performance qualification (PQ) documents (1). How many laboratories perform FAT and SAT testing in their validations? This approach ignores clause 2.5 in Annex 15 allowing the merger of documents (9), and, furthermore, does not reference clause 1 of Annex 11 (3) regarding the use of risk management to determine the extent of validation work required. The list omits the configuration specification and, if custom software is being developed, functional and design specifications. In all, a poor set of expectations.

- Periodic review is another concept not mandatory or enforceable under law. However, we now reach the point where this becomes audience participation time in a British pantomime, where the evil regulatory villain shouts to the audience it’s not enforceable by law—and the laboratory audience shout back, “Oh, yes it is!” Have you ever read Annex 11 clause 11 on periodic evaluation (3)?

- We now come to the point in PI-041 where the regulatory enthusiasm for regular system updates (such as, for example, patches and application upgrades) meets the buffers of pharmaceutical reality—requirements for timely update of security patches (1). We assess this in a separate section.

Data Transfer

Another pantomime moment arrives when we consider section 9.4 and data transfer.

- The “not mandatory” or “enforceable under law” statement is inconsistent with item 1 about the need to validate data transfer. This is a specific requirement of clause 5 of Annex 11 (3).

- A good point regarding PI-041 is when software is updated, the laboratory should confirm that old data can still be read (1). This may be in the existing data format, or may require migration to a new data format, but this is another specific requirement in clause 4.8 of Annex 11 (3) that is legally enforceable.
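
One common way to generate evidence that a transfer has not altered a file is to compare a cryptographic checksum taken before and after the move. The Python sketch below is illustrative only: the function names and the choice of SHA-256 are my assumptions, not something that PI-041 or Annex 11 prescribes.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Return the SHA-256 hex digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def transfer_is_intact(source: Path, destination: Path) -> bool:
    """True only if the destination file is byte-for-byte identical to the source."""
    return sha256_of(source) == sha256_of(destination)
```

A matching checksum shows that the bytes are unchanged; it does not show that the application can still open and reprocess the file, which needs a separate check after a software update.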

Now we come to what we say in English is the pièce de résistance: When conversion to the new data format is not possible, the old software should be maintained. Aargh! Computer museum, here we come! The problem is that the life of the data exceeds the life of the instrument that created them. One way of keeping a system on life support could be virtualization, provided that the software does not have a dongle or require the instrument to be connected to operate. Expectation 3 also looks at the problem further, and suggests conversion to a different file format while acknowledging that data could be lost as a result, but that the process needs to be controlled and validated. The problem is that spectroscopic data are mainly dynamic, and guidance documents expect dynamic data to remain dynamic (1,10–12).

System Security and Access Control

Section 9.5 covers five pages as it sets the expectations for system security, appropriate access to user functions, configuration to ensure data integrity, network protection, and restrictions on use of USB devices (1). Rather than go through the shopping list of non-mandatory expectations, I’ll cherry-pick the key items from my perspective. In doing this, I’m making the assumption that you know about individual user identities with appropriate access privileges. Please read the guidance document to gain the full picture of the regulatory expectations here.

Strict segregation of duties is essential so that administrators are independent of laboratory users, which is reflected in several other regulatory guidances (1,10–12). However, one of the common questions that arises during training is if a laboratory has a standalone system with only a few users, how should this be achieved? The PIC/S guidance recognizes that this is a potential problem for smaller organizations, and suggests that one person can have two separate user identities or roles:

- An administrator with no user functions

- A user with no administrator functions.

However, if this option is selected, it is critical that the audit trail records the respective activities to demonstrate no conflict of interest (1).
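
The two-identity arrangement can be checked mechanically by confirming that no single account mixes administrator and user privileges. The following Python sketch shows one way to do it; the privilege names and the `Account` structure are hypothetical, since each data system defines its own.

```python
from dataclasses import dataclass

# Hypothetical privilege names; a real data system defines its own.
ADMIN_PRIVILEGES = {"manage_users", "configure_system", "configure_audit_trail"}
USER_PRIVILEGES = {"acquire_data", "process_data", "report_results"}


@dataclass(frozen=True)
class Account:
    login_id: str
    person: str
    privileges: frozenset


def has_conflict(account: Account) -> bool:
    """An account conflicts if it mixes administrator and user privileges."""
    return bool(account.privileges & ADMIN_PRIVILEGES) and bool(
        account.privileges & USER_PRIVILEGES
    )


def conflicted_accounts(accounts):
    """Return the login IDs of accounts that break segregation of duties."""
    return [a.login_id for a in accounts if has_conflict(a)]
```

A check like this only covers the configuration. It is still the audit trail that shows which identity was used for which activity.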

The format and use of passwords must be controlled. This subject was discussed at length in a previous “Focus on Quality” column (13); don’t use complex passwords that are difficult to remember, but instead string easy-to-remember words together. Doing so will save those groveling calls to the help desk saying you have forgotten your password and locked your account.
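
As a minimal illustration of stringing words together, the Python sketch below builds a passphrase from randomly chosen words using the standard library's `secrets` module. The word list, word count, and separator are my assumptions for the example; a real word list would hold thousands of entries.

```python
import secrets

# Tiny illustrative word list; a real one would hold thousands of words.
WORDS = ["copper", "window", "sparrow", "lantern", "meadow", "pebble", "harbor", "violet"]


def make_passphrase(word_count: int = 4, separator: str = "-") -> str:
    """Join randomly chosen words using a cryptographically secure source."""
    return separator.join(secrets.choice(WORDS) for _ in range(word_count))
```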

In expectation 1, it is noted that a system should generate a list of successful and unsuccessful login attempts, with dates and times plus a session length if a user has successfully logged in. For a document that is purportedly non-mandatory and not legally enforceable, the latter expectation is going too far: you can work out the session length from the log-on and log-off times.
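
To show how little is needed to derive a session length, here is a short Python sketch that pairs each successful login with the same user's next log-off. The log layout and event names are hypothetical; real systems record these events in their own formats.

```python
from datetime import datetime

# Hypothetical log entries: (user, event, ISO 8601 timestamp)
LOG = [
    ("asmith", "login_failed", "2021-07-01T08:58:10"),
    ("asmith", "login", "2021-07-01T08:58:25"),
    ("asmith", "logoff", "2021-07-01T10:13:25"),
]


def session_lengths(entries):
    """Pair each login with the same user's next logoff and return the durations."""
    open_sessions = {}
    sessions = []
    for user, event, stamp in entries:
        when = datetime.fromisoformat(stamp)
        if event == "login":
            open_sessions[user] = when
        elif event == "logoff" and user in open_sessions:
            sessions.append((user, when - open_sessions.pop(user)))
    return sessions
```

With the sample log, the failed attempt is ignored and the single session for `asmith` lasts one hour and fifteen minutes.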

There are many security expectations reading as a list of system requirements that most users won’t have read, or even bothered with, during selection of an instrument and its data system. For example, can a system generate a list of users with user identification and their role? Most networked systems can generate a similar list, but not many standalone systems can, as this does not feature on the horizon of supplier requirements. If you use spreadsheets, forget it!

Network protection, including antivirus software, intrusion detection, and firewalls, is covered in table 9.5.3; as noted earlier, operating system security patching was mentioned in table 9.3.6 (1). Personally, I think there should have been a separate section on cybersecurity that merges both these elements instead of separating them.

Table 9.5.3 discusses electronic signatures and notes that signatures must be permanently linked to their respective record, along with the date and time of signing (1). Again, in contrast to clause 3.7, this is a legal requirement lifted directly from clause 14 of Annex 11 (3). An extension to the requirements of Annex 11 is the expectation to be able to revoke an electronic signature, but show on the record that it is now unsigned. Finally, there is encouragement to use biometric and digital signatures, but I doubt this will be implemented on small laboratory systems until the market requires it and the technology comes down in price. The placing of this discussion under security is strange. I would have positioned it as a way of improving data integrity and process efficiency by eliminating paper in Section 9.8, as many systems are still hybrid. Hybrid systems will be discussed later. Another spoiler alert! You won’t like the comments.

There is a specific section on the control of USB devices, either as sticks or thumb drives, but also cameras, smartphones, and other similar equipment (1). This section is to ensure that malware is not introduced into an organization, and devices are not used to copy and manipulate data. See the discussion on the use of USB sticks in an earlier “Focus on Quality” column this year (14).

Audit Trails Are Critical for Data Integrity

In contrast with the security section, the one for audit trails is shorter, which is surprising given how critical an audit trail is for ensuring traceability of actions and data integrity. The first expectation is that a laboratory purchases a system with an audit trail. However, there is an alternative option available in EU GMP Annex 11 clause 12.4 that management systems for data and for documents should be designed to record the identity of operators entering, changing, confirming, or deleting data, including date and time (3). Therefore, if an application can meet 12.4 requirements, then an audit trail is not required.

The guidance reiterates that proper system selection should ensure that software has an adequate audit trail, and that users must verify its functionality during validation (1). Some applications may have more than one audit trail, and it is important to know which ones are important for monitoring changes to data. A good point is that the guidance suggests looking at entries for changes to data with a focus on anomalous or unauthorized activities to allow review by exception. With software having more than one audit trail, the review of any noncritical audit trails can be performed in periodic reviews at longer time intervals.

The guidance reiterates the issue with proper selection of software, as systems that prevent users from changing parameters or permission settings can reduce the need for detailed review of all audit trail entries. This allows a laboratory to implement an effective review by exception approach—but only where there are effective controls that have been implemented, documented, and validated.
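
Review by exception can be pictured as a filter over audit trail entries that surfaces only those needing a reviewer's attention. The Python sketch below is a simplified illustration; the entry fields, the action names, and the rule for what counts as an exception are my assumptions, and a real system's audit trail will differ.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AuditEntry:
    user: str
    action: str      # e.g. "create", "modify", "delete", "view"
    record_id: str
    reason: str      # reason for change entered by the user; may be empty


# Assumption: these are the actions a reviewer always wants to see.
ACTIONS_OF_INTEREST = {"modify", "delete"}


def exceptions_for_review(entries, authorized_users):
    """Return entries that change data, or that come from an unexpected user."""
    flagged = []
    for entry in entries:
        changes_data = entry.action in ACTIONS_OF_INTEREST
        unexpected_user = entry.user not in authorized_users
        if changes_data or unexpected_user:
            flagged.append(entry)
    return flagged
```

A filter like this is only trustworthy if the underlying technical controls stop users from altering settings or bypassing the audit trail, which is why the validated controls come first.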

Know and Manage Data Vulnerabilities

The guidance is very clear, along with the WHO and MHRA guidances (10,11), that laboratories must know the criticality of data generated by any computerized system, as well as any vulnerabilities (1). Data process mapping (8) is suggested as a way of identifying vulnerabilities such as shared user identities, or data files stored in directories that can be deleted outside of the application with no audit trail entry. The regulatory expectation is that these vulnerabilities should be fixed using technical controls, although the guidance acknowledges that legacy systems may require procedural controls to ensure data integrity before these are updated or replaced. Clause 9.2.2 makes the point that a system may be qualified, calibrated, and validated, but there is no guarantee that the data contained within it are adequately protected (1), hence the need to identify and mitigate any data vulnerabilities.

Don’t Touch the System – It’s Validated!

The traditional approach to validation of a computerized system in a regulated laboratory is that, once validated, no changes to the system are made. In contrast, in the security section the PIC/S guidance takes a more pragmatic approach on page 37, and suggests that computerized systems should be updated in a timely manner, including security patches and new application versions (1). This is good in principle, but there needs to be a different mindset within industry to commit to and follow this in practice. For example, it is easier to update an application incrementally with minor re-validation rather than wait until the application goes out of support, and panic as a full validation and possible data migration project may be required. However, the detail provided here appears to be more of an inspector’s checklist with little flexibility.

Operating a Computerized System

Sections 9.7 to 9.9 cover the operational use of a computerized system from data entry, through data review, data storage, and finally destruction of the e-records (1). Yet again, there are regulatory expectations listed that are regulatory requirements, such as:

- 9.7.1 Critical data entered manually should be verified by a second person or a validated process (clause 6 of Annex 11) (3)

- 9.7.2 Changes to data need to be authorized and controlled. I’m not certain that “authorized” is the correct word, as the regulations merely use the word “reviewed.” However, these are regulations in Chapter 4.9 (4), Chapter 6.17 vii (15), and Annex 11.9 (3).

Some other nuggets of gold are:

- 9.8.1 requires a risk assessment to identify all GMP critical data (1). Whoa! We performed an analysis, but, before we review the data, we need to identify critical data. We are in the process of reviewing data, and we need a risk assessment? This should have been undertaken during evaluation and validation!

- There is the requirement to write an SOP to review audit trails (1). This is wrong. Look around your laboratory; how many different systems are there? There are basic regulatory requirements for audit trails, but there is no standard implementation. You won’t have one SOP for audit trail review; you will have as many review procedures as you have computerized systems (assuming that each one has an audit trail). A far better approach would be to have a single SOP for second person review that calls the appropriate procedure or work instruction for audit trail review, as shown in Figure 2. This is an easier way to review audit trails than having a generic SOP that will be useless, as it will not have enough detail for each system’s audit trail review.

- An expectation is the need for quality oversight of audit trail review that should be incorporated into the Chapter 9 self-inspections (16) in an organization.

- Back to regulations disguised as expectations; most of the items in section 9.9 can be derived from clause 7 of Annex 11 (3):

  - Backup must include all data and associated metadata, and be capable of recovery

  - Backups should be readable

  - In the absence of alternatives, backup using USB devices must be carefully controlled. Backup must be performed automatically and must not use either drag and drop or copy and paste. The backup must not allow data deletion (1), which implies that it should be performed by an independent person.
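
One simple check that a backup includes all data and associated metadata is to compare the set of files in the data directory against the set in the backup. The Python sketch below does only that; the directory layout and function names are hypothetical, and it does not replace a restore test to show that the backup is readable.

```python
from pathlib import Path


def relative_files(root: Path) -> set:
    """All files under root, as paths relative to root."""
    return {p.relative_to(root) for p in root.rglob("*") if p.is_file()}


def missing_from_backup(data_dir: Path, backup_dir: Path) -> list:
    """Files present in the data directory but absent from the backup."""
    return sorted(relative_files(data_dir) - relative_files(backup_dir))
```

An empty list means every file name is present in the backup. Whether the content is identical and recoverable still has to be demonstrated separately.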

There is an assumption that data will be archived offline; however, networked systems can archive data on-line by making the data read only, and only accessible by designated users.

FIGURE 2: Procedure for second person review with linked specific work instructions for individual system audit trail review.

Managing Hybrid Systems

For those that use hybrid systems, Section 9.10 does not make for comfortable reading; such systems are not encouraged, and should be replaced as soon as possible (1). They also require additional controls, due to the complexity of the record set and increased ability to manipulate data. This is essentially the same as the stance taken by the WHO guidance (10).

A potentially onerous requirement is a detailed system description of the entire system that outlines all major components, how they interact, the function of each one, and the controls to ensure the integrity of data. How many hybrid systems do you have in your laboratory, including spreadsheets?

To read further about hybrid systems, and why they need to be replaced, see the following references (17–19).

Outsourced Activities

When GMP work is outsourced, such as API production, contract manufacture, or contract analysis, the same principles of data integrity are expected. After an adequate assessment of the facility, technical ability, and approaches to data integrity, requirements for DI and escalation of data integrity issues to the contract giver need to be included in agreements between the two parties, as required by Chapter 7 (20). Limitations of summary reports were discussed in Part 1 of this review, as well as ways of reviewing analytical data remotely by video conference (2).

Regulatory Actions for DI Findings

Section 11 includes a mapping of the various PIC/S regulations and guidance documents to the ALCOA+ criteria. Of most use is a classification of data integrity deficiencies in four categories (1):

- Critical: Impact to product with actual or potential risk to patient health

- Major: Impact to product with no risk to patient health

- Major: No impact to product, evidence of moderate failure

- Other: No impact to product, limited evidence of failure

Examples are given for each of the above deficiency categories to help during inspections. It may be prudent to adopt the same approach for classifying DI findings in internal audits or supplier assessments.
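
If you do adopt the same scheme for internal audits, the four categories reduce to a short decision rule. The Python sketch below encodes the classification exactly as listed above; the function name, parameter names, and the "moderate"/"limited" labels are my own shorthand rather than terms defined by PI-041.

```python
def classify_deficiency(impacts_product: bool, patient_risk: bool, evidence: str) -> str:
    """Map a finding to one of the four categories listed above.

    evidence is "moderate" or "limited" and only matters when there is
    no impact to product.
    """
    if impacts_product:
        return "Critical" if patient_risk else "Major"
    if evidence == "moderate":
        return "Major"
    if evidence == "limited":
        return "Other"
    raise ValueError(f"unknown evidence level: {evidence!r}")
```

For example, a finding with no product impact but moderate evidence of failure is still classified as Major, which is the point most easily missed when reading the list.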

Remediation of DI Failures

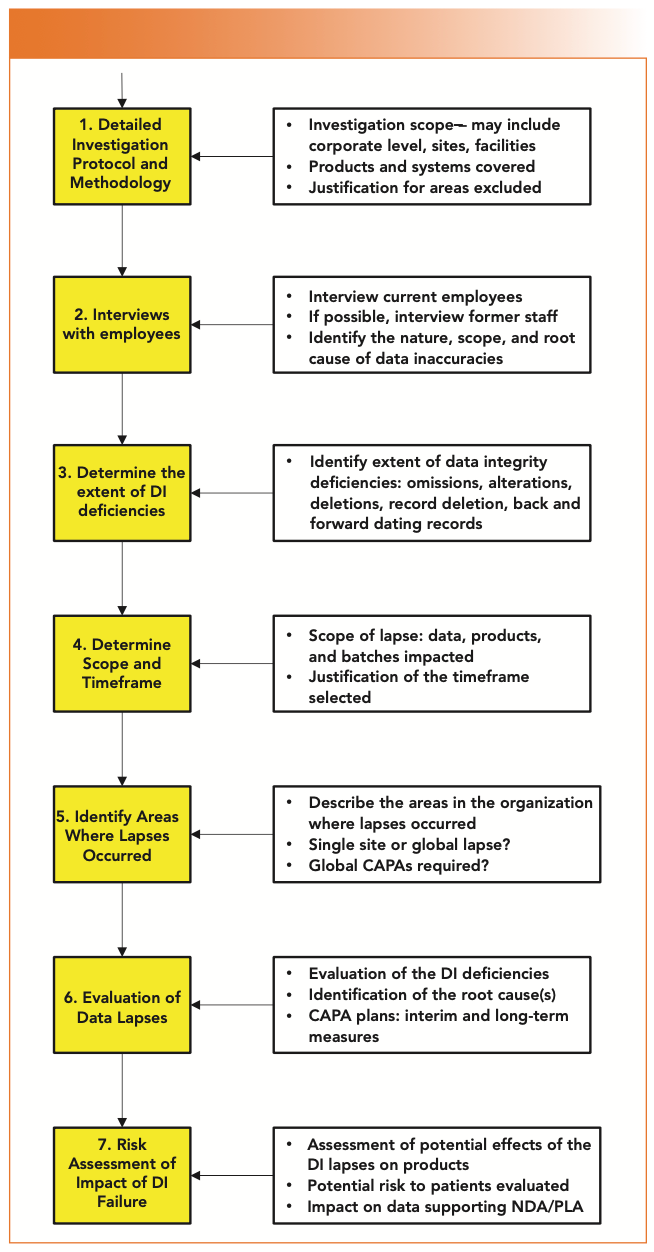

The last section is guidance on how an organization should investigate data integrity failures. The process is shown in Figure 3, which is taken from Section 12.1.1.1 in PI-041 (1). What the guidance does not state is that a data integrity investigation is a very detailed and time-consuming process. From personal experience, it is unlikely that any root cause will be determined at stage 2 of the investigation (interviewing employees). Root cause determination comes at stage 6 of Figure 3, when the investigation team has a complete picture of the extent and depth of the data integrity problems.

FIGURE 3: Process for investigation of data integrity failures (PIC/S PI-041).

In addition to identification of data integrity problems, a data integrity investigation will also identify poor data management practices. Don’t ignore them; they need to be identified, classified, and remediated in parallel, as they could be the source of the next data integrity investigation. These will also be included in the data investigation report.

PI-041 makes the assumption that all data integrity problems are caused by evil people. This is not so. I have been involved with one investigation that was caused by a software error (18).

The only other data integrity guidance that goes into detail about investigations is the FDA data integrity guidance in Question 18 (12) that calls out the agency’s Application Integrity Policy (21), which is broadly similar to that shown in Figure 3.

Some of the outcomes of an investigation could be:

- Data in a product license submission are falsified

- Data manipulation resulting in a risk to patient health, necessitating a batch recall

Is there a material impact that requires the company to inform one or more regulatory authorities? The classic data integrity case is that of AveXis, a subsidiary of Novartis, and data integrity lapses found during the development of the biological Zolgensma for treating spinal muscular atrophy. The company was granted a product license for the product, and then told the FDA there were data integrity problems. Understandably, the FDA were not happy campers, and in July 2019 went on site and subsequently issued a 483 (22). The issue is that a company needs to have a good understanding of all of the data integrity issues, and have at least a draft report available before approaching a regulatory authority to discuss the lapses.

Section 12 ends with a discussion on the indicators of improvement following an onsite inspection to verify that the CAPA actions have been effective. Indicators of improvement are listed as (1):

- A thorough and open investigation of the issue, and how to implement effective CAPA actions

- Having open communications with clients and other regulators

- Evidence of open communication of the DI expectations, and processes for open reporting of issues and opportunities for improvement across the organization

- Resolution of the DI violations, and remediation of computerized system vulnerabilities

- Implementation of data integrity policies with effective training

- Implementation of routine data verification practices

The best advice I can offer is to ensure that DI principles are infused throughout the organization, the PQS, and computerized systems, and that your staff are trained in the DI policies. The effort and cost of remediation, along with the loss of reputation with regulatory authorities, far outweigh any money saved by cutting corners. The cost of noncompliance is always much higher than the cost of compliance (23).

Summary

This two-part column has focused on the section of the new PIC/S PI-041 guidance on Good Practices for Data Management and Integrity in Regulated GMP/GDP Environments dealing with computerized systems. It is a good guidance, with a wide scope and a lot of detail, but it had the potential to be much better. The reason is that it veers in places from guidance with room for interpretation to a regulatory checklist, especially for computerized systems. Some of the requirements, such as to be up to date with patching, won’t meet with reality, as there are many laboratory systems using obsolete operating systems that cannot be patched. As a result, some requirements cannot be implemented.

References

(1) PIC/S PI-041 Good Practices for Data Management and Integrity in Regulated GMP/GDP Environments (Pharmaceutical Inspection Convention/Pharmaceutical Inspection Cooperation Scheme, Geneva, Switzerland, 2021).

(2) R.D. McDowall, Spectroscopy 36(11), 16–21 (2021).

(3) EudraLex, Volume 4 Good Manufacturing Practice (GMP) Guidelines, Annex 11: Computerised Systems (European Commission, Brussels, Belgium, 2011).

(4) EudraLex, Volume 4 Good Manufacturing Practice (GMP) Guidelines, Chapter 4: Documentation (European Commission, Brussels, Belgium, 2011).

(5) R.D. McDowall, LCGC Europe 34(8), 326–330 (2021).

(6) P.A. Smith and R.D. McDowall, Spectroscopy 34(9), 22–28 (2019).

(7) R.D. McDowall, LCGC Europe 30(1), 93–96 (2016).

(8) R.D. McDowall, LCGC N. Am. 37(2), 118–123 (2019).

(9) EudraLex, Volume 4 Good Manufacturing Practice (GMP) Guidelines, Annex 15: Qualification and Validation (European Commission, Brussels, Belgium, 2011).

(10) WHO Technical Report Series No. 996 Annex 5: Guidance on Good Data and Records Management Practices (World Health Organisation, Geneva, Switzerland, 2016).

(11) MHRA GXP Data Integrity Guidance and Definitions (Medicines and Healthcare Products Regulatory Agency, London, United Kingdom, 2018).

(12) FDA Guidance for Industry Data Integrity and Compliance With Drug CGMP Questions and Answers (FDA, Silver Spring, MD, 2018).

(13) R.D. McDowall, Spectroscopy 33(11), 20–23 (2018).

(14) R.D. McDowall, Spectroscopy 36(4), 14–17 (2021).

(15) EudraLex, Volume 4 Good Manufacturing Practice (GMP) Guidelines, Chapter 6: Quality Control (European Commission, Brussels, Belgium, 2014).

(16) PIC/S PI-023-2 Aide-Memoire Inspection of Pharmaceutical Quality Control Laboratories (Pharmaceutical Inspection Convention/Pharmaceutical Inspection Co-Operation Scheme, Geneva, Switzerland, 2007).

(17) GAMP Guide Records and Data Integrity (International Society for Pharmaceutical Engineering, Tampa, FL, 2017).

(18) R.D. McDowall, Data Integrity and Data Governance: Practical Implementation in Regulated Laboratories (Royal Society of Chemistry, Cambridge, United Kingdom, 2019).

(19) R.D. McDowall, LCGC N. Am. 37(3), 180–184 (2019).

(20) EudraLex, Volume 4 Good Manufacturing Practice (GMP) Guidelines, Chapter 7: Outsourced Activities (European Commission, Brussels, Belgium, 2013).

(21) FDA Application Integrity Policy: Fraud, Untrue Statements of Material Facts, Bribery, and Illegal Gratuities (Compliance Policy Guide Section 120.100) (FDA, Rockville, MD, 1991).

(22) FDA 483 Observation AveXis Inc. (FDA, Silver Spring, MD, 2019).

(23) R.D. McDowall, Spectroscopy 35(11), 13–22 (2020).

R.D. McDowall is the director of R.D. McDowall Limited and the editor of the “Questions of Quality” column for LCGC Europe, Spectroscopy’s sister magazine. Direct correspondence to: SpectroscopyEdit@MMHGroup.com
