Logger Rhythm

A logarithm is a mathematical function that shows up in some forms of spectroscopy. Understanding what it is can give us a better appreciation for its function in our fields.


Oh, gee, another column on a math topic? Weren't the seven installments on Maxwell's equations, full of vector calculus, enough? Well, there are math topics other than calculus that impact spectroscopy; this installment discusses one of them. But there is a calculus connection . . .

Beer's law (more formally known as the Bouguer-Lambert-Beer law, for those who remember an installment from long ago [1]) is a simple mathematical relationship used in spectroscopy. Its mathematical form is

$$A = \log\frac{I_0}{I}$$

where A is the absorbance of the sample, $I_0$ is the initial intensity of light at a certain wavelength, and I is the intensity of the same light after it has passed through the sample.
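As a quick numerical illustration of this definition, here is a minimal Python sketch. The function name `absorbance` and the sample intensities are hypothetical, and "log" is assumed to be the base-10 logarithm, as it is later in this column.

```python
import math

def absorbance(i0: float, i: float) -> float:
    """Return A = log10(I0 / I).

    i0: initial (incident) intensity; i: intensity after passing through the sample.
    Illustrative helper only, not taken from any spectroscopy library.
    """
    if i0 <= 0 or i <= 0:
        raise ValueError("intensities must be positive")
    return math.log10(i0 / i)

# Hypothetical readings: each tenfold drop in transmitted intensity adds 1 to A
for transmitted in (100.0, 10.0, 1.0):
    print(f"I0 = 100, I = {transmitted:>5}: A = {absorbance(100.0, transmitted):.3f}")
```

Because of the logarithm, absorbance grows by one unit for every factor of 10 by which the transmitted intensity falls.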

What is "log"? Log represents something called a logarithm, which is a special sort of mathematical function that shows up in some forms of spectroscopy and many other aspects of science. Understanding what it is can give us a better appreciation for its function in our fields.

The Logarithm

The four basic operations of arithmetic are addition, subtraction, multiplication, and division. Of these four operations, most people find addition and subtraction easier than multiplication and division — especially in the times before calculators.

John Napier (1550–1617; the spelling of his last name has several variants) was a Scottish physicist and mathematician who has several claims to fame, including the popularization of the decimal point in a fashion still used today. But his biggest claim to fame was the 1614 publication of a book titled Mirifici Logarithmorum Canonis Descriptio, in which he introduced a new invention: logarithms. A companion volume explaining how his tables were computed, Mirifici Logarithmorum Canonis Constructio, appeared in 1619, two years after his death, and is still available as an English translation (2). Napier at first called his new quantities "artificial numbers," but he later coined the word "logarithm" as a combination of the Greek words λόγος (logos), meaning "proportion," and ἀριθμός (arithmos), meaning "number." Napier's definition of a logarithm is insightful (2): "The logarithm of a given sine is that number which has increased arithmetically with the same velocity throughout as that with which radius began to decrease geometrically, and in the same time as radius has decreased to the given sine."

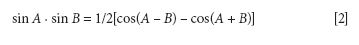

Napier was originally concerned with applying his invention to trigonometry; hence his references to "sine" and "radius." It is possible, however, that he was inspired by a very useful equation that many of us learn in trigonometry:

$$\sin\alpha\,\sin\beta = \frac{1}{2}\left[\cos(\alpha - \beta) - \cos(\alpha + \beta)\right]$$

This equation, one of the so-called prosthaphæretic rules, turns multiplying two sine functions into the subtraction of two cosine functions — and as already stated, addition and subtraction are far easier than multiplication and division! (The word "prosthaphæretic" derives from the Greek words for addition and subtraction. People who wear artificial limbs called prosthetics and people who donate blood platelets through a process called apheresis are already familiar with the Greek terms.) It is conjectured that knowing this rule inspired Napier to take things a bit further (3).
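To see how this rule trades a multiplication for additions and subtractions, here is a small Python sketch. It assumes both factors lie between −1 and 1 so that each can be written as the sine of some angle; `math.asin` merely stands in for looking an angle up in a sine table, and the function name is hypothetical.

```python
import math

def multiply_by_prosthaphaeresis(a: float, b: float) -> float:
    """Multiply a and b (each in [-1, 1]) using
    sin(x) sin(y) = [cos(x - y) - cos(x + y)] / 2.
    Hypothetical helper for illustration.
    """
    if not (-1.0 <= a <= 1.0 and -1.0 <= b <= 1.0):
        raise ValueError("factors must lie in [-1, 1]")
    x = math.asin(a)  # angle whose sine is a (stands in for a sine-table lookup)
    y = math.asin(b)  # angle whose sine is b
    # Only a subtraction and an addition of angles, a subtraction of cosines, and a halving
    return (math.cos(x - y) - math.cos(x + y)) / 2

print(multiply_by_prosthaphaeresis(0.6, 0.75), 0.6 * 0.75)
```

Apart from floating-point rounding, the two printed numbers agree.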

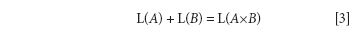

What does the Napier quotation mean? Basically, it pairs a geometric progression (in which each step multiplies by a constant ratio) with an arithmetic progression (in which each step adds a constant amount), so that instead of multiplying and dividing, you can add and subtract. Logarithms are constructed so that adding the logarithms of two numbers gives the logarithm of their product. Using the letter "L" to represent a logarithm, for any two positive numbers A and B this means that

$$L(A \times B) = L(A) + L(B)$$

Thus, multiplication (and its inverse, division) becomes addition (or its inverse, subtraction). Because addition is typically easier than multiplication, Napier simplified multiplication by essentially converting it into addition.
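This rule does not depend on which base the logarithm uses. A minimal Python check (the helper name and the test numbers are arbitrary choices for illustration) confirms that multiplication becomes addition and division becomes subtraction in several bases:

```python
import math

def log_rules_hold(a: float, b: float, base: float) -> bool:
    """Check L(a*b) = L(a) + L(b) and L(a/b) = L(a) - L(b) for positive a, b.
    Hypothetical helper for illustration."""
    la = math.log(a, base)
    lb = math.log(b, base)
    product_ok = math.isclose(math.log(a * b, base), la + lb)   # multiplication -> addition
    quotient_ok = math.isclose(math.log(a / b, base), la - lb)  # division -> subtraction
    return product_ok and quotient_ok

for base in (2, 10, math.e):
    print(f"base {base:.4g}: {log_rules_hold(7.0, 12.5, base)}")
```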

Napier's original "invention" of the logarithm, based on parts per 10,000,000, is rather distant from our current definition. His method is complicated (see reference 2 if you want the details), but it is clear that he effectively defined negative logarithms, as he based his original number table on decreasing, rather than increasing, numbers. Thus, the so-called "Naperian logarithm" is remembered more for its historical interest than for its practical use. But as a mathematical concept, the idea was sound and was readily adopted by mathematicians and scientists. Just a year after publication of Napier's 1614 book, English mathematician Henry Briggs consulted with Napier and proposed that the definition of the logarithm be reversed and based on 10 rather than on parts per 10,000,000. In 1624, Briggs published Arithmetica Logarithmica, which contained 30,000 logarithms of numbers up to 100,000.

In modern notation, the logarithm is related to the expression of a number as a power of some other number, called a base. For example, the number 4 can be represented as the base 2 raised to the power of 2, while 8 can be expressed as the base 2 raised to the power of 3:

$$4 = 2^2 \qquad 8 = 2^3$$

Multiplying 4 and 8 is the same as multiplying $2^2$ and $2^3$:

$$4 \times 8 = 2^2 \times 2^3$$

The algebra of exponents states that $2^2$ times $2^3$ is equal to $2^{2+3}$:

$$2^2 \times 2^3 = 2^{2+3} = 2^5 = 32 = 4 \times 8$$

So instead of multiplying 4 and 8, we add 2 and 3 — again, addition is much easier than multiplication. In logarithmic terms, we would write this as

log 4 + log 8 = log 32

where "log" stands for "logarithm." That is, where you would multiply numbers, you would add their logarithms. This example is trivial, as most people can multiply 4 and 8 without resorting to exponential notation and logarithms. But when the numbers are not so straightforward, the multiplication is not so easy, and converting the multiplication to an addition is a significant savings in brain power. (In fact, Pierre-Simon Laplace is said to have claimed that the invention of logarithms, "by shortening the labors, doubled the life of the astronomer" [4].) In 1622, English mathematician William Oughtred invented the slide rule to assist with such calculations, a tool that remained in use for more than three centuries until the electronic calculator replaced it.
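The workflow described here (look up two logarithms, add them, then convert back) can be sketched in Python. As an assumption for illustration, the rounding to five decimal places imitates reading a printed five-place table; the function name and the two factors are hypothetical.

```python
import math

def multiply_with_log_table(a: float, b: float, places: int = 5) -> float:
    """Multiply positive a and b by adding their base-10 logarithms.

    Each logarithm is rounded to `places` decimals to imitate a printed table
    (hypothetical helper); the antilogarithm 10**sum then recovers the product.
    """
    log_sum = round(math.log10(a), places) + round(math.log10(b), places)
    return 10 ** log_sum

a, b = 372.9, 5.184  # arbitrary "not so straightforward" factors
print(f"via logarithms: {multiply_with_log_table(a, b):.3f}")
print(f"direct product: {a * b:.3f}")
```

The two results agree to about four or five significant figures; the small discrepancy comes only from rounding the logarithms to five places.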

As we mentioned earlier, using 10 as a base for logarithms made good practical sense, as humanity had developed a decimal, place-value numbering system based on 10 unique digits. The definition of the base-10, or common, logarithm is now well-known:

$$\text{If } y = 10^x, \text{ then } \log_{10} y = x$$

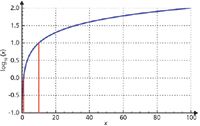

The logarithm, then, is a function, just as sine and cosine are functions (although "operation" may be a better term than "function"). A plot of the logarithm function is shown in Figure 1. Note that, to maintain continuity, logarithms of numbers between 1 and 10 fall between 0 and 1, the logarithm of 1 is 0, and logarithms of numbers between 0 and 1 are negative. The logarithm of 0 is undefined, and logarithms of negative numbers are not real, although they can be expressed as complex numbers (5). Figure 1 also demonstrates a convention when expressing logarithms: the base is listed explicitly as a right subscript on the "log" label. Logarithms based on 10 follow a simple progression:

$$\begin{aligned}
\log_{10}(1) &= 0\\
\log_{10}(10) &= 1\\
\log_{10}(100) &= 2\\
\log_{10}(1000) &= 3
\end{aligned}$$

and so on.

Figure 1: A plot of $\log_{10}(x)$ vs. x (blue line). The two red lines indicate certain benchmarks for the log function: where it equals zero (at x = 1) and where it equals 1 (at x = 10).

Logarithms of numbers that fall between powers of 10 are not whole numbers; for example, $\log_{10}(500) = 2.69897\ldots$, and so forth.

The bases of logarithms can, however, vary. For example, we can use 2 as the base for logarithms. When that is the case, $\log_2(1) = 0$ and $\log_2(10) = 3.32192\ldots$. Want to use π as a base for logarithms? Then $\log_\pi(5) = 1.40595\ldots$ and $\log_\pi(10) = 2.011465\ldots$, while $\log_\pi(2013) = 6.64557\ldots$. Any positive number other than 1 can be used as a base, which brings us to our next point.
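Values like these are easy to check. Most calculators and programming languages supply only natural and base-10 logarithms, but the standard change-of-base identity, $\log_b x = \ln x / \ln b$, gives the logarithm in any other base. A minimal Python sketch (the helper name is hypothetical):

```python
import math

def log_base(x: float, base: float) -> float:
    """log_b(x) = ln(x) / ln(b); requires x > 0 and a positive base other than 1.
    Hypothetical helper for illustration."""
    if x <= 0 or base <= 0 or base == 1:
        raise ValueError("need x > 0 and a positive base other than 1")
    return math.log(x) / math.log(base)

cases = [(10, 500), (2, 1), (2, 10), (math.pi, 5), (math.pi, 10), (math.pi, 2013)]
for base, x in cases:
    print(f"log base {base:.5g} of {x}: {log_base(x, base):.5f}")
```

The printout rounds to five decimal places, so its last digit can differ slightly from the truncated values quoted above.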

The Natural Logarithm

Imagine that you're a banker and that you pay a certain percentage interest on a savings account. How do you calculate the amount of interest over a given time period? Essentially, you multiply the balance by the interest rate for that period, expressed as a decimal value, and add the result to the balance. For an initial balance B and a decimal rate R, the new amount N after the given time period is

N = B(1 + R)

For example, if your initial amount is $100 and your annual interest rate is 4% (0.04 in decimal terms), your new amount after one year's time is

N = $100(1 + 0.04) = $104

If the account goes for a second year, the amount at the end of the second year is

N = $104(1 + 0.04) = $108.16

After a third year, the amount is

N = $108.16(1 + 0.04) = $112.49

and so forth. In general, the amount after n years is

$$N = B(1 + R)^n$$

If the interest is calculated once per time period, it is easy to determine your new amount. However, many banks advertise a certain annual rate but calculate the interest more than once a year. If the bank calculates interest t times per year, then the actual decimal rate per calculation period is R/t. The new balance at the end of the year is now

$$N = B\left(1 + \frac{R}{t}\right)^{t}$$

and, following from the previous equation, over n years the new amount N is

$$N = B\left(1 + \frac{R}{t}\right)^{tn}$$

Table I shows the new amounts for calculating interest once a year, once a month, once a week, and once a day. As you can see, there is initially a benefit to applying a smaller interest rate more times (you get 74 more cents for monthly interest calculations than for a single annual one!), but the benefit tops out quickly. This suggests that a formula like this has a finite limit (and if there weren't one, banks might go out of business!).

Table I: New amounts on a $1000 initial balance for various divisions of a 4% annual interest rate after one year

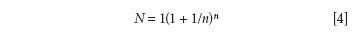
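The entries in Table I follow from the formula $N = B(1 + R/t)^{tn}$. A short Python sketch reproduces that calculation; the function name is hypothetical, and it assumes 12 months, 52 weeks, and 365 days per year (ignoring leap years).

```python
def compound_amount(balance: float, annual_rate: float,
                    periods_per_year: int, years: float = 1.0) -> float:
    """N = B (1 + R/t)^(t n): balance B, decimal annual rate R, t periods per year, n years.
    Hypothetical helper for illustration."""
    rate_per_period = annual_rate / periods_per_year
    return balance * (1 + rate_per_period) ** (periods_per_year * years)

# $1000 at a 4% annual rate for one year, compounded at different frequencies
schedules = [("annually", 1), ("monthly", 12), ("weekly", 52), ("daily", 365)]
for label, t in schedules:
    print(f"{label:>9}: ${compound_amount(1000.0, 0.04, t):,.2f}")
```

Trying larger values of t, such as 8760 for hourly compounding, changes the one-year balance by well under a cent compared with daily compounding, which is the leveling-off described above.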

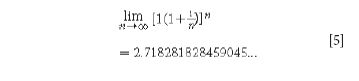

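Suppose we normalize this: set B = 1 and R = 1, consider a single year, and let the number of times the interest is calculated, which we will now call n, get larger and larger. This corresponds to a smaller "interest rate" per period applied a larger number of times. What we get is the expression

$$N = \left(1 + \frac{1}{n}\right)^n$$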

What happens as n gets larger and larger? There is a temptation to suggest that N goes to infinity as n goes to infinity, because the exponent grows without bound. But at the same time, the quantity in parentheses approaches 1 + 0, and 1 raised to any power is just 1. Perhaps there is a happy medium between 1 and infinity? It turns out that there is. Go ahead and enter this expression into a spreadsheet or other calculator and evaluate it for larger and larger values of n; the answer converges on a number:

$$\lim_{n \to \infty}\left(1 + \frac{1}{n}\right)^n = 2.71828\ldots$$

As it turns out, this numerical value has vast implications for the natural world. It is given the symbol e, so labeled by the great mathematician Leonhard Euler in 1731; as such, it is sometimes called Euler's number.
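Taking up the suggestion to evaluate the expression numerically, here is a Python version of that spreadsheet exercise (the function name is hypothetical). It prints $(1 + 1/n)^n$ for increasing n alongside the library constant `math.e`:

```python
import math

def compound_expression(n: int) -> float:
    """(1 + 1/n)**n, the normalized compound-interest expression (hypothetical helper)."""
    return (1 + 1 / n) ** n

for n in (1, 10, 100, 1_000, 10_000, 100_000, 1_000_000):
    value = compound_expression(n)
    print(f"n = {n:>9,}: {value:.8f}   (e - value = {math.e - value:.2e})")
```

The gap shrinks roughly in proportion to 1/n. In double-precision arithmetic, pushing n far beyond about 10^8 eventually makes the result worse rather than better, because the rounding error in 1 + 1/n is magnified by the large exponent.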

Going back to the previous section about logarithms: if any number can be used as a base (like π), then why not e? Logarithms that use e as their base are called natural logarithms.

Why "Natural"?

Why "natural"? Surely, 10 is more natural than 2.71828 . . . . Well, not really. Ten as a base is likely more anthropocentric than anything else: humans have 10 fingers and 10 toes, and one of those sets (the fingers) is commonly used for counting. If three-toed sloths had developed logarithms, they would probably argue that 3 is a "natural" base for logarithms. The reality is that the base e and the logarithm involving it show up in various mathematical applications, well, naturally.

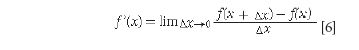

It is easiest to demonstrate the naturality of e as a base by doing some simple calculus. Recall the fundamental definition of a derivative of a function, labeled f'(x):

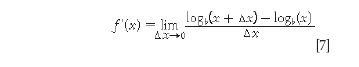

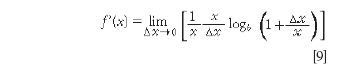

If we substitute $f(x) = \log_b x$ for our function (where b is the base), we have

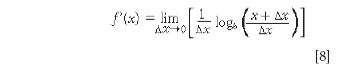

We can factor out the 1/Δx and rewrite the subtraction of two logarithms as the logarithm of a fraction:

$$f'(x) = \lim_{\Delta x \to 0} \frac{1}{\Delta x}\,\log_b\!\left(\frac{x + \Delta x}{x}\right)$$

We now multiply the 1/Δx by x/x (which is simply 1) and rewrite the fraction inside the logarithm. We get the following expression:

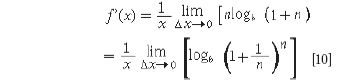

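The 1/x term can be factored out of the limit. If we define n = x/Δx (for positive x, n grows without bound as Δx approaches 0) and use the rule $n \log_b y = \log_b y^n$ to move n into the exponent, we can write the expression as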

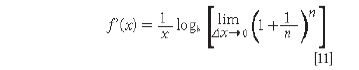

Because the logarithm is a continuous function, the limit and the log can be switched, yielding a derivative that contains an expression that should be familiar:

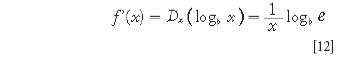

The expression inside the square brackets is, of course, e (see equation 5). Thus, we have

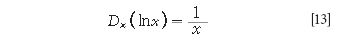
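This result can be spot-checked numerically by evaluating the difference quotient from the definition of the derivative with a small but finite Δx and comparing it with $(1/x)\log_b e$. The helper names, the point x = 4, and the step size are illustrative choices in this Python sketch:

```python
import math

def log_b(x: float, base: float) -> float:
    """Logarithm of x in the given base (hypothetical helper)."""
    return math.log(x) / math.log(base)

def difference_quotient(x: float, base: float, dx: float = 1e-6) -> float:
    """[log_b(x + dx) - log_b(x)] / dx, the expression inside the derivative's limit."""
    return (log_b(x + dx, base) - log_b(x, base)) / dx

def predicted_derivative(x: float, base: float) -> float:
    """(1/x) log_b(e), the result of the derivation."""
    return log_b(math.e, base) / x

x = 4.0  # arbitrary positive test point
for base in (2.0, 10.0, math.e):
    print(f"base {base:.4g}: numeric {difference_quotient(x, base):.6f}, "
          f"predicted {predicted_derivative(x, base):.6f}")
```

With base e the predicted value is simply 1/x, which is 0.25 here, anticipating the simplification that follows.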

The logarithm expression simplifies if e is the base, because $\log_e(e) = 1$. Logarithms using e as a base, so-called natural logarithms, are usually denoted "ln" rather than "log." This last equation therefore becomes

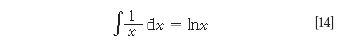

That is, the derivative of the natural logarithm of x is the reciprocal of x. (For a function u(x), the chain rule also applies: $\frac{d}{dx}\ln u = \frac{1}{u}\frac{du}{dx}$.) An equivalent statement is that the integral of the reciprocal function 1/x is the natural logarithm; that is,

$$\int_1^x \frac{1}{t}\,dt = \ln x$$

If you recall that an integral is an area under a curve, then equation 14 has a very interesting geometric ramification: The area under the curve y = 1/x between the limits of 1 and x equals ln x (see Figure 2).

Figure 2: A plot of y = 1/x, showing that the area under the curve starting at 1 and going to some limit greater than 1 equals the natural logarithm of that limit.
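The geometric statement can be checked by estimating the area under y = 1/x numerically. This Python sketch uses the midpoint rule, a simple quadrature chosen here for illustration (the function name and step count are hypothetical), and compares the result with `math.log`:

```python
import math

def area_under_reciprocal(x: float, steps: int = 100_000) -> float:
    """Midpoint-rule estimate of the integral of 1/t from t = 1 to t = x (x > 0).

    Hypothetical helper. For 0 < x < 1 the step h is negative, so the estimate
    comes out negative, just as ln x does.
    """
    h = (x - 1.0) / steps
    return sum(h / (1.0 + (k + 0.5) * h) for k in range(steps))

for x in (0.5, 2.0, math.e, 10.0):
    print(f"x = {x:.5f}: area = {area_under_reciprocal(x):.8f}, ln x = {math.log(x):.8f}")
```

For x = e the area comes out as 1, to within the accuracy of the quadrature.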

There are other ways e shows up in mathematics; reference 3 discusses many of them. As such, using a base of 2.71828 . . . is (to overuse the word) natural, even for three-toed sloths.

Back to Spectroscopy

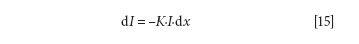

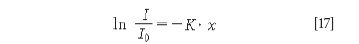

It is in this last manner that the logarithm, including the natural logarithm, shows up in spectroscopy. Early investigation of the absorption of light by matter showed that the infinitesimal decrease in the intensity of light, dI, absorbed by an infinitesimal thickness of sample, dx, was proportional to the absolute intensity of the light, I:

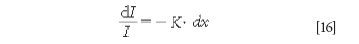

where K is the proportionality constant. This expression rearranges to

$$\frac{dI}{I} = -K\,dx$$

and to get the total change in intensity, we integrate and evaluate between the initial intensity, I0, and the final intensity I:

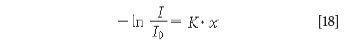

where x now represents the thickness of the sample. It is typical to write the negative sign with the logarithm term, so we usually see this equation written as

$$-\ln\frac{I}{I_0} = \ln\frac{I_0}{I} = Kx$$

Equation 18 is one way of defining the absorbance, A, of a sample in a spectroscopic measurement. If the natural logarithm is used, the absorbance is labeled A' and the constant K is usually depicted as α', called the absorption coefficient of the sample. Absorbance is usually defined most directly in terms of the base-10 logarithm; hence we have

$$A = \log_{10}\frac{I_0}{I} = \alpha x$$

where α = α'/2.303 . . . and A = A'/2.303 . . . . The "2.303 . . ." is the value of ln(10) and represents the multiplicative conversion between base-10 logarithms and natural logarithms.
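The chain of reasoning that leads from equation 15 to equation 18 can be mimicked numerically: divide a sample into many thin slices, let each slice remove a fraction K·dx of the light reaching it, and compare the result with the integrated, exponential form. In this Python sketch the values of K, x, and the slice counts are hypothetical, and the slice-by-slice loop is a simple forward-Euler discretization chosen for illustration:

```python
import math

def transmitted_by_slices(i0: float, k: float, thickness: float, slices: int) -> float:
    """Pass light through `slices` thin layers; each removes K * I * dx (dI = -K I dx).
    Hypothetical helper for illustration."""
    dx = thickness / slices
    intensity = i0
    for _ in range(slices):
        intensity -= k * intensity * dx  # loss proportional to the intensity reaching the layer
    return intensity

i0, k, x = 1.0, 2.0, 0.5        # hypothetical values: K in cm^-1, thickness in cm
exact = i0 * math.exp(-k * x)   # integrated result, I = I0 exp(-Kx)
for n in (10, 100, 10_000):
    print(f"{n:>6} slices: I = {transmitted_by_slices(i0, k, x, n):.6f} (exact {exact:.6f})")

a_natural = math.log(i0 / exact)    # A' = alpha' x = K x (natural logarithm)
a_decadic = math.log10(i0 / exact)  # A = alpha x (base-10 logarithm)
print(f"A' = {a_natural:.4f}, A = {a_decadic:.4f}, A'/A = {a_natural / a_decadic:.4f}")
```

With 10,000 slices the stepwise result matches the exponential to about four decimal places, and the ratio A'/A reproduces ln 10 ≈ 2.303.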

Relationships like equation 15 — wherein the change of a variable is proportional to the value itself — show up regularly in science. That's one reason why "logger rhythms" are so common and so useful.

References

(1) D.W. Ball, Spectroscopy 14(5), 16–17 (1999).

(2) J. Napier, The Construction of the Wonderful Canon of Logarithms, translated by W.R. MacDonald (Dawsons of Pall Mall, London, 1966).

(3) E. Maor, e: The Story of a Number (Princeton University Press, Princeton, New Jersey, 1994).

(4) A.G.R. Smith, Science & Society in the Sixteenth and Seventeenth Centuries (Science History Publications, New York, 1972). Note: Although Laplace is quoted in this reference, the source of the actual quote was not itself referenced. Others have commented on the lack of original source. Any feedback regarding the ultimate source of the Laplace quote would be appreciated.

(5) D.A. McQuarrie, Mathematical Methods for Scientists and Engineers (University Science Books, Sausalito, California, 2003).

David W. Ball is a professor of chemistry at Cleveland State University in Ohio. Many of his "Baseline" columns have been reprinted as The Basics of Spectroscopy, available through SPIE Press. Professor Ball sees spectroscopy from a physical chemistry perspective, because that's his background. He recently served as Distinguished Visiting Professor at the US Air Force Academy, but is now back home in Ohio. He can be reached at d.ball@csuohio.edu

