New LiDAR System Tested to Further Automated Recognition Systems

Scientists from Pusan National University in the Republic of Korea recently tested a new light detection and ranging (LiDAR)-based system for improving autonomous recognition systems. Their findings were later published in Scientific Reports (1).

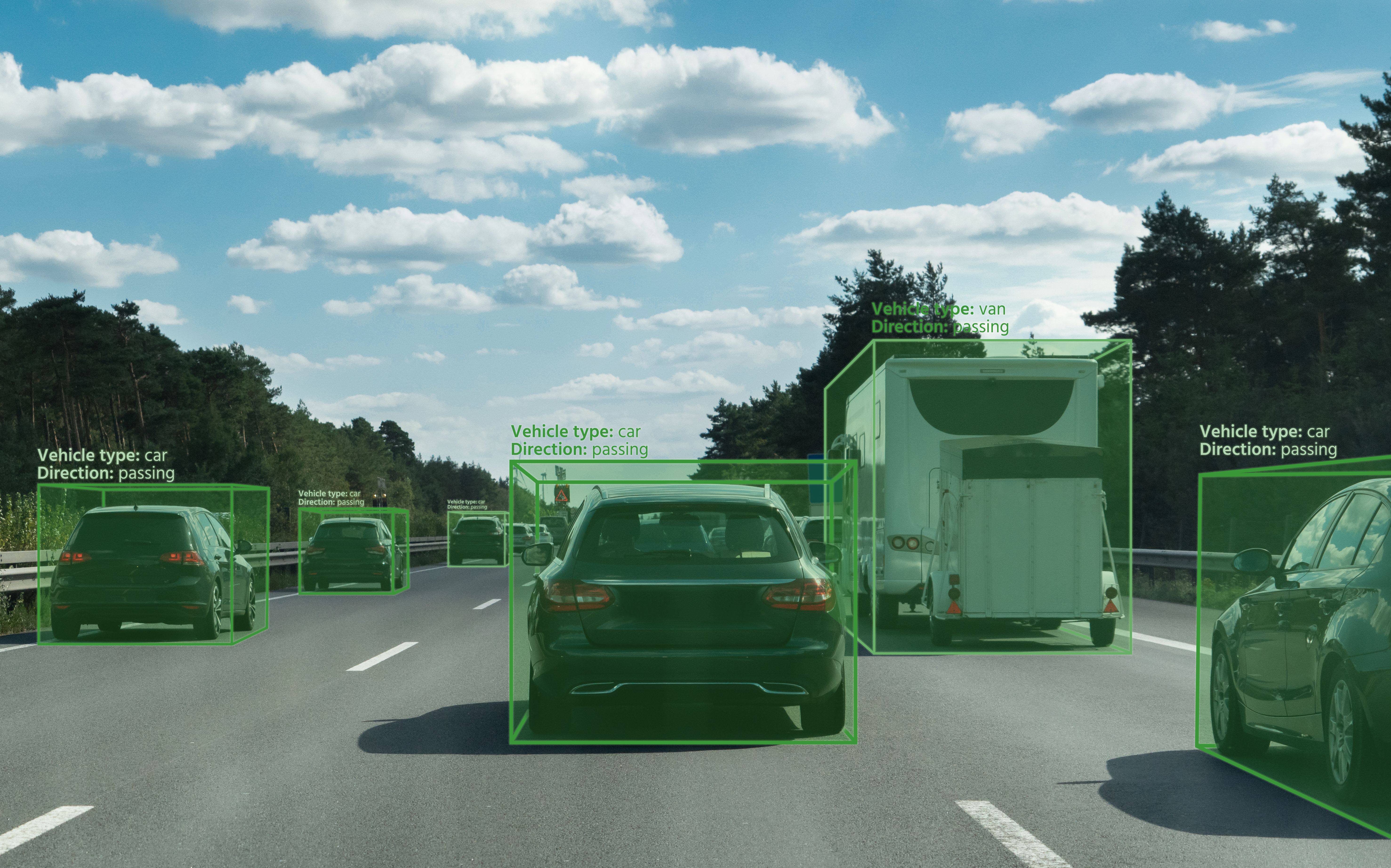

Autonomous vehicle vision with system recognition of cars | Image Credit: © scharfsinn86 - stock.adobe.com

Autonomous recognition systems have grown rapidly in recent years, finding applications in fields like robotics and driving systems. These systems usually employ cameras or LiDAR to acquire information about the surrounding environment during driving, which provides crucial feedback for navigation and safety strategies such as routing and collision avoidance. When combined with time-of-flight (ToF)-based light detection, LiDAR systems can measure object distances over hundreds of meters with centimeter accuracy; performance can be improved further when artificial intelligence (AI) is used alongside these systems. However, object recognition and classification based on shape information alone can still suffer from inaccuracy and misidentification, such as failing to detect ice on the road or to distinguish real people from human-shaped objects.
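The ToF relationship mentioned above is simple arithmetic: the pulse travels to the target and back, so the distance is half the round-trip time multiplied by the speed of light. The following is a minimal sketch of that relationship, not code from the study; the function names are hypothetical and the speed of light in vacuum is used in place of the slightly lower value in air.

```python
# Speed of light in vacuum (m/s); the small correction for air is ignored here.
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_distance_m(round_trip_time_s: float) -> float:
    """Convert a round-trip pulse time to a one-way distance in meters."""
    if round_trip_time_s < 0:
        raise ValueError("round-trip time must be non-negative")
    # The pulse travels to the target and back, so halve the path length.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0


def timing_resolution_s(range_resolution_m: float) -> float:
    """Round-trip timing resolution needed to resolve a given range step."""
    if range_resolution_m < 0:
        raise ValueError("range resolution must be non-negative")
    return 2.0 * range_resolution_m / SPEED_OF_LIGHT_M_PER_S


if __name__ == "__main__":
    print(tof_distance_m(1e-6))       # round trip of 1 microsecond
    print(timing_resolution_s(0.01))  # timing needed for a 1 cm range step
```

Under these assumptions, a 1 µs round trip corresponds to roughly 150 m, and resolving 1 cm in range requires timing the return to within about 67 ps.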

Multi-spectral LiDAR systems have recently been introduced to overcome these limitations by providing additional material information based on spectroscopic imaging. However, previous iterations of these systems typically relied on spectrally resolved detection using bandpass filters or complex dispersive optical systems, which have inherent limitations. For this experiment, the scientists proposed a time-division-multiplexing (TDM)-based multi-spectral LiDAR system for semantic object inference through the simultaneous acquisition of spatial and spectral information. The TDM method, which implements spectroscopy by sampling pulses of different wavelengths in the time domain, can eliminate the optical loss associated with dispersive spectroscopy while providing a simple, compact, and cost-effective system.

According to the scientists, “By minimizing the time delay between the pulses of different wavelengths within a TDM burst, all pulses arrive at the same location during a scan, thereby collecting spectral information from the same spot on the object, which simplifies data processing for the object classification and allows maintaining a sufficient scan rate of the LiDAR system” (1). The TDM-based multi-spectral LiDAR system used in this experiment employed nanosecond pulse lasers at five different wavelengths (980 nm, 1060 nm, 1310 nm, 1550 nm, and 1650 nm) in the short-wave infrared (SWIR) range, covering a 670 nm bandwidth to capture sufficient material-dependent differences in reflectance.
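To illustrate the idea of sampling pulses of different wavelengths in the time domain, the sketch below splits a single detector trace into one amplitude per wavelength by reading fixed time slots. It is an illustrative assumption rather than the authors' processing chain: only the five wavelengths come from the article, while the function name, the slot spacing, and the search window are hypothetical.

```python
# The five laser wavelengths reported in the article, in burst order (assumed).
WAVELENGTHS_NM = [980, 1060, 1310, 1550, 1650]


def demultiplex_burst(trace, first_pulse_index, slot_samples, window=1):
    """Split one detector trace into a peak amplitude per wavelength.

    trace: sequence of detector samples covering one TDM burst echo.
    first_pulse_index: sample index of the first wavelength's return (hypothetical input).
    slot_samples: assumed spacing between successive pulses, in samples.
    window: half-width, in samples, searched around each expected position.
    """
    spectrum = {}
    for k, wavelength in enumerate(WAVELENGTHS_NM):
        center = first_pulse_index + k * slot_samples
        lo = max(0, center - window)
        hi = min(len(trace), center + window + 1)
        if lo >= hi:
            raise ValueError(f"no samples available for the {wavelength} nm slot")
        # Because each wavelength occupies its own time slot, a single
        # detector suffices and no dispersive element or filter is needed.
        spectrum[wavelength] = max(trace[lo:hi])
    return spectrum


if __name__ == "__main__":
    # Synthetic trace with made-up amplitudes; not measured data.
    trace = [0.0] * 60
    for index, amplitude in zip([5, 15, 25, 35, 45], [0.9, 0.8, 0.3, 0.2, 0.1]):
        trace[index] = amplitude
    print(demultiplex_burst(trace, first_pulse_index=5, slot_samples=10))
```

Keeping the slot spacing short relative to the scanner's motion is what lets all five readings be attributed to the same spot on the object, as the quoted passage describes.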

To demonstrate the system’s recognition performance, the scientists mapped the multi-spectral images of a human hand, a mannequin hand, a fabric-gloved hand, a nitrile-gloved hand, and a printed human hand onto a red, green, and blue (RGB) color-encoded image. This image clearly visualizes the spectral differences between materials as RGB color, even though the objects have a similar shape. Further, the classification performance of the multi-spectral images was demonstrated using a convolutional neural network (CNN) model based on the full multi-spectral data set.
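The article does not state how the five spectral channels were encoded as RGB. One simple possibility, shown here purely as an assumption, is to pick three of the five bands and scale each band's reflectance to an 8-bit color channel. The function name, the choice of bands, and the example reflectance values are all hypothetical.

```python
def spectrum_to_rgb(reflectance, bands=(980, 1310, 1550)):
    """Map three chosen band reflectances (0..1) to an 8-bit RGB triple.

    reflectance: dict mapping wavelength in nm to normalized reflectance.
    bands: wavelengths assigned to R, G, and B (an assumed choice).
    """
    rgb = []
    for band in bands:
        # Clamp to the valid range before scaling to 0..255.
        value = min(1.0, max(0.0, reflectance[band]))
        rgb.append(round(value * 255))
    return tuple(rgb)


if __name__ == "__main__":
    # Made-up reflectance values for two pixels with the same shape
    # but different materials; not measured data.
    pixel_a = {980: 1.0, 1060: 0.5, 1310: 0.0, 1550: 0.2, 1650: 0.7}
    pixel_b = {980: 0.2, 1060: 0.5, 1310: 1.0, 1550: 0.0, 1650: 0.7}
    print(spectrum_to_rgb(pixel_a), spectrum_to_rgb(pixel_b))
```

A three-band mapping like this discards two channels, which is consistent with the article's point that the CNN classification used the full multi-spectral data set instead of the RGB rendering.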

Following the experiment, the scientists claimed their system minimized optical loss while enabling simultaneous ranging of the target objects. The multi-spectral images showed a clear distinction between different materials when mapped into an RGB color-encoded image; further, the scientists believe the images are well suited to systematic, high-accuracy classification with a CNN architecture, which makes full use of the spectral information. Though there is more research to be done, the scientists believe this technology offers potential for the development of compact multi-spectral LiDAR systems to enhance the safety and reliability of autonomous driving.

Reference

(1) Kim, S.; Jeong, T-I.; Kim, S.; Choi, E.; et al. Time Division Multiplexing Based Multi-Spectral Semantic Camera for LiDAR Applications. Scientific Reports 2024, 14, 11445. DOI: 10.1038/s41598-024-62342-2
