In Vivo XRF Analysis of Toxic Elements

David R. Chettle, a professor at McMaster University in Hamilton, Ontario, Canada, uses X-ray fluorescence spectroscopy for the in vivo measurement of toxic elements in human subjects, with the goal of developing devices that can be used to investigate the possible health effects of toxin exposure. He recently spoke to us about his research.

In recent years, researchers have made important advances in spectroscopic techniques for biomedical uses, ranging from the identification of infectious agents to delineating the margins of cancerous tumors. X-ray fluorescence (XRF) spectroscopy is among the techniques with useful medical applications, and it is the focus of Chettle's work in the Department of Physics and Astronomy at McMaster University.

Your research has focused on the use of XRF for noninvasive in vivo measurements of several elements in human subjects (1–4). In vivo bone lead measurements were first performed in the mid-1970s. What has been the main motivation for developing in vivo bone lead measurement techniques with XRF?

Lead (Pb) is toxic to humans. Nearly all the Pb in the adult human body is stored in bone, and Pb stays in bone a long time, with biological half-lives ranging from 5 to 30 years. Taken together, these facts mean that bone Pb reflects long-term Pb exposure, and measurements of bone Pb provide insight into the long-term human metabolism of Pb. Several research groups have therefore used in vivo XRF bone Pb measurements to study the metabolism of Pb and to investigate the relationship between chronic Pb exposure and health effects, both in the workplace and in the general environment.
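The sketch below shows why long half-lives make bone Pb a long-term record. It assumes a single-compartment, first-order release model with no new uptake. Real Pb kinetics involve several exchanging compartments, so this is illustrative only. The function names are hypothetical.

```python
import math


def fraction_remaining(years: float, half_life_years: float) -> float:
    """Fraction of an initial bone Pb burden still present after `years`,
    assuming first-order (exponential) release and no further uptake."""
    return 0.5 ** (years / half_life_years)


def release_rate_constant(half_life_years: float) -> float:
    """First-order release rate constant k (per year): k = ln 2 / T_half."""
    return math.log(2) / half_life_years


if __name__ == "__main__":
    # Bracket the 5-30 year range of biological half-lives quoted in the text.
    for t_half in (5.0, 30.0):
        k = release_rate_constant(t_half)
        after_10 = fraction_remaining(10, t_half)
        after_20 = fraction_remaining(20, t_half)
        print(f"T_half = {t_half:4.1f} y  k = {k:.3f}/y  "
              f"left after 10 y: {after_10:.2f}  after 20 y: {after_20:.2f}")
```

Under these assumptions, a 5-year half-life leaves a quarter of the initial burden after 10 years. A 30-year half-life leaves about 79%. Bone Pb measured today therefore still carries the signature of exposure from decades earlier.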

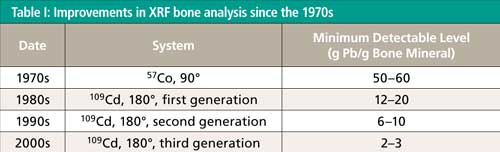

Can you briefly describe the major advances in the technique since the 1970s with respect to instrumentation, method, and minimum detectable level?

The first in vivo bone Pb measurements, carried out by Ahlgren and Mattsson in the early 1970s (5), used the 122-keV γ-rays from ⁵⁷Co to excite Pb K-shell X-rays. They used a 90° scattering geometry and a Ge(Li) detector. Later, Somervaille and colleagues (6) used the 88-keV γ-rays from ¹⁰⁹Cd in place of ⁵⁷Co, together with a near-180° scattering geometry and, by that time, an HpGe (high-purity germanium) detector. There were two main advantages. First, the 88-keV photon energy lies just above the Pb K-shell absorption edge (88.0 keV), so the photoelectric cross section was larger than for ⁵⁷Co. Second, the dominant Compton scattering feature was lower in energy than the Pb K X-rays for the ¹⁰⁹Cd system, whereas it was higher in energy than the Pb K X-rays for the ⁵⁷Co system. This meant that the continuum underlying the Pb X-ray signal was much smaller for ¹⁰⁹Cd than for ⁵⁷Co.
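The second advantage follows from the Compton formula, E′ = E / [1 + (E/mₑc²)(1 - cos θ)]. The sketch below evaluates it for the two geometries described. It assumes scattering from free electrons at rest, ignoring Doppler broadening and the spread of angles in a real geometry. It also uses approximate tabulated energies (assumed values): 122.06 keV for ⁵⁷Co, 88.03 keV for ¹⁰⁹Cd, and the principal Pb K lines.

```python
import math

ELECTRON_REST_ENERGY_KEV = 510.999  # m_e c^2 in keV

# Approximate energies (keV) of the principal Pb K X-ray lines.
PB_K_LINES_KEV = {"Ka2": 72.80, "Ka1": 74.97, "Kb1": 84.94}


def compton_energy(e_kev: float, angle_deg: float) -> float:
    """Photon energy after Compton scattering through angle_deg from a free
    electron at rest (no Doppler broadening)."""
    theta = math.radians(angle_deg)
    return e_kev / (1.0 + (e_kev / ELECTRON_REST_ENERGY_KEV) * (1.0 - math.cos(theta)))


def position_relative_to_pb(e_kev: float) -> str:
    """Say where a scatter peak falls relative to the Pb K lines listed above."""
    if e_kev > max(PB_K_LINES_KEV.values()):
        return "above the Pb K X-rays"
    if e_kev < min(PB_K_LINES_KEV.values()):
        return "below the Pb K X-rays"
    return "among the Pb K X-rays"


if __name__ == "__main__":
    # (source, gamma energy in keV, scattering angle in degrees) as described in the text
    systems = [("57Co", 122.06, 90.0), ("109Cd", 88.03, 180.0)]
    for name, e_gamma, angle in systems:
        e_scat = compton_energy(e_gamma, angle)
        print(f"{name}: {e_gamma} keV at {angle:.0f} deg -> "
              f"{e_scat:.1f} keV, {position_relative_to_pb(e_scat)}")
```

Under these assumptions, the ⁵⁷Co scatter peak at 90° lands near 98.5 keV, above the Pb K X-rays. The ¹⁰⁹Cd peak near 180° lands near 65.5 keV, below them.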

There have been subsequent developments of the ¹⁰⁹Cd bone Pb system. The first-generation system used an annular ¹⁰⁹Cd source with an inner diameter of 22 mm and a 16-mm-diameter HpGe detector, so the source surrounded the detector. The second-generation system, dating from the early 1990s, inverted this geometry: a small spot source of ¹⁰⁹Cd was mounted centrally in front of a larger (51-mm-diameter, 20-mm-thick) HpGe detector. This configuration has been the most widely adopted in different research laboratories. The limitation of the second-generation system was its maximum pulse throughput rate, so the third-generation system replaced the single detector with an array of four smaller detectors in a “clover leaf” configuration. The total throughput could therefore be four times larger, and the detector resolution also improved, resulting in a significant improvement in minimum detectable level. These successive improvements are summarized in Table I.
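A simple dead-time model illustrates why splitting the flux across four detectors helps. This sketch rests on several assumptions:

- Each detector channel behaves as a non-paralyzable counter with the same dead time (a hypothetical 10 µs here).
- The incident flux is shared equally among the channels.
- The minimum detectable level scales as 1/√counts.

It ignores the resolution improvement the text also mentions.

```python
import math


def nonparalyzable_output(input_rate: float, dead_time_s: float) -> float:
    """Recorded count rate for a non-paralyzable detector/electronics chain:
    m = n / (1 + n * tau)."""
    return input_rate / (1.0 + input_rate * dead_time_s)


def array_output(total_input_rate: float, dead_time_s: float, n_detectors: int) -> float:
    """Total recorded rate when the incident flux is shared equally among
    n identical, independent detector channels."""
    per_channel = total_input_rate / n_detectors
    return n_detectors * nonparalyzable_output(per_channel, dead_time_s)


if __name__ == "__main__":
    tau = 10e-6  # hypothetical 10 microsecond dead time per channel
    for rate in (1e4, 1e5, 1e6):
        single = array_output(rate, tau, 1)
        clover = array_output(rate, tau, 4)
        gain = clover / single
        # If the measurement is counting-statistics limited, the detection
        # limit scales roughly as 1/sqrt(counts) for a fixed counting time.
        print(f"input {rate:9.0f}/s  single {single:8.0f}/s  four {clover:8.0f}/s  "
              f"throughput x{gain:.2f}  MDL x{1 / math.sqrt(gain):.2f}")
```

At low input rates the array gains little. As the single detector saturates, the throughput ratio approaches four. Under the counting-statistics assumption, that corresponds to roughly halving the detection limit for a fixed measurement time.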

What other techniques have been used to perform these measurements? What are the advantages of the in vivo XRF approach compared with those methods?

The main technique, apart from X-ray fluorescence, that is used for in vivo elemental analysis is neutron activation analysis. This technique counts either the γ-rays emitted when a radioisotope produced by the neutron irradiation decays, or the γ-rays emitted promptly (~10⁻¹² s) after neutron absorption. For most elements, either X-ray fluorescence or neutron activation analysis is clearly preferable, so the two techniques should be seen as largely complementary. For Pb, for example, X-ray fluorescence is preferred: Pb nuclei are unusually stable because of their nuclear structure, so cross sections for neutron absorption are small compared with the photoelectric cross section in Pb. For aluminum, on the other hand, the X-ray energies are so low that the X-rays are very unlikely to escape from the body. By contrast, the radioisotope ²⁸Al, formed by neutron absorption in natural ²⁷Al, emits a 1.78-MeV γ-ray, so neutron activation is preferred for Al. For some elements, either technique is viable. For cadmium, the photoelectric cross section at 60 keV is 49.0 cm⁻¹. The cross section for neutron absorption in the isotope ¹¹³Cd is 20,000 barns, which is equivalent to 931 cm⁻¹. However, ¹¹³Cd makes up only 12.22% of natural Cd, so averaged over the element the neutron absorption cross section is 114 cm⁻¹. There are other factors to be taken into account, but effectively the two techniques are comparable in their ability to detect Cd in the human body.
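The conversion from barns to cm⁻¹ in the Cd comparison is a macroscopic cross section, Σ = σN, where N is the number density of the absorbing nuclide. The sketch below assumes the quoted figures refer to pure Cd metal at a density of 8.65 g/cm³ with an atomic weight of 112.41 g/mol. Neither value is stated in the text.

```python
AVOGADRO = 6.02214076e23  # atoms per mole
BARN_CM2 = 1e-24          # 1 barn expressed in cm^2


def macroscopic_cross_section(sigma_barn: float, density_g_cm3: float,
                              molar_mass_g_mol: float, abundance: float = 1.0) -> float:
    """Sigma = sigma * N (cm^-1), where N is the number density (atoms/cm^3)
    of the absorbing nuclide, scaled by its isotopic abundance."""
    number_density = density_g_cm3 / molar_mass_g_mol * AVOGADRO * abundance
    return sigma_barn * BARN_CM2 * number_density


if __name__ == "__main__":
    rho_cd = 8.65          # g/cm^3, assumed density of Cd metal
    m_cd = 112.41          # g/mol, assumed atomic weight of Cd
    sigma_113 = 20_000.0   # barn, 113Cd neutron absorption cross section (from the text)
    photoelectric = 49.0   # cm^-1 at 60 keV (from the text)

    pure_113 = macroscopic_cross_section(sigma_113, rho_cd, m_cd)
    natural = macroscopic_cross_section(sigma_113, rho_cd, m_cd, abundance=0.1222)
    print(f"pure 113Cd: {pure_113:.0f} cm^-1, natural Cd: {natural:.0f} cm^-1, "
          f"ratio to photoelectric: {natural / photoelectric:.1f}")
```

With these inputs, pure ¹¹³Cd gives roughly 927 cm⁻¹. That is close to the 931 cm⁻¹ quoted; the small gap presumably reflects a slightly different density or set of constants. Natural Cd gives roughly 113 cm⁻¹, a little over twice the 49.0 cm⁻¹ photoelectric value.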

You used the in vivo XRF technique in a four-year study of bone strontium levels in individuals with osteoporosis or osteopenia who had been self-administering strontium citrate supplements (2). Can you briefly describe the method used to collect the data in this study?

The radioisotope source ¹²⁵I was used to excite the Sr K X-rays. ¹²⁵I emits γ-rays at a little over 35 keV, but because it decays by electron capture to tellurium, its predominant emission is tellurium X-rays. In addition, the ¹²⁵I was in the form of seeds used for brachytherapy, in which the iodine is adsorbed onto silver beads, so the source emits silver X-rays as well as the direct emissions from ¹²⁵I. The detector was a Si(Li) detector (16 mm in diameter, 10 mm thick), and a backscatter (~180°) geometry was used. The Sr was measured in a finger and at the ankle, and each measurement took 30 min.
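This sketch applies the same Compton reasoning to the Sr measurement. It checks two things: that every principal line the source emits lies above the Sr K absorption edge, and that the ~180° backscatter peaks stay above the Sr K X-rays. The line energies are approximate tabulated values (assumptions, not figures from the study), and the Compton formula assumes free electrons at rest.

```python
import math

ELECTRON_REST_ENERGY_KEV = 510.999  # m_e c^2 in keV

SR_K_EDGE_KEV = 16.105                           # Sr K absorption edge (approx.)
SR_K_LINES_KEV = {"Ka1": 14.165, "Kb1": 15.836}  # Sr K X-rays (approx.)

# Approximate principal photon energies (keV) from an 125I seed on silver.
SOURCE_LINES_KEV = {
    "Ag Ka1": 22.16,
    "Ag Kb1": 24.94,
    "Te Ka1": 27.47,
    "Te Kb1": 31.00,
    "125I gamma": 35.49,
}


def compton_energy(e_kev: float, angle_deg: float = 180.0) -> float:
    """Photon energy after Compton scattering from a free electron at rest."""
    theta = math.radians(angle_deg)
    return e_kev / (1.0 + (e_kev / ELECTRON_REST_ENERGY_KEV) * (1.0 - math.cos(theta)))


def assess_line(e_kev: float) -> tuple[bool, float, bool]:
    """Return (can excite the Sr K shell, 180-degree Compton energy,
    Compton peak lies above the Sr K X-rays)."""
    excites = e_kev > SR_K_EDGE_KEV
    e_back = compton_energy(e_kev, 180.0)
    clear_of_signal = e_back > max(SR_K_LINES_KEV.values())
    return excites, e_back, clear_of_signal


if __name__ == "__main__":
    for name, energy in SOURCE_LINES_KEV.items():
        excites, e_back, clear = assess_line(energy)
        print(f"{name:11s} {energy:6.2f} keV  excites Sr K: {excites}  "
              f"backscatter {e_back:5.2f} keV  above Sr K lines: {clear}")
```

Under these assumptions, every listed line can excite Sr K X-rays. Even the lowest-energy backscatter peak (Ag Kα, near 20.4 keV) stays several keV above Sr Kβ.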

You and your group have also studied the bone lead concentration of a cross section of the population of Toronto, Ontario, Canada (3). What basic approach was used for those measurements? What was the age range of your test subjects, and what general results did you obtain?

The bone Pb study was conducted in close collaboration with Health Canada and colleagues at other institutions. A third-generation system (four HpGe detectors, each 16 mm in diameter and 10 mm thick) was used for bone Pb measurements at the tibia (shin) and the calcaneus (heel). A questionnaire was administered, and blood samples were also collected. One of Health Canada's objectives was to test how much information could be collected in a single 1-h visit per participant, so each bone Pb measurement lasted 22 min. The youngest participant was one year old and the oldest 82. The participants were roughly equally distributed between the sexes and across the age range. The lowest bone Pb levels were in people in their 20s or early 30s, and there was little difference between women and men. The bone Pb levels were about a factor of two lower than those measured in the nearby city of Hamilton in studies conducted in the 1990s.

Gadolinium, which is used in magnetic resonance imaging (MRI) contrast agents, was the focus of another of your in vivo XRF bone studies (4). Why was gadolinium an important element to monitor by XRF in this study? How did your experimental setup and method for this study differ from those used in the bone lead research?

Gadolinium is a very valuable contrast agent for MRI. It is administered in a bound (chelated) form, and it is generally presumed that virtually all the Gd is eliminated from the body over a short period of time. However, evidence is growing that a small proportion of the Gd can be retained, and the question is being raised as to whether this residual Gd could in any way be harmful. This is the context in which the in vivo measurement of residual Gd was developed. The system used is very nearly the same as that used for bone Pb, with the four-detector array. The spectral analysis obviously focuses on the Gd X-rays rather than the Pb X-rays. We have also attempted to minimize the amount of tungsten (W) associated with the source and the source collimator. W K X-rays Compton scattered through about 180° raise the continuum level under the Gd X-rays and so worsen the detection limit.
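This is a rough check on where backscattered tungsten radiation ends up. It uses the free-electron Compton formula and approximate tabulated W and Gd K line energies (assumed values). The sketch only locates the scatter peaks. The continuum contribution described above also depends on Doppler broadening and the spread of scattering angles, which the sketch ignores.

```python
import math

ELECTRON_REST_ENERGY_KEV = 510.999  # m_e c^2 in keV

# Approximate tabulated K X-ray energies (keV).
W_K_LINES_KEV = {"Ka2": 57.98, "Ka1": 59.32, "Kb1": 67.24}
GD_K_LINES_KEV = {"Ka2": 42.31, "Ka1": 43.00, "Kb1": 48.70}


def compton_energy(e_kev: float, angle_deg: float) -> float:
    """Photon energy after Compton scattering from a free electron at rest."""
    theta = math.radians(angle_deg)
    return e_kev / (1.0 + (e_kev / ELECTRON_REST_ENERGY_KEV) * (1.0 - math.cos(theta)))


def nearest_gd_line(e_kev: float) -> tuple[str, float]:
    """Return the Gd K line closest in energy to e_kev and the separation (keV)."""
    name = min(GD_K_LINES_KEV, key=lambda k: abs(GD_K_LINES_KEV[k] - e_kev))
    return name, abs(GD_K_LINES_KEV[name] - e_kev)


if __name__ == "__main__":
    for w_name, w_energy in W_K_LINES_KEV.items():
        for angle in (150.0, 180.0):  # a spread of near-backscatter angles
            e_scat = compton_energy(w_energy, angle)
            gd_name, gap = nearest_gd_line(e_scat)
            print(f"W {w_name} ({w_energy} keV) at {angle:.0f} deg -> {e_scat:.2f} keV, "
                  f"{gap:.2f} keV from Gd {gd_name}")
```

Under these assumptions, W Kα scattered through 150° to 180° lands at roughly 47 to 49 keV. That places it next to the Gd Kβ lines and a few keV above Gd Kα, consistent with W scatter degrading the Gd measurement.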

What are the next steps in your research?

To improve the detection limit for Pb or Gd, we are exploring the ultrahigh-throughput pulse-processing electronics that have been used successfully with silicon drift detectors, primarily at synchrotrons. There are challenges in using these electronics with the relatively large HpGe detectors needed for the 40–100 keV energy range. We are therefore working actively with the companies concerned to improve detector resolution while still taking advantage of the dramatically higher count-rate throughput that can be achieved.

In terms of applications, there is evidence that parameters that have been treated as fixed may not be constant. Examples include the relationship between cumulative blood Pb and uptake of Pb into bone, and the half-life governing the release of Pb from bone back into blood. These parameters may depend on the intensity of exposure or on cumulative past exposure, or they may vary with a person’s age. This hypothesis is controversial and has potential public health consequences, so we wish to explore it further.

There is some evidence that Sr levels in Western populations may be lower than is best for bone health, but there is also evidence that supplementing with Sr may have harmful side effects. Our data showed that bone Sr levels increased by a factor of at least 10, and by as much as 100, in people who were self-supplementing. This increase occurred over a relatively short period of up to four years. It is possible that this is too much, in too short a time, and too late; that is, the supplementation was done after the damage to bone had already been sustained. We would like to investigate the hypothesis that much more modest supplementation, administered very gradually and earlier in life, could help to reduce the prevalence of osteoporosis. This would be a multistep, multidisciplinary, and highly collaborative program of research.

We have only just begun to make Gd measurements. As well as trying to improve the detection limit, we need to find out how Gd retention varies with several factors: the type of contrast agent used, the number of times it was administered, the time since administration, and the health or disease status of the people concerned.

References

- D.R. Chettle, Pramana J. Phys. 76(2), 249–259 (2011).

- H. Moise, D.R. Chettle, and A. Pejović-Milić, Physiol. Meas. 37, 429–441 (2016).

- S. Behinaein, D.R. Chettle, M. Fisher, W.I. Manton, L. Marro, D.E.B. Fleming, N. Healey, M. Inskip, T.E. Arbuckle, and F.E. McNeill, Physiol. Meas. 38, 431–451 (2017).

- M.L. Lord, F.E. McNeill, J.L. Gräfe, A.L. Galusha, P.J. Parsons, M.D. Noseworthy, L. Howard, and D.R. Chettle, Appl. Radiat. Isot. 120, 111–118 (2017).

- L. Ahlgren, K. Lidén, S. Mattsson, and S. Tejning, Scand. J. Work Environ. Health 2, 82 (1976).

- L.J. Somervaille, D.R. Chettle, and M.C. Scott, Phys. Med. Biol. 30, 929 (1985).
