Sampling in Mass Spectrometry

Columnist Kenneth L. Busch discusses some of the basic considerations for valid sampling, with some examples pertinent to mass spectrometry.

"Today it seems impossible to introduce students in any significant way to all of the apparatus and instruments available for separation and measurement. Anyway, I know of no evidence that industry wants the product of a button-pushing, meter-reading excursion past an array of expensive instruments." So stated M.G. Mellon of Purdue University at a meeting in 1959 (1), describing the goals of a university course in quantitative analysis. Mellon went on to say that "It seems obvious that analyses cannot be made without samples [and for sample selection and preparation], homogeneous materials present no problem, provided enough is available for the required determination(s). In contrast, obtaining a representative sample from some heterogeneous materials is one of the most difficult analytical operations. In spite of this fact, a recent survey of 50 books revealed that 20% of them did not mention sampling." In 1971, Herb Laitinen, Editor of Analytical Chemistry, also wrote about the full role of the analytical chemist in the experimental design for sampling as well as in the measurement and interpretation of data (2).

In 2009, it might seem even harder to create an analytical chemistry curriculum that balances breadth of coverage with depth of education, as the tools for separation and measurement are far more numerous and diverse than in 1959. Attaining a balance is made even more difficult by the fact that the time allotted to an undergraduate university education remains about the same, and the fact that educational technology sometimes leads to information overload rather than enhanced understanding. It is safe to assume, however, that industry probably still prefers chemists who understand the basics of analysis, rather than those who might simply be button-pushers. The need to understand the basics may be one of the reasons this column has appeared since 1995, and why continuing education in all of analytical chemistry has expanded within professional societies and other venues. Suggestions for incorporating sampling theory into the undergraduate curriculum have been discussed (3) (using as an example the determination of metals in breakfast cereals with inductively coupled plasma spectroscopy), and specialized texts are available (4–6).


Every new advance in instrumentation alters our understanding of the components that make up a sample and bears on the measures that must be used to determine whether a sampling protocol is valid. With new analytical measurement capabilities, samples previously thought to be homogeneous may be found to exhibit significant heterogeneity. The heterogeneity may lie in the spatial distribution of sample components or in their distribution over time. A rational evaluation of sampling has always embraced these possibilities. In the final analysis, even large-volume and well-mixed samples can only be defined as uniform or not when the component to be measured is specified and its distribution determined. It is prudent at the beginning of any analysis to assume that the sample volume is heterogeneous. Then, as the measurement proceeds, a limit can be established for each component at which variation will be deemed significant. Therefore, an accurate characterization of the sampling volume can only occur after the scouting data are acquired and evaluated.

Although more sophisticated theories of sampling have been developed, and these are now supported by more robust statistical tools, proper sampling is as difficult now as it was in 1959. Proper sampling still requires careful experimental design and the devotion of considerable time and resources from the analyst. The study of sampling melds mathematics, mechanics, and chemistry, and it probably still does not receive the attention it merits in general texts of analytical chemistry. In books that are written specifically for mass spectrometry, sampling is usually not mentioned at all, yielding its space to descriptions of instrumentation and details of spectral interpretation. One is left to wonder how much of the recorded mass spectrometry (MS) data may be both accurate and precise, but ultimately not suited to answer the question at hand. Challenges in sampling for MS have become especially evident as portable mass spectrometers have been taken into the field, and the analyst is faced with real-time sampling decisions. Similarly, mass spectrometers are now incorporated into production lines for quality control measurement (as in brewing), and samples may be presegmented as discrete units, or even configured as their final forms (such as a pill for which the level of active ingredient is to be assayed).

In this column, some of the basic considerations for valid sampling are described, with some examples pertinent to MS. A later column will drill down to more specific situations, such as sampling for in-field analysis or process control sampling.

We begin with an overview of basic sampling strategies for populations of samples. These strategies have been described as random sampling, stratified random sampling, systematic sampling, and rational subgrouping. Each strategy has its particular area of application, and the selection depends upon the character of the population itself as well as the type of study (population or process) to be conducted, that is, on what is to be measured. In general, a population study seeks to establish the characteristics or components of a population when they are not expected to change over time. A process study follows changes in an evolving population.

A key characteristic of random sampling is that each unit or subset of the population has an equal chance of being selected as the sample for measurement. Just as logically, random sampling only works when there is no evident stratification, segregation, or bias. The fact that bias is unknown cannot be viewed as justification for random sampling. Measures taken to reduce sample nonhomogeneity and remove bias include dissolution, stirring, mixing, or grinding.
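As a minimal sketch of the equal-chance requirement, the following Python fragment draws a simple random sample without replacement. The population of 500 numbered vials, the function name, and the fixed seed are all hypothetical and serve only to make the illustration reproducible.

```python
import random

def simple_random_sample(units, n, seed=None):
    """Draw n units without replacement; every unit has the same chance of selection."""
    rng = random.Random(seed)
    return rng.sample(list(units), n)

# Hypothetical population: 500 numbered vials taken from one well-mixed lot.
vials = [f"vial-{i:03d}" for i in range(1, 501)]

# A fixed seed makes the draw repeatable, which helps when documenting a protocol.
chosen = simple_random_sample(vials, n=10, seed=42)
print(chosen)
```

The draw is only as unbiased as the list it is taken from: if the vials themselves were collected from a stratified or segregated source, randomizing their selection in the laboratory does not repair the earlier bias.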

Stratified random sampling is used when the analyst knows or suspects that there may be some component distribution that may bias measurement results, and a correction can be achieved through a selection process that ensures that each of the stratified groups is represented in the final sampled population. The size of each sample usually is related to the proportionality of the stratification.
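Proportional allocation across strata can be sketched as follows. This is an illustration under stated assumptions rather than a prescribed procedure: the three layers of a settled container, their sizes, and the rule of taking at least one unit per stratum are hypothetical choices.

```python
import random

def stratified_sample(strata, total_n, seed=None):
    """Sample each stratum in proportion to its share of the population.

    strata: dict mapping a stratum name to the list of units in that stratum.
    Returns a dict mapping each stratum name to its randomly selected units.
    Because of rounding, the overall count can differ slightly from total_n.
    """
    rng = random.Random(seed)
    population = sum(len(units) for units in strata.values())
    selected = {}
    for name, units in strata.items():
        # Proportional allocation, with at least one unit per stratum
        # so that every stratum is represented in the final sample.
        k = max(1, round(total_n * len(units) / population))
        k = min(k, len(units))
        selected[name] = rng.sample(units, k)
    return selected

# Hypothetical strata: candidate sampling positions in three layers of a settled container.
strata = {
    "top": [f"top-{i}" for i in range(200)],
    "middle": [f"middle-{i}" for i in range(500)],
    "bottom": [f"bottom-{i}" for i in range(300)],
}

picked = stratified_sample(strata, total_n=20, seed=7)
for name, units in picked.items():
    print(name, len(units))
```

Within each stratum the selection is still random; the stratification only guarantees that no layer is left out by chance.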

Systematic sampling can be used in a process study by taking samples at a specified frequency or interval, if it can be shown that the selected frequency itself does not lead to bias. An example might be the selection of every 17th unit for analysis from a process stream that consists of segmented units. Alternatively, a sample might be taken every 3 min from a continuous flow. Systematic sampling has the advantage of being, well, systematic. The flow of samples to be analyzed is known ahead of time.
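The caveat about the sampling interval can be made concrete with a small sketch. The scenario is hypothetical: a line in which 17 filling stations work in rotation, so that taking every 17th unit always returns product from the same station, whereas an interval that shares no common factor with the cycle visits them all.

```python
import random

def systematic_sample(units, interval, seed=None):
    """Select every `interval`-th unit, starting from a random offset."""
    rng = random.Random(seed)
    start = rng.randrange(interval)
    return units[start::interval]

# Hypothetical line: 1700 tablets produced by 17 stations in rotation,
# so tablet i comes from station i % 17.
tablets = list(range(1700))

def stations_seen(sample):
    """Return the set of stations represented in a sample of tablet indices."""
    return {tablet % 17 for tablet in sample}

every_17th = systematic_sample(tablets, interval=17, seed=1)
every_16th = systematic_sample(tablets, interval=16, seed=1)

print(len(stations_seen(every_17th)))  # 1: the interval matches the station cycle
print(len(stations_seen(every_16th)))  # 17: every station is represented
```

A check of this kind, run against whatever periodicity the process is known or suspected to have, is one way to show that the chosen frequency does not itself introduce bias.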

Finally, a process called rational subgrouping is a sampling strategy designed to expose suspected variations in a process or population. Such a strategy may be designed, for example, to differentiate short-term variations from long-term variations in a sample population known to be affected by both.
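One simple way to see what such a design exposes is to compare the scatter inside each subgroup with the scatter between subgroup means. The sketch below assumes hypothetical data: four subgroups of three back-to-back measurements, each subgroup collected at a different time. The variance comparison is a crude illustration, not a full analysis of variance.

```python
from statistics import mean, variance

def subgroup_variation(subgroups):
    """Return (within, between) variance estimates for a list of subgroups.

    within  : average of the sample variances inside each subgroup (short-term scatter).
    between : sample variance of the subgroup means (long-term drift plus a
              small contribution from the short-term scatter).
    """
    within = mean(variance(group) for group in subgroups)
    between = variance([mean(group) for group in subgroups])
    return within, between

# Hypothetical readings: three consecutive measurements at each of four time points.
readings = [
    [5.01, 5.03, 4.99],
    [5.21, 5.19, 5.20],
    [5.40, 5.42, 5.38],
    [5.61, 5.59, 5.60],
]

within, between = subgroup_variation(readings)
print(f"short-term variance: {within:.5f}")
print(f"long-term variance:  {between:.5f}")
```

In this made-up data set the between-subgroup variance is far larger than the within-subgroup variance, which is the signature of a slow drift superimposed on small short-term fluctuations.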

The next issue confronting the analyst is proper sample size or sample volume. This decision is tied to the properties of the sample as well as the performance characteristics of the measurement. Logically, a mass spectrometric analysis with high sensitivity might require a smaller sample size. That sounds ideal, but a smaller sample size might lead to a sampling error that is muted in a larger sample size.

The desired accuracy and precision of the measurement also factor into an evaluation of requisite sample size. Analysis precision can be determined from a series of measurements only if those measurements are representative of the same sample. In general, a need for greater accuracy and precision requires that a larger sample be obtained initially. Finally, the magnitude of the expected variation in the sample influences sample size; a greater expected variation should result in a larger sample.
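The two trends described above (more samples for tighter precision, more samples for greater expected variation) appear in a common textbook approximation for the number of independent samples, n ≥ (z·s/e)². This formula is not taken from the column; it assumes roughly normal, independent sampling variation, and the function name, the default z value, and the numbers are illustrative.

```python
import math

def samples_needed(expected_sd, tolerated_error, z=1.96):
    """Approximate number of independent samples needed so that the mean lies
    within +/- tolerated_error of the true value at the confidence level implied
    by z (1.96 corresponds to about 95% for normally distributed variation).

    expected_sd and tolerated_error must be in the same units.
    """
    return math.ceil((z * expected_sd / tolerated_error) ** 2)

print(samples_needed(expected_sd=2.0, tolerated_error=1.0))  # baseline
print(samples_needed(expected_sd=4.0, tolerated_error=1.0))  # more variable material
print(samples_needed(expected_sd=2.0, tolerated_error=0.5))  # tighter precision required
```

Doubling the expected variation or halving the tolerated error each roughly quadruples the number of samples, which is why scouting data on sample variability are so valuable before a full study is designed.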

We usually think of sampling as the capture of a subvolume representative of a larger sample volume (for example, grabbing a subset of a large homogeneous sample as described by Mellon). This mode of random sampling describes the origin of most samples analyzed by gas chromatography (GC)–MS or liquid chromatography (LC)–MS. We grow convinced of the validity of sampling when we observe no obvious sample stratification, and when replicate measurements return the same measured values (outside of the analytical error). To be absolutely sure of the correctness of sampling, we should sequester the entire sample volume, but this is not usually practicable. Instinctively, though, we commit to obtaining as large a sample volume as possible, even as every different initial volume is transformed into the few milliliters (or even microliters) of sample stored in the vial, and from which aliquots are drawn for analysis. The subsequent danger for the analyst who never leaves the laboratory is that every sample looks exactly the same.

In modern MS, the sample volume may also correspond to a discrete unit, such as a single tablet, and the sampling problem also expands to a determination of whether that single tablet properly represents the larger population of a production batch. In imaging MS, the sample volume may even be as small as a single cell from a living organism, or a preserved organ or tissue slice. In some situations, the analyst expects that that cell represents the collection of cells in an organ. In other instances, that particular cell, and the MS data derived from it, may be interpreted to show that the cell is different from the bulk population of cells, as in some indication of a cancerous tumor (rational subgrouping). Even surface analysis is a volume analysis, with one dimension very small relative to the others.

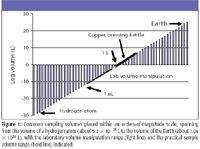

Most of the common texts on sampling refer to a sample size, and describe it in terms of mass. Sampling is, however, an issue of volume, and the segregation of a sample volume from its larger source volume. Figure 1 places sampling volumes in perspective through an orders-of-magnitude plot. This perspective allows the analyst to compare the volume of the vial in the laboratory (within the range line designated by laboratory volume manipulation) with the volume of the original sample represented (the range under the bold line), thereby emphasizing the importance of proper sampling. Remember that sample preparation procedures often involve extraction and concentration of a larger initial sample volume to produce a volume commensurate with storage requirements and the analytical method itself. In the perspective shown in Figure 1, note that these sample preparation steps (extraction, concentration, derivatization) usually are all accommodated within one or two orders-of-magnitude of the volume scale. But it is exactly these discrete physical and chemical steps that can easily lead to significant discrimination and matrix effects.

Figure 1

Practical limitations on sampled volume size mean that the scale in which we operate does not usually extend very far above a 1-L volume. Furthermore, sampling volumes that exceed tens or hundreds of liters are rare compared to the multitude of samples of smaller volume. Figure 1 does not include a time perspective, or consider parameters relevant to sampling that change with time.

As an example of when time figures into sampling, consider the selection of samples from a large vat in which fermentation is occurring. The analyst might be tasked with monitoring alcohol content, or the creation of other flavor or off-flavor components, in a large volume that is physically inhomogeneous with respect to suspended solids, presumably homogeneous with respect to dissolved components, and changing rapidly with time. In simpler terms, proper sampling of beer is a topic worthy of its own careful study; the volume of a typical copper brewing kettle is indicated in Figure 1.

In GC–MS and LC–MS, the sample carousel is filled with vials that each carry their own sampling history of time and place. The validity of the measurement is therefore intimately linked with the validity of the sampling. The "theory of sampling" (TOS) (7) is a comprehensive overview of all the errors that can occur in the sampling of a heterogeneous volume. The assumption is that once the errors are identified, they can be evaluated and minimized and perhaps eliminated. The comprehensive theory of sampling does include consideration of the time evolution of samples. In brief, TOS (8–10) provides a description of what is termed the total sampling error (TSE) and compares this to the total analytical error (TAE). The TAE is derived from our evaluations of the accuracy and precision of our MS measurements. From our experience, we know that the TAE is on the order of a few percent, given a reasonable confidence level. For those systems in which the TSE can be estimated reliably, it usually is found to be 10–100 times the magnitude of the TAE. While we may take great personal pleasure in wringing out the best performance from our analytical instruments, common sense informs us that efforts might be more profitably directed to the sampling process. Additionally, we should consider an obligation to report results in a way that is strongly linked to the sampling process itself, analogous to our obligation to report the details of the analysis along with the measurement results. The TOS has been explored most thoroughly in the field of mineralogy and geological sampling, especially within the context of ore assay for valuable elements.
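The argument that effort is better spent on sampling can be checked with simple arithmetic. The sketch below assumes that the sampling and analytical errors are independent and can be expressed as relative standard deviations, so that they combine in quadrature; that assumption, the function name, and the example values (a 2% TAE and a TSE ten times larger) are illustrative and not taken from the cited TOS literature.

```python
import math

def combined_error(tse_percent, tae_percent):
    """Combine independent sampling and analytical errors (both in percent)
    as the square root of the sum of their squares."""
    return math.hypot(tse_percent, tae_percent)

baseline = combined_error(tse_percent=20.0, tae_percent=2.0)
better_instrument = combined_error(tse_percent=20.0, tae_percent=1.0)  # TAE halved
better_sampling = combined_error(tse_percent=10.0, tae_percent=2.0)    # TSE halved

print(f"baseline:          {baseline:.2f}%")
print(f"TAE halved:        {better_instrument:.2f}%")
print(f"TSE halved:        {better_sampling:.2f}%")
```

Under these assumptions, halving the analytical error changes the overall uncertainty by less than a tenth of a percentage point, while halving the sampling error cuts it nearly in half.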

The TOS uses concepts that would have been familiar to Mellon in 1959. The terms in TOS differ somewhat from those used in modern sampling statistics, reflecting its development in the practical sciences. However, as one might expect, a sampling constant appears centrally in the mathematical treatment that describes the probability that a targeted analyte is sampled throughout a sample volume without discrimination. The heterogeneity of a sample volume is reduced by physical processes such as stirring, mixing, or grinding, and is linked to the sampling constant. In TOS, the heterogeneity is estimated mathematically in an expression that involves particle size, shape, size distribution, and factors related to analyte partitioning and extraction. Our preference for liquid sample volumes is a natural consequence of the relative importance of these factors. Finally, the common-sense conclusion that a larger sample size reduces sampling errors is also reflected in the TOS mathematical treatment. Sample volumes for GC–MS and LC–MS analyses usually are within one or two orders-of-magnitude of the volumes used for other analytical methods such as nuclear magnetic resonance spectrometry or infrared and Raman spectroscopy. All of these analytical sample volumes are accommodated within the "laboratory volume manipulation" range in Figure 1, with 1 pL being the amount of volume that can be manipulated in a single drop from a modern ink-jet printer. Although it is perhaps true that the instrumentally oriented person seldom sees the sampling side of the analytical issue, the science and the statistics are well worked out.
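The column does not write out the TOS expression, but one widely quoted form of Gy's formula for the fundamental sampling error of particulate material is s² = c·f·g·l·d³·(1/M_S − 1/M_L), where d is the top particle size, f a shape factor, g a size-distribution factor, c a composition factor, l a liberation factor, M_S the sample mass, and M_L the lot mass. The sketch below evaluates that form; the default values f = 0.5 and g = 0.25 are commonly used approximations, and every number in the example is hypothetical.

```python
import math

def fse_relative_variance(d_cm, sample_mass_g, lot_mass_g, c, f=0.5, g=0.25, l=1.0):
    """Relative variance of the fundamental sampling error in one commonly
    quoted form of Gy's formula.

    d_cm          : top particle size in cm
    sample_mass_g : mass of the sample taken, in g
    lot_mass_g    : mass of the lot being sampled, in g
    c             : composition factor in g/cm^3 (depends on analyte level and densities)
    f, g, l       : shape, size-distribution, and liberation factors (dimensionless)
    """
    return c * f * g * l * d_cm ** 3 * (1.0 / sample_mass_g - 1.0 / lot_mass_g)

# Hypothetical material: 1-mm particles, 10-g sample from a 1000-kg lot, c = 100 g/cm^3.
base = fse_relative_variance(d_cm=0.1, sample_mass_g=10.0, lot_mass_g=1.0e6, c=100.0)
larger_sample = fse_relative_variance(d_cm=0.1, sample_mass_g=100.0, lot_mass_g=1.0e6, c=100.0)
finer_grind = fse_relative_variance(d_cm=0.05, sample_mass_g=10.0, lot_mass_g=1.0e6, c=100.0)

for label, value in [("base", base), ("10x sample mass", larger_sample), ("half particle size", finer_grind)]:
    print(f"{label}: relative standard deviation = {100 * math.sqrt(value):.2f}%")
```

The cubic dependence on particle size is why grinding is so effective, and the inverse dependence on sample mass is the formal counterpart of the common-sense rule that a larger sample reduces sampling error.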

As mass spectrometers have become more sensitive, and viable sample volumes smaller, requirements for valid sampling have become concomitantly more stringent. The ability to rapidly complete complex measurements on replicate or duplicate samples provides a reduction in TAE, but difficulties in proper handling and storage of small sample volumes usually are unexplored. TOS for ultrasmall sample sizes, as one might suspect from a common sense approach, may need to consider new factors that are muted within larger sample volumes.

In a previous column on "Ethics and Mass Spectrometry" (11), we introduced some of the ethical implications of MS analyses that produce data used in medical diagnoses and connected with individuals. The ethical issues deal with the certification, control, and dissemination of such data. The requirements to minimize total analytical error (TAE) remain stringent. As you might expect, issues of proper sampling have been revisited in such instances. Kayser and colleagues (12) have published a recent article on the theory of sampling and its application in tissue-based diagnosis. The presentation builds upon developments in the understanding of information available in visual images, first in their native analog format, and then later in their digitized form. Interestingly, although computer-aided tools for the manipulation of digital images are formidable (and would presumably aid in their interpretation), one early conclusion of the pathologist groups was that digital images required a longer time to evaluate. The review of sampling theory by Kayser and colleagues is designed to provide the pathologist with more "meaningful" images and, consequently, to reduce the requisite evaluation time. Notably, a conclusion of this overview is that a strict standardization of sampling is the best approach from the diagnostic viewpoint of the pathologist.

The diagnostic study discussed above is provocative in its implications. While the transformation of the discussion from the limited dimensionality of a digital image to the higher dimensionality of MS data can be outlined within the same overall theory of sampling, we should consider whether the analytical chemist should be limited to strictly standardized sampling. However, in the recent past, major advances in sampling have been pioneered by the users of mass spectrometers, and the designers of instruments, who have tried new things. In consequence, such individuals have become engaged in every stage of analysis from sampling to measurement to data interpretation and evaluation. This full engagement defies the compartmentalization of responsibilities that characterizes many other professions. Mass spectrometrists are, at the core, measurement scientists, and (as Laitinen surmised) should therefore be familiar with every aspect of metrology. Proper sampling is an integral part of modern metrology, and the topic will reappear in future installments of this column.

Finally, we consider some sampling considerations especially relevant to instrumental analysis and MS in particular. Of course, it is fundamentally desirable in sampling and any subsequent sample treatment and preparation that the sample components are not changed. Yet, one might sample from an aqueous environment and ultimately produce a more concentrated solution of sample components in a nonaqueous solvent. If the chemical form of the component in the original sample is pH dependent, a change in form is almost inevitable. Similarly, if components in the original sample interact with one another or are bound to partners, then sample cleanup inevitably disturbs that interaction. It has simplistically been considered that the use of a chromatographic separation in conjunction with MS, as in GC–MS or LC–MS, is one final step in the sample cleanup process. While the resultant data set is more manageable, the sample is clearly changed. Indeed, in a technique such as MS-MS, in which that final cleanup step may be absent, the interaction of one sample component with another must always be considered carefully. The changes that we may observe in ionization efficiency in MS-MS, for example, usually are ascribed to "matrix effects," and are a direct consequence of the sample environment, and the presence of other components that make up the matrix. Seen in a more favorable light, the noncovalent interactions that can be studied with a technique such as electrospray ionization MS are a very specific beneficial form of matrix effect.

Kenneth L. Busch was involved in a graduate-school project during which he was tasked to gather samples of roadside dirt, gravel, and grass coated with hazardous oil illegally dumped from a passing tanker truck. He stood there in the hot sun, gloved hands holding a tray of 3-mL sample vials, thinking to himself, "What sort of sampling design is this?" The initial level of confusion has abated only slightly in the 30 years since. This column is solely the work of the author and has no connection to the National Science Foundation. KLB can be reached at wyvernassoc@yahoo.com.

References

(1) M.G. Mellon, Ohio J. Science 61(2), 87–92 (1961).

(2) H.A. Laitinen, Anal. Chem. 43(11), 1353 (1971).

(3) G.L. Donati, M.C. Santos, A.P. Fernandes, and J.A. Nóbrega, Spectrosc. Lett. 41(5), 251–257 (2008).

(4) P. Gy and A.G. Royle, Sampling for Analytical Purposes (John Wiley and Sons, New York, 1998).

(5) J. Pawliszyn, Sampling and Sample Preparation for Field and Laboratory, Fundamentals and New Directions in Sample Preparation, Volume 37 in the Comprehensive Analytical Chemistry series (Elsevier, Amsterdam, 2002).

(6) F. Pitard, Pierre Gy's Sampling Theory and Sampling Practice: Heterogeneity, Sampling Correctness, and Statistical Process Control, 2nd Ed. (CRC Press, New York, 1993).

(7) P.M. Gy, Trends Anal. Chem. 12(2), 67–76 (1995).

(8) K. Heydorn and K. Esbensen, Accreditation and Quality Assurance: Journal for Quality, Comparability and Reliability in Chemical Measurement 9(7), 391–396 (2004).

(9) L. Petersen, P. Minkkinen, and K.H. Esbensen, Chemom. Intell. Lab. Syst. 77(1–2), 261–277 (2005).

(10) K.H. Esbensen and K. Heydorn, Chemom. Intell. Lab. Syst. 74(1), 115–120 (2004).

(11) K.L. Busch, Spectroscopy 23(11), 14–21 (2008).

(12) K. Kayser, H. Schultz, T. Goldmann, J. Görtler, G. Kayser, and E. Vollmer, Diagn. Pathol. 4(6), (2009) DOI: 10.1186/1746-1596-4-6
