Statistics, Part III: Third Foundation

Spectroscopy

Part III of this series discusses the principle of least squares

This column continues our previous discussions dealing with statistics (1,2), and also presents a correction to the second of those columns (2). As usual when we continue the discussion of a topic through more than one column, we continue the numbering of equations, figures, and tables from where we left off.

Third Foundation: The Principle of Least Squares

Background

Probability theory began to be developed around the mid-1600s. Its original primary application was the description of games of chance (gambling), and it was later applied to scientific measurements that contained a random component (noise or error). Scientists, of course, were interested then, as they are now, in determining the systematic relationships between various quantities. Mathematicians had the luxury of dealing with theoretical relationships that, whether a straight line or some curved, nonlinear relationship, were always exact, since the underlying gedankenexperiments describing the systems under study were always noise-free. Experimental scientists (and I will pick on the astronomers here) did not have that luxury; they were stuck with whatever data they measured, which usually contained at least some error. Instruments in those days were not magically better than modern ones! This situation led to difficulties. For example, as most if not all of us now know, an important question back then was whether the orbits of the planets were circles or some other shape, such as ellipses. In an ideal, error-free world (er, universe) it would only be necessary to ascertain the position of a planet at four different times (or five times if the measurements could not be made at equally spaced time intervals) to determine whether the orbit was circular or elliptical.

As all of us now know, however, measurements are not without error. All measured values have some error associated with them, whether or not we know what that error is. (However, as was once pointed out to me, if you actually knew the error of a particular measurement, the determination of the quantity itself would be error-free, since you could make a mathematical correction for the error of that measurement and get an error-free final result.) So, when we say we know the error of a measurement, what we really mean is that we know the distribution of the random errors that affect the measured value of the underlying quantity, which is presumed to have some “true” value that we want to ascertain. Therefore, the question the mathematicians asked themselves was, “How can we determine, from our measurements, what the true value of the physical quantity we’re seeking is?” Having determined the rules governing probabilities by then, they were, in principle at least, able to calculate an estimate of the “truth.” For this estimate, they took a very direct approach: They set up an expression for the probability of the event of interest, then followed the usual procedure of taking the derivative and setting it equal to zero, to find what became known as the maximum likelihood estimator of the property of interest.

In practice, perhaps, not so much. The problem encountered was a practical one. Depending on the complexity of the problem, the expression they had to set up and solve could contain hundreds or even thousands of terms. And this in an era when even Babbage’s mechanical calculators were 200 years or so in the future, and electricity was barely understood, much less harnessed for electronic calculators! Solving the problem by hand was simply computationally overwhelming. To be sure, some problems were dealt with using maximum likelihood methods, but few were important enough to warrant the expenditure of so many man-hours of effort. Even in modern times, only a handful of companies provide software that uses maximum likelihood computations to solve common problems. Today, most computations for problems of common interest use the methods devised by Gauss and his contemporaries and successors.

Carl Friedrich Gauss and the Least-Square Method

Enter Gauss. Carl Friedrich Gauss is considered one of the three greatest mathematicians of all time by some historians of mathematics, and who am I to argue? (The other two are Archimedes and Newton. I wondered why Einstein didn’t make the list, until it dawned on me that he wasn’t a mathematician, he was a physicist.) Biographers list and discuss all the contributions that Gauss made to mathematics, but the one of interest to us here is his development of the least-square method of fitting a line to a set of data. This was not done in a sudden flash of insight. Gauss worked from 1794 to 1798 on the problem of finding another method to fit a line to a set of data that gave the same answer as the maximum likelihood method. Apparently, the least-square method came up early in his investigations, but he couldn’t prove it to be a general rule. He then approached the problem from the other side, starting from the maximum likelihood method: He sought the function and associated error distribution that would give the same fitting line as the maximum likelihood method. This turned out to be, in Gauss’s terminology, the least square residuals method.
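The closed-form least-square line is short enough to write out. The following Python sketch is our own illustration, not a reconstruction of Gauss’s computations; the function name `fit_line` and the example data are hypothetical. It returns the intercept and slope that minimize the sum of squared Y residuals.

```python
def fit_line(x, y):
    """Least-squares straight line: return (intercept, slope) minimizing
    the sum of squared Y residuals, sum((y_i - b0 - b1 * x_i) ** 2)."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need at least two paired (x, y) observations")
    x_mean = sum(x) / n
    y_mean = sum(y) / n
    # Corrected sums of cross-products and of squares about the means
    sxy = sum((xi - x_mean) * (yi - y_mean) for xi, yi in zip(x, y))
    sxx = sum((xi - x_mean) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("all x values are identical; the slope is undefined")
    slope = sxy / sxx
    intercept = y_mean - slope * x_mean  # the fitted line passes through (x_mean, y_mean)
    return intercept, slope


# Noise-free points on y = 1 + 2x are recovered exactly
x = [0.0, 1.0, 2.0, 3.0, 4.0]
y = [1.0 + 2.0 * xi for xi in x]
b0, b1 = fit_line(x, y)
print(f"intercept = {b0:.3f}, slope = {b1:.3f}")
```

Only the vertical (Y) distances enter the sum being minimized, which is why errors in X are excluded by the requirements listed below.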

However, there was a caveat: The errors must be distributed as $\exp[-(x - x_0)^2]$ (up to scaling constants), that is, according to what we now call the normal distribution. There were also a couple of other requirements on the data. People using the least-square method often forget about Gauss’s caveats and the limitations of the method. Then they scratch their heads and say, “I wonder why that happened?”
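The link between normally distributed errors and least squares can be checked numerically. The sketch below is a simplified illustration: it uses a constant model (a single value `mu`) rather than a full line, assumes independent normal errors with a known, common standard deviation `sigma`, and uses hypothetical data. For normal errors, the negative log-likelihood equals a constant plus S/(2σ²), so the value that maximizes the likelihood is the same value that minimizes the sum of squares.

```python
import math


def sum_of_squares(data, mu):
    """Sum of squared residuals of the data about a trial value mu."""
    return sum((y - mu) ** 2 for y in data)


def normal_neg_log_likelihood(data, mu, sigma):
    """Negative log-likelihood of mu, assuming independent normal errors with known sigma."""
    n = len(data)
    constant = n * math.log(sigma * math.sqrt(2.0 * math.pi))
    return constant + sum_of_squares(data, mu) / (2.0 * sigma**2)


def argmin_on_grid(f, lo, hi, steps):
    """Crude grid search: the grid point in [lo, hi] where f is smallest."""
    grid = [lo + (hi - lo) * k / steps for k in range(steps + 1)]
    return min(grid, key=f)


data = [9.8, 10.1, 10.4, 9.9, 10.3]  # hypothetical repeated measurements
sigma = 0.25                         # assumed, known error standard deviation

mu_ml = argmin_on_grid(lambda m: normal_neg_log_likelihood(data, m, sigma), 9.0, 11.0, 2000)
mu_ls = argmin_on_grid(lambda m: sum_of_squares(data, m), 9.0, 11.0, 2000)
print(mu_ml, mu_ls)
```

Both searches select the same grid point, at the sample mean. Under a different error distribution (one with heavier tails, for example), the likelihood no longer reduces to a sum of squares and the two criteria can disagree. That is the substance of Gauss’s caveat.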

In addition to normally distributed errors, the least-square approach has these other requirements:

- Data errors must affect only the Y variable.

- Data errors of the X variable (variables for multivariate data) must be zero.

- The model must be linear in the coefficients.
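“Linear in the coefficients” does not mean the fitted curve must be a straight line. A model such as Y = b0 + b1·X + b2·X² is curved in X but linear in b0, b1, and b2, so ordinary least squares still applies. A model such as Y = b0·exp(b1·X), by contrast, is not linear in b1. The sketch below fits the quadratic model using NumPy’s `numpy.linalg.lstsq`. The data and coefficients are synthetic and hypothetical.

```python
import numpy as np

# Synthetic, noise-free data from y = 0.5 - 1.2x + 0.3x^2 (hypothetical coefficients)
x = np.linspace(0.0, 5.0, 11)
y = 0.5 - 1.2 * x + 0.3 * x**2

# Design matrix with one column per coefficient: [1, x, x^2].
# The model is curved in x, but each coefficient multiplies a known column,
# so the model is linear in the coefficients and ordinary least squares applies.
A = np.column_stack([np.ones_like(x), x, x**2])

coef, _, rank, _ = np.linalg.lstsq(A, y, rcond=None)
print(np.round(coef, 6))
```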

Gauss spent a good portion of his time proving some auxiliary characteristics of the least-square method. He was finally able to prove that when all these conditions are met for the data at hand, models obtained using the least-square method are minimum variance linear unbiased estimators. Note that all the terms stated here are used with their specific technical meanings, as discussed in the column on analysis of variance (ANOVA) (2).

In fact, in the previous column on ANOVA, we saw that the same adjectives, “minimum variance, unbiased estimators,” also apply to variances. Do we know of anything else that is a minimum variance unbiased estimator? Yes, indeed. It turns out that the mean is also a minimum variance unbiased estimator. We will not prove that; it would take too much space and is beyond the scope of this column, which, you’ll recall, is about least squares. We will prove, however, that the mean is a least-square estimator. Our discussion here is an abbreviated version of the proof of that property of the mean presented on page 33 of the book in reference 3.
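We will not prove the minimum-variance property, but a quick simulation can illustrate it. The sketch below draws repeated samples from a standard normal distribution, which is an assumption made for the illustration. It then compares the spread of the sample mean with that of the sample median, another unbiased estimator of the center of a symmetric distribution. The sample size, trial count, and seed are arbitrary choices, and the result is an illustration, not a proof.

```python
import random
import statistics


def sampling_variances(n_per_sample=25, n_trials=4000, seed=1):
    """Variances of the sample mean and sample median over repeated normal samples."""
    rng = random.Random(seed)  # seeded so the run is repeatable
    means, medians = [], []
    for _ in range(n_trials):
        sample = [rng.gauss(0.0, 1.0) for _ in range(n_per_sample)]
        means.append(statistics.fmean(sample))
        medians.append(statistics.median(sample))
    return statistics.variance(means), statistics.variance(medians)


var_mean, var_median = sampling_variances()
print(f"variance of sample means:   {var_mean:.4f}  (theory: 1/25 = 0.0400)")
print(f"variance of sample medians: {var_median:.4f}")
```

For large normal samples, the ratio of the two variances approaches π/2, or about 1.57.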

The least-square property of a number is literally that: Suppose you have a set of data Y containing n numbers and want to find some other, unknown number X. Compute the difference between each member of the data set Y and X, square those differences, and add up all the squares. The least-square value of X is the one that gives the smallest possible value for that sum of squares. Algebraically, we define the sum of squares (which we’ll call S) as in equation 6:

$$S = \sum_{i=1}^{n} (Y_i - X)^2 \qquad [6]$$

where the summation is taken over the n numbers in the set of values of Y. We find the value of X that minimizes S by using the usual mathematical procedure of taking the derivative with respect to X and setting it equal to zero:

dS/dX = Σ2(Yi - X) = 0 [7]

Therefore, from the last two parts of equation 7 we find

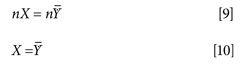

ΣX = ΣYi [8]

Thus, we see that the previously unknown value for the number that has the property of having the minimum possible sum of the squares of the differences from numbers in the data set turns out to be the mean of the data set. This is another way of saying that the mean is a least-square estimator of the data from which it was calculated.
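The same result can be confirmed without calculus. Expanding equation 6 about the mean gives the identity $S(X) = S(\bar{Y}) + n(X - \bar{Y})^2$, because the cross term contains $\sum (Y_i - \bar{Y})$, which is zero. Any departure of X from the mean therefore adds a nonnegative amount to S. The short Python check below uses a hypothetical data set and evaluates both sides of that identity at several trial values of X.

```python
def sum_of_squares(data, x):
    """Equation 6: S = sum over the data set of (Y_i - X)^2."""
    return sum((y - x) ** 2 for y in data)


data = [4.0, 7.0, 5.0, 9.0, 10.0]  # hypothetical data set Y
n = len(data)
y_bar = sum(data) / n              # the mean, i.e., the least-square value of X
s_min = sum_of_squares(data, y_bar)

for x in (y_bar - 1.0, y_bar - 0.1, y_bar, y_bar + 0.1, y_bar + 1.0):
    s = sum_of_squares(data, x)
    # Decomposition about the mean: S(X) = S(mean) + n * (X - mean)^2
    s_decomposed = s_min + n * (x - y_bar) ** 2
    print(f"X = {x:5.2f}   S = {s:7.3f}   S(mean) + n(X - mean)^2 = {s_decomposed:7.3f}")
```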

This collection of properties (minimum variance, consistent, unbiased estimators) is considered by statisticians to be the set of desirable properties of what are known as parametric statistics. We have now seen two cases, the mean and the least-square regression line, where statistics with the least-square property also have those other desirable properties.

Are there any other familiar statistics that also have the least-square property? Indeed, there are. Let’s look at it from this point of view and classify the objects that are least-square estimators according to their dimensionality:

- The mean is a single point, therefore its dimensionality is zero.

- A straight line, as from a simple regression, has a dimensionality of 1 (unity).

This classification implies that the next step up in dimensionality should be an object with a dimensionality of two. What has a dimensionality of two? Just about any function except a straight line would fit that bill, since it would require a plane to hold it. Which familiar functions consist of one or more curved lines? The loadings from a principal component analysis (PCA) (or, as I think of them, the principal components themselves) fit that bill very nicely. The fact that principal components are least-square estimators is demonstrated in an older series of “Chemometrics in Spectroscopy” columns (4–10).

Ordinarily, expositions of principal components are propounded from a basis in matrix mathematics. Except for readers who are completely comfortable with the higher matrix math involved, the matrix-based explanation tends to hide what’s going on “under the hood.” The derivation of principal components is a premier example of this tendency in operation. For example, one of the “classic” texts for introductory chemometrics (11) starts the discussion of principal components on page 198 by presenting the equation Y = XR (where Y, X, and R are all matrix quantities) under the heading “Eigenvector Rotations.” Despite extensive explanations in that section, the concept of least squares is never mentioned. Descriptions of principal components usually invoke the concept of their being “maximum variance” functions. Frankly, this description doesn’t make a whole lot of sense, because variance has no upper bound: It can be inflated to any extent simply by multiplying all the data by a sufficiently large constant.

However, a familiarity with the concept of ANOVA makes the following argument apparent: A given data set composed of fixed numbers has a fixed amount of total variance. ANOVA splits that variance into two or more pieces, the sum of which must equal the total variance of the data. Hence, maximizing one piece of the variance must, of necessity, minimize at least one of the other pieces. From this argument it is seen that “maximum variance” and “least squares” are two sides of the same coin. You can’t have one without the other. Yet this connection is seldom, if ever, explained. To be fair, least squares is briefly mentioned in Sharaf’s book (11), in connection with curve fitting and resolving overlapping signals, but its relationship to principal components is not described.
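The ANOVA-style argument can be checked numerically. Take mean-centered data and any unit-length direction v. The total sum of squares then splits exactly into the part captured by projecting onto v and the residual part left over. The direction that captures the most is the first principal component, obtained here from NumPy’s singular value decomposition, and it is therefore also the direction that leaves the smallest residual sum of squares. The data, the random seed, and the helper name `split_sum_of_squares` are hypothetical.

```python
import numpy as np


def split_sum_of_squares(X, v):
    """Split the total sum of squares of centered X into captured + residual parts for unit vector v."""
    scores = X @ v                      # least-squares coefficient of each row along v
    fitted = np.outer(scores, v)        # rank-1 approximation of X along v
    captured = float(np.sum(fitted**2))
    residual = float(np.sum((X - fitted) ** 2))
    return captured, residual


rng = np.random.default_rng(0)
# Hypothetical correlated data: 50 samples, 4 variables
X = rng.normal(size=(50, 4)) @ rng.normal(size=(4, 4))
X = X - X.mean(axis=0)                  # mean-center each column
total = float(np.sum(X**2))

# First principal component = first right singular vector of the centered data
_, _, vt = np.linalg.svd(X, full_matrices=False)
pc1 = vt[0]
cap_pc1, res_pc1 = split_sum_of_squares(X, pc1)

# Compare with a few random unit directions
for _ in range(3):
    v = rng.normal(size=4)
    v /= np.linalg.norm(v)
    cap, res = split_sum_of_squares(X, v)
    print(f"random v: captured {cap:9.3f} + residual {res:9.3f} = {cap + res:9.3f}")
print(f"PC1:      captured {cap_pc1:9.3f} + residual {res_pc1:9.3f} = {cap_pc1 + res_pc1:9.3f}")
```

Every line printed sums to the same total, and the PC1 line has the largest captured part and the smallest residual.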

Now we can add another item to our list:

- The mean is a single point, therefore its dimensionality is zero.

- A straight line, as from a simple regression, has a dimensionality of 1 (unity).

- The loadings from a principal component analysis have a dimensionality of 2.

As described in the above-referenced articles (4–10), principal component analysis is the way to construct the set of functions that best fit a given set of data according to the least-square criterion. In the context of spectroscopy, the dataset of interest is a collection of spectra. Mathematically, the fitting functions are not restricted to any predefined form; instead, they are defined by the dataset itself. In this sense, principal components are analogous to the mean (which is the least-square fit of a point to a set of data points) and to a regression (which is the least-square fit of a straight line to a set of data): Principal components are the result of the least-squares fitting of a set of curved lines (which lie in a plane) to a set of data.
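To make the idea of fitting data-defined curves to spectra concrete, the sketch below builds a small set of synthetic spectra. They are random mixtures of three hypothetical Gaussian-shaped bands plus a little noise. The sketch mean-centers them and reconstructs them from the first k principal components, obtained by singular value decomposition. The loadings are not chosen in advance; they come from the data. The residual sum of squares falls as components are added, and it equals the sum of the discarded squared singular values. This reflects the known least-squares optimality of the truncated singular value decomposition.

```python
import numpy as np

rng = np.random.default_rng(1)
wavelengths = np.linspace(0.0, 1.0, 200)  # hypothetical, normalized wavelength axis


def band(center, width):
    """Hypothetical Gaussian-shaped absorption band on the wavelength axis."""
    return np.exp(-0.5 * ((wavelengths - center) / width) ** 2)


# 30 synthetic "spectra": random mixtures of three bands plus a little noise
pure = np.vstack([band(0.3, 0.05), band(0.5, 0.08), band(0.7, 0.04)])
amounts = rng.uniform(0.0, 1.0, size=(30, 3))
spectra = amounts @ pure + rng.normal(scale=0.002, size=(30, wavelengths.size))

centered = spectra - spectra.mean(axis=0)
u, s, vt = np.linalg.svd(centered, full_matrices=False)

for k in range(1, 5):
    loadings = vt[:k]                        # k curves (loadings) defined by the data
    scores = centered @ loadings.T           # least-squares coefficients for each spectrum
    reconstruction = scores @ loadings
    rss = float(np.sum((centered - reconstruction) ** 2))
    discarded = float(np.sum(s[k:] ** 2))
    print(f"k = {k}: residual SS = {rss:.6f}   (sum of discarded s^2 = {discarded:.6f})")
```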

Going back to Gauss: Nowadays, many people use least-squares calculations freely, without much concern for whether their data satisfy the requirements specified by Gauss. Several methods of chemical analysis, especially spectroscopic methods, use calibration algorithms that depend, in one way or another, on a least-squares calculation. How can we use this type of algorithm with such blatant disregard for the requirements of its theoretical underpinnings and yet base a successful analytical method on the results? The answer to that question is manifold (or multivariate, if you’ll forgive the expression):

- First, it doesn’t always work successfully. Anyone monitoring one or more of the on-line discussion groups about spectroscopic analysis will have seen questions posted whose substance is “Why doesn’t my model reliably produce accurate results?” While that question has multiple possible answers, most of which are beyond the scope of this article, one of them (which is rarely, if ever, offered) is the possibility that the data used fail to meet one or more of Gauss’s requirements. Evidently, neither the questioner nor any of the responders considers that a possible reason for the difficulties encountered with calibrations.

- Second, Gauss knew better. While the mathematical system he created for modeling the behavior of physical reality specified zero as the allowed error for the X variables, Gauss knew very well what we stated above: All physical measurements are inevitably contaminated with noise and error. So, how could he make such a statement? Clearly, the answer is that Gauss knew what we now know: Zero error, being a physical impossibility, is not a hard requirement on the data. The practical meaning of that requirement is that the error must be small enough to be a good approximation to zero. Or, to put it in statistician’s language, the error must be small enough to be a good estimator of zero. If the data meet that requirement, good modeling performance (that is, reliable analytical accuracy) can be obtained. In modern spectrometers, the noise level can be as small as $10^{-6}$ relative to the signal level, which is a pretty good approximation to zero for the vast majority of situations of practical interest.
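The practical meaning of “small enough to be a good approximation to zero” can be seen in a simulation. Suppose the X values used in a least-squares fit carry random error with variance $\sigma_u^2$. The fitted slope is then, on average, shrunk toward zero by roughly the factor $\sigma_x^2/(\sigma_x^2 + \sigma_u^2)$, where $\sigma_x^2$ is the variance of the true X values. This is the classical errors-in-variables (attenuation) result. The sketch below uses a hypothetical true slope of 2, true X values with unit variance, and Y values measured without error, so that only the X error is at work.

```python
import random


def ls_slope(x, y):
    """Ordinary least-squares slope of y on x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx


def slope_with_x_error(x_noise_sd, n=20000, true_slope=2.0, seed=7):
    """Fit y on an error-contaminated x; y itself is error-free here."""
    rng = random.Random(seed)
    x_true = [rng.gauss(0.0, 1.0) for _ in range(n)]          # sigma_x = 1
    y = [true_slope * xt for xt in x_true]                      # errors only in X
    x_measured = [xt + rng.gauss(0.0, x_noise_sd) for xt in x_true]
    return ls_slope(x_measured, y)


for sd in (1.0, 0.3, 0.1, 0.01, 0.0):
    expected = 2.0 / (1.0 + sd**2)  # attenuation factor with sigma_x = 1
    print(f"X-error sd {sd:5.2f}: fitted slope {slope_with_x_error(sd):.4f}  (approx. expected {expected:.4f})")
```

For small X errors, the bias shrinks roughly with the square of the ratio of the X error to the spread of the true X values. This is why errors that are tiny relative to that spread behave, for practical purposes, like zero.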

And that is why modern analytical spectroscopy works.

From the above examples we can also see that the concept of least squares joins the concepts of probability and ANOVA as the fundamental concepts that underlie several of the most common and important operations we perform.

Erratum

We can’t get away with anything! Our sharp-eyed readers insist we do everything correctly, and let us know when we make a mistake. In the case at hand, our previous column dealing with the second foundation of chemometrics, analysis of variance (ANOVA) (2), contained a few errors. The numbers in the data tables were all correct, but an incorrect value was used when performing the computations on those numbers. Specifically, when computing the F-value from the data in Table I, near the top of the middle column on page 36, we computed

1/n * Wvar = 1.1591/20 = 0.0580

Unfortunately, the number used for Wvar (1.1591) was actually the value for SDW in Table I. The correct value for Wvar is 1.3435, as can be seen in the table, so that 1/n * Wvar = 1.3435/20 = 0.0672. Using this value to compute F gives F = 1.815.

The same error occurred in the computations for the data in Table II. The correct value for F from the data in Table II is F = 0.2308/0.0672 = 3.435. Fortunately, this numerical error did not change the outcomes of the F-tests: The F-value from the first test is still not statistically significant, and the F-value from the second test still is. We thank George Vickers and Kaho Kwok for their sharp eyes (and sharp pencils) in detecting this error and bringing it to our attention.
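For readers checking the erratum, the ratios can be recomputed directly from the values quoted above (the two candidate values of Wvar, n = 20, and the Table II quantities). The sketch below uses only those quoted numbers, not the full tables. It does not recompute F = 1.815 for Table I, because that test’s numerator is not quoted here.

```python
n = 20
w_var_used = 1.1591     # value mistakenly used (actually SDW from Table I)
w_var_correct = 1.3435  # correct Wvar from Table I

print(f"incorrect: {w_var_used} / {n} = {w_var_used / n:.4f}")
print(f"corrected: {w_var_correct} / {n} = {w_var_correct / n:.4f}")

# Corrected F for Table II, using the values quoted in the erratum
f_table_ii = 0.2308 / 0.0672
print(f"F (Table II) = {f_table_ii:.3f}")
```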

References

- H. Mark and J. Workman, Spectroscopy 30(10), 26–31 (2015).

- H. Mark and J. Workman, Spectroscopy 31(2), 28–38 (2016).

- H. Mark and J. Workman, Statistics in Spectroscopy, 2nd Ed. (Academic Press, New York, 2003).

- H. Mark and J. Workman, Spectroscopy 22(9), 20–29 (2007).

- H. Mark and J. Workman, Spectroscopy 23(2), 30–37 (2008).

- H. Mark and J. Workman, Spectroscopy 23(5), 14–17 (2008).

- H. Mark and J. Workman, Spectroscopy 23(6), 22–24 (2008).

- H. Mark and J. Workman, Spectroscopy 23(10), 24–29 (2008).

- H. Mark and J. Workman, Spectroscopy 24(2), 16–26 (2009).

- H. Mark and J. Workman, Spectroscopy 24(5), 14–15 (2009).

- M. Sharaf, D. Illman, and B. Kowalski, Chemometrics (John Wiley & Sons, New York, 1986).

Howard Mark serves on the Editorial Advisory Board of Spectroscopy and runs a consulting service, Mark Electronics, in Suffern, New York. Direct correspondence to: SpectroscopyEdit@UBM.com

Jerome Workman Jr. serves on the Editorial Advisory Board of Spectroscopy and is the Executive Vice President of Engineering at Unity Scientific, LLC, in Milford, Massachusetts. He is also an adjunct professor at U.S. National University in La Jolla, California, and Liberty University in Lynchburg, Virginia.
