Maxwell's Equations, Part I: History

The historical developments leading up to Maxwell's equations are discussed in Part I of this series.

Maxwell's equations for electromagnetism are the foundation of our understanding of how light behaves. There are multiple versions, depending on whether the setting is a vacuum, whether charge or matter is present, whether the system is relativistic or quantum, and whether the equations are written in differential or integral form. Here, I inaugurate a multipart series discussing each of Maxwell's equations. The goal is for this series to become the definitive explanation of these rules (I know, a heady goal!). I trust readers will let me know if I succeed. In this first installment, we will discuss a little bit of historical development as a prelude to the introduction of the laws themselves.

One of the pinnacles of classical science was the development of an understanding of electricity and magnetism, which saw its culmination in the announcement of some mathematical relationships by James Clerk Maxwell in the early 1860s. The impact of these relationships is difficult to minimize. They provided a theoretical description of light as an electromagnetic wave, implying a wave medium (the ether), which inspired Michelson and Morley, whose failure inspired Einstein, who was also inspired by Planck and who (among others) ushered in modern science as a replacement for classical science. Again, it's difficult to minimize the impact of these equations, now known as Maxwell's equations.

Here I will discuss the history of electricity and magnetism, going through the early 19th-century discoveries that led to Maxwell's work. In the next installment, I will focus on the first of Maxwell's equations. In future installments, I will discuss each successive equation in turn.

History (Ancient)

Electricity has been experienced since the dawn of humanity from two rather disparate sources: lightning and fish. Ancient Greeks, Romans, Arabs, and Egyptians recorded the effect that certain aquatic life caused when touched, and who could ignore the fantastic light shows that accompanied certain storms (Figure 1)? Unfortunately, although the ancients could describe the events, they could not explain exactly what was happening.

Figure 1: One of the first pictures of a lightning strike, taken by William N. Jennings around 1882.

The ancient Greeks noticed that if a sample of amber (Greek elektron) was rubbed with animal fur, it would attract small, light objects like feathers. Some, like Thales of Miletos, related this property to that of lodestone, a natural magnetic material that attracted small pieces of iron. (A relationship between electricity and magnetism would be reawakened more than 2000 years later.) Because the samples of this rock came from the nearby town of Magnesia (currently located in southern Thessaly, in central Greece), these stones ultimately were called magnets. Note that magnetism appears to have been recognized at about the same time as static electricity effects (Thales lived in the 7th and 6th centuries BCE [before common era]), so both phenomena were known, but not understood. Chinese fortune tellers also were utilizing lodestones as early as 100 BCE. For the most part, however, these properties of rubbed amber and this particular rock remained novelties.

By 1100 CE (common era), the Chinese were using spoon-shaped pieces of lodestone as rudimentary compasses, a practice that spread quickly to the Arabs and to Europe. In 1269, Frenchman Petrus Peregrinus used the word "pole" for the first time to describe the ends of a lodestone that point in particular directions. Christopher Columbus apparently had a simple form of a compass in his voyages to the New World, as did Vasco da Gama and Magellan. So it seems that Europeans had compasses by the 1500s; indeed, the great ocean voyages by the explorers likely would have been extremely difficult without a working compass (Figure 2). In books published in 1550 and 1557, the Italian mathematician Gerolamo Cardano argued that the attractions caused by lodestones and the attractions of small objects to rubbed amber were caused by different phenomena, possibly the first time this was explicitly pointed out. (His proposed mechanisms, however, were typical of much 16th-century physical science: wrong.)

Figure 2: Compasses like the one drawn here were used by 16th-century maritime explorers to sail around the world, likely the first applied use of magnetism.

In 1600, English physician and natural philosopher William Gilbert published De Magnete, Magneticisque Corporibus, et de Magno Magnete Tellure (On the Magnet and Magnetic Bodies, and on the Great Magnet the Earth) in which he became the first to make the distinction between attraction owing to magnetism and attraction caused by rubbed elektron. For example, Gilbert argued that elektron lost its attracting ability with heat, but lodestone did not. (Here is another example of a person being correct, but for the wrong reason.) He proposed that rubbing removed a substance he termed effluvium and it was the return of the lost effluvium to the object that caused attraction. Gilbert also introduced the Latinized word electricus, meaning like amber in its ability to attract. Note also what the title of Gilbert's book implies — the Earth is a "great magnet," an idea that only arguably originated with him, as the book was poorly referenced. The word electricity itself was first used by English author Thomas Browne in a book in 1646.

In the late 1600s and early 1700s, Englishman Stephen Gray carried out some experiments with electricity and was among the first to distinguish what we now call conductors and insulators. In the course of his experiments, Gray was apparently the first to suggest logically that the sparks he was generating were the same thing as lightning, but on a much smaller scale. Interestingly, announcements of Gray's discoveries were stymied by none other than Isaac Newton, who was having a dispute with other scientists (with whom Gray was associated, making him guilty by association) and, in his position of president of the Royal Society, impeded these scientists' abilities to publish their work. (Curiously, despite his advances in other areas, Newton himself did not make any major and lasting contributions to the understanding of electricity or magnetism. Gray's work was found documented in letters sent to the Royal Society after Newton had died, so his work has been historically documented.) Inspired by Gray's experiments, French chemist Charles du Fay also experimented and found that objects other than amber could be altered. However, du Fay noted that in some cases, altered objects attract while other altered objects repel. He concluded that there were two types of effluvium, which he termed vitreous (because rubbed glass exhibited one behavior) and resinous (because rubbed amber exhibited the other behavior). This was the first inkling that electricity comes as two kinds.

French mathematician and scientist René Descartes weighed in with his book Principia Philosophiae, published in 1644. His ideas were strictly mechanical: Magnetic effects were caused by the passage of tiny particles emanating from the magnetic material and passing through the luminiferous ether. Unfortunately, Descartes proposed a variety of mechanisms for how magnets worked, rather than a single one, which made his ideas arguable and inconsistent.

The Leyden jar (Figure 3) was invented simultaneously in 1745 by Ewald von Kleist and Pieter van Musschenbrök. Its name derives from the University of Leyden (also spelled Leiden) in the Netherlands, where Musschenbrök worked. It was the first modern example of a condenser or capacitor. The Leyden jar allowed for a significant (at that time) amount of charge to be stored for long periods of time, letting researchers experiment with electricity and see its effects more clearly. Advances were quick, given the ready source of what we now know as static electricity. Among other discoveries was the demonstration by Alessandro Volta that materials could be electrified by induction, in addition to direct contact. This, as well as other experiments, gave rise to the idea that electricity was some sort of fluid that could pass from one object to another, in a similar way that light or heat (caloric in those days) could transfer from one object to another. The fact that many conductors of heat were also good conductors of electricity seemed to support the notion that both effects were caused by a "fluid" of some sort. du Fay's idea of vitreous and resinous substances, mentioned above, echoes the current feelings at the time.

Figure 3: Example of an early Leyden jar, which was composed of a glass jar coated with metal foil on the outside (labeled A) and the inside (B).

Enter Benjamin Franklin, polymath. It would take (and has taken) whole books to chronicle Franklin's contributions to a variety of topics, but here we will focus on his work with electricity. Based on his own experiments, Franklin rejected du Fay's "two fluid" idea of electricity and instead proposed a "one fluid" idea, arbitrarily defining one object "negative" if it lost that fluid and "positive" if it gained fluid. (We now recognize his idea as prescient, but backwards.) As part of this idea, Franklin enunciated an early concept of the law of conservation of charge. In noting that pointed conductors lose electricity faster than blunt ones, Franklin invented the lightning rod in 1749. Although metal had been used in the past to adorn the tops of buildings, Franklin's invention appears to be the first time such a construction was used explicitly to draw a lightning strike and spare the building itself. Curiously, there was some ecclesiastical resistance to the lightning rod because lightning was deemed by many to be a sign of divine intervention and the use of a lightning rod was considered by some to be an attempt to control a deity. The danger of such attitudes was illustrated in 1769 when the rodless Church of San Nazaro in Brescia, Italy (in Lombardy, the northern part of the country) was struck by lightning, igniting approximately 100 tons of gunpowder stored in the church. The resulting explosion was said to have killed 3000 people and destroyed one-sixth of the city.

In 1752, Franklin performed his apocryphal experiment with a kite, a key, and a thunderstorm. The word apocryphal is used intentionally: Different sources have disputed accounts of what really happened. (Modern analyses suggest that performing the elementary-school portrayal of the experiment definitely would have killed Franklin.) What is generally not in dispute, though, is the result: Lightning was just electricity, not some divine sign. As mundane as it seems to us today, Franklin's demonstration that lightning was a natural phenomenon was likely as important as Copernicus' heliocentric ideas were in demonstrating the lack of a privileged, heaven-mandated position for mankind in the universe.

History (More Recent)

Up to this point, most demonstrations of electric and magnetic effects were qualitative, not quantitative. This began to change in 1779, when French physicist Charles-Augustin de Coulomb published Théorie des machines simples (Theory of Simple Machines). To refine this work, through the early 1780s Coulomb constructed a very fine torsion balance with which he could measure the forces caused by electrical charges. Ultimately, Coulomb proposed that electricity behaved as if it were tiny particles of matter, acting in a way similar to Newton's law of gravity, which had been known since 1687. We now know this idea as Coulomb's law. If the charge on one body is q1 and the charge on another body is q2 and the bodies are a distance r apart, then the force F between the bodies is given by equation 1:

$$F = k\,\frac{q_1 q_2}{r^2} \qquad (1)$$

where k is a constant. In 1785, Coulomb also proposed a similar expression for magnetism, but curiously did not relate the two phenomena. As seminal and correct as this advance was, Coulomb still adhered to a "two fluid" idea of electricity — and magnetism, as it turned out. (Interestingly, about 100 years later, James Clerk Maxwell, about whom we will have more to say, published papers from reclusive scientist Henry Cavendish, demonstrating that Cavendish had performed similar electrical experiments at about the same time but never published them. If he had, we might be referring to this equation as Cavendish's law.) Nonetheless, through the 1780s, Coulomb's work established all of the rules of static (that is, nonmoving) electrical charge.
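
As a rough numerical illustration of the inverse-square behavior in Coulomb's law, here is a minimal Python sketch. The function name, the SI value used for the constant k, and the sample charges and distances are all assumptions chosen for illustration; none of them come from Coulomb's own work.

```python
# Illustrative sketch of Coulomb's law, F = k * q1 * q2 / r**2.
# K is the modern SI value of the constant (N*m^2/C^2), rounded; an assumption
# for this example, since the text only says "k is a constant".
K = 8.9875e9


def coulomb_force(q1, q2, r):
    """Return the signed force in newtons between two point charges.

    q1, q2 are charges in coulombs and r is their separation in metres.
    A positive result means repulsion, a negative result means attraction.
    """
    if r <= 0:
        raise ValueError("separation r must be positive")
    return K * q1 * q2 / r**2


# Two hypothetical 1-microcoulomb charges, first 10 cm apart, then 20 cm apart.
f_near = coulomb_force(1e-6, 1e-6, 0.10)
f_far = coulomb_force(1e-6, 1e-6, 0.20)

print("force at 10 cm:", f_near)
print("force at 20 cm:", f_far)
print("ratio near/far:", f_near / f_far)
```

Doubling the separation cuts the force to one quarter, which is the same distance dependence that Newton's law of gravity shows. Unlike gravity, the sign of the result changes when the two charges have opposite signs.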

The next advances had to do with moving charges, but it was a while before people realized that movement was involved. Luigi Galvani was an Italian doctor who studied animal anatomy at the University of Bologna. In 1771, while dissecting a frog as part of an experiment in static electricity, he noticed a small spark between the scalpel and the frog leg he was cutting into, and the immediate jerking of the leg itself. Over the next few decades, Galvani performed a host of experiments trying to pin down the conditions and reasons for this muscle action. The use of electricity from a generating electric machine or a Leyden jar caused a frog leg to jerk, as did contact with two different metals at once, like iron and copper. Galvani eventually proposed that the muscles in the leg were acting like a "fleshy Leyden jar" that contained some residual electrical fluid he called animal electricity. In the 1790s, he printed pamphlets and passed them around to his colleagues, describing his experiments and his interpretation.

One of the recipients of Galvani's pamphlets was Alessandro Volta, a professor at the University of Pavia, about 200 km northwest of Bologna. Volta was skeptical of Galvani's work, but successfully repeated the frog-leg experiments. Volta took the work further, positioning metallic probes on nerve tissue exclusively and bypassing the muscle. He made the crucial observation that some sort of induced activity was only produced if two different metals were in contact with the animal tissue. From these observations, Volta proposed that the metals were the source of the electrical action, not the animal tissue itself — the muscle was serving as the detector of electrical effects, not the source.

In 1800, Volta produced a stack of two different metals soaked in brine that supplied a steady flow of electricity (Figure 4). The construction became known as a voltaic pile, but we know it today as a battery. Instead of a sudden spark, which was how electricity was produced in the past, here was a construction that provided a steady flow, or current, of electricity. The development of the voltaic pile made new experiments in electricity possible.

Figure 4: Diagram of Volta's first pile that produced a steady supply of electricity. We know it now as a battery.

Advances came swiftly with this new, easily constructed pile. Water was electrolyzed, not for the first time but for the first time systematically, by Nicholson and Carlisle in England. English chemist Humphry Davy, already well known for his discoveries, proposed that if electricity were truly caused by chemical reactions (as many chemists at the time had, perhaps chauvinistically, thought), then perhaps electricity could cause chemical reactions in return. He was correct, and in 1807, he produced elemental potassium and sodium electrochemically for the first time. The electrical nature of chemistry then was realized and its ramifications continue today. Davy followed up in 1808 by isolating the elements magnesium, calcium, strontium, and barium, all for the first time and all by electricity, and in 1810 he established that chlorine is an element.

Davy's greatest discovery, though, was not an element. Arguably, Davy's greatest discovery was Michael Faraday. But more on Faraday later.

Much of the historical development so far has focused on electrical phenomena. Where has magnetism been? Actually, it's been here all along, but not much new has been developed. This changed in 1820. Hans Christian Oersted (more properly, Ørsted) was a Danish physicist who was a disciple of the metaphysics of Immanuel Kant. Given the recently demonstrated connection between electricity and chemistry, Oersted was certain that there were other fundamental physical connections in nature.

In the course of a lecture in April 1820, Oersted passed an electrical current from a voltaic pile through a wire that was placed parallel to a compass needle. The needle was deflected. Later experiments (especially by Ampère) demonstrated that, with a strong enough current, a magnetized compass needle will orient itself perpendicular to the direction of the current. If the current is reversed, the needle points in the opposite direction. Here was the first definitive demonstration that electricity and magnetism affected each other: electromagnetism.

Detailed follow-up studies by Ampère in the 1820s quantified the relationship a bit. Ampère demonstrated that the magnetic effect was circular about a current-carrying wire and, more importantly, that two wires with current moving in them attracted and repelled each other as if they were magnets. Magnets, Ampère argued, are nothing more than moving electricity. The development of electromagnets at this time freed scientists from finding rare lodestones and allowed them to study magnetic effects using only coiled wires as the source of magnetism. Around this time, Biot and Savart's studies led to the law named after them, relating the intensity of the magnetic effect to the inverse square of the distance from the electromagnet, a parallel to Coulomb's law.

In 1827, Georg Ohm announced what became known as Ohm's law, a relationship between the voltage of a pile and the current passing through the system. As simple as Ohm's law is (V = IR, in modern usage), it was contested hotly in his native Germany, probably for political reasons. Ohm's law became a cornerstone of electrical circuitry. It's uncertain how the term current became applied to the movement of electricity. Doubtless, the term arose during the time that electricity was thought to be a fluid, but it has been difficult to find the initial usage of the term. In any case, the term was well-established by the time Sir William Robert Grove wrote The Correlation of Physical Forces in 1846, which was a summary of what was known about the phenomenon of electricity to date.

Faraday

Probably no single person set the stage more for a mathematical treatment of electricity and magnetism than Michael Faraday (Figure 5). Inspired by Oersted's work, in 1821, Faraday constructed a simple device that allowed a wire with a current running through it to turn around a permanent magnet, and conversely, a permanent magnet to turn around a wire that had a current running through it. Faraday had, in fact, constructed the first rudimentary motor. What Faraday's motor demonstrated experimentally is that the forces of interaction between the current-containing wire and the magnet were circular in nature, not radial like gravity.

Figure 5: Michael Faraday was not well-versed in mathematics, but he was a first-rate experimentalist who made major advances in physics and chemistry.

By now it was clear that electrical current could generate magnetism; but what about the other way around — could magnetism generate electricity? An initial attempt to accomplish this was tried in 1824 by French scientist François Arago, who used a spinning copper disk to deflect a magnetized needle. In 1825, Faraday tried to repeat Arago's work, using an iron ring instead of a metal disk and wrapping coils of wire on either side, one coil attached to a battery and one to a galvanometer. In doing so, Faraday constructed the first transformer, but the galvanometer did not register the presence of a current. However, Faraday did note a slight jump of the galvanometer's needle. In 1831, Faraday passed a magnet through a coil of wire attached to a galvanometer, and the galvanometer registered, but only if the magnet was moving. When the magnet was halted — even when it was inside the coil — the galvanometer registered zero.

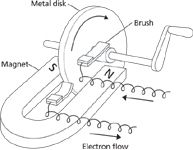

Faraday understood: It wasn't the presence of a magnetic field that caused an electrical current, it was a change in the magnetic field that caused a current. It did not matter what moved; a moving magnet can induce current in a stationary wire, or a moving wire can get a current induced by moving it across a stationary magnet. Faraday also realized that wire wasn't necessary. Taking a page from Arago, Faraday constructed a generator of electricity using a copper disk that rotated through the poles of a permanent magnet (Figure 6). Faraday used this to generate a continuous source of electricity, essentially converting mechanical motion to electrical motion. Faraday invented the dynamo, the basis of the electric industry even today.
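
Faraday's observation that only a changing magnetic field produces a current can be sketched numerically. In modern notation, which this installment does not introduce, the induced electromotive force in a coil of N turns is written EMF = -N dΦ/dt, where Φ is the magnetic flux through the coil. The short Python sketch below approximates that derivative with finite differences; the flux values, time step, and number of turns are hypothetical numbers picked only to mimic a magnet being pushed into a coil and then halted.

```python
def induced_emf(flux_samples, dt, turns=1):
    """Approximate EMF = -N * dPhi/dt between successive flux samples.

    flux_samples: magnetic flux through the coil (webers) at equal time steps.
    dt: time between samples (seconds).
    turns: number of turns N in the coil.
    Returns one EMF value (volts) per interval between samples.
    """
    return [
        -turns * (later - earlier) / dt
        for earlier, later in zip(flux_samples, flux_samples[1:])
    ]


# Hypothetical flux readings: the magnet moves into the coil for four steps,
# then sits motionless inside it for three more steps.
flux = [0.0, 0.001, 0.002, 0.003, 0.004, 0.004, 0.004, 0.004]

emf = induced_emf(flux, dt=0.1, turns=100)

for step, value in enumerate(emf):
    state = "moving" if step < 4 else "halted"
    print(step, state, value)
```

While the flux is changing the computed EMF is nonzero, and once the magnet halts the EMF drops to zero even though the flux through the coil is still large. That mirrors the behavior of Faraday's galvanometer.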

Figure 6: Diagram of Faraday's original dynamo, which generated electricity from magnetism.

After dealing with some health issues through the 1830s, Faraday was back at work in the mid-1840s. Two decades earlier, Faraday had investigated the effect of magnetic fields on light, but got nowhere. Now, with stronger electromagnets available, Faraday went back to that investigation on the advice of William Thomson, Lord Kelvin. This time, with better equipment, Faraday noticed that the plane of plane-polarized light was rotated when a magnetic field was applied to a piece of flint glass with the light passing through it. The magnetic field had to be oriented along the direction of the light's propagation. This effect, known now as the Faraday effect or Faraday rotation, convinced Faraday of several things. First, light and magnetism were related. Second, magnetic effects were universal and not just confined to permanent magnets or electromagnets; after all, light is associated with all matter, so why not magnetism? In late 1845, Faraday coined the term diamagnetism to describe the behavior of materials that are not attracted, and actually slightly repelled, by a magnetic field. (The repulsion of a diamagnetic material by a magnetic field is typically much weaker than the attraction of materials now known as paramagnetic, which was one reason magnetic repulsion was not recognized widely until then.)

Finally, this finding reinforced in Faraday the concept of magnetic fields and their importance. In physics, a field is nothing more than a physical property whose value depends on its position in three-dimensional space. The temperature of a sample of matter, for example, is a field, as is the pressure of a gas. Temperature and pressure are examples of scalar fields, which have magnitude but no direction (vector fields, like magnetic fields, have magnitude and direction). The directional character of the magnetic field was first demonstrated in the 13th century, when Petrus Peregrinus used small needles to map out the lines of force around a magnet and was able to place the needles in curved lines that terminated in the ends of the magnet, which was how he devised the concept of pole mentioned earlier. An attempt to describe a magnetic field in terms of magnetic charges analogous to electrical charges was made by the French mathematician–physicist Siméon-Denis Poisson in 1824, and while successful in some aspects, it was based on the flawed concept of "magnetic charges." However, development of the Biot-Savart law and some work by Ampère demonstrated that the concept of a field was useful in describing magnetic effects. Faraday's work on magnetism in the 1840s reinforced to him that the magnetic field was the important quantity, not the material (whether natural or electronic) that produced the field of forces that could act on other objects and produce electricity.

As crucial as Faraday's advances were for understanding electricity and magnetism, Faraday himself was not a mathematical man. He had a relatively poor grasp of the theory that modern science requires to explain observed phenomena. Thus, although he made crucial experimental advancements in the understanding of electricity and magnetism, he made little contribution to the theoretical understanding of these phenomena. That chore awaited others.

In the next installment, we will review some of the mathematics used to express Maxwell's laws and see what Maxwell's first law means.

David W. Ball is a professor of chemistry at Cleveland State University in Ohio. Many of his "Baseline" columns have been reprinted in book form by SPIE Press as The Basics of Spectroscopy, available through the SPIE Web Bookstore at www.spie.org. His book Field Guide to Spectroscopy was published in May 2006 and is available from SPIE Press. He can be reached at d.ball@csuohio.edu; his website is academic.csuohio.edu/ball.
