Spreadsheets: A Sound Foundation for a Lack of Data Integrity?

Spreadsheets are often used to perform GMP-related calculations, but can lead to serious problems and unnecessary risk. We explain why the use of spreadsheets is heavily discouraged in a regulated laboratory environment.

Universally available on desktop computers, easy to use, and universally loved by analytical chemists, spreadsheets are used to collate lists of instruments and perform a multitude of calculations in many regulated laboratories. But spreadsheets also present an auditing and inspecting opportunity to find abundant instances of noncompliance and can lead to problems and unnecessary risk. Why is the use of spreadsheets heavily discouraged in a regulated laboratory?

If you turn on any workstationin your laboratory, you will probably find a shortcut to a spreadsheet program. When presented with this icon of temptation and seduction, a willing analyst is happy to open Pandora’s spreadsheet box and navigate between Scylla and Charybdis. Charybdis is the whirlpool of quality assurance where you have the challenge of specifying, building, and validating each spreadsheet template. Alternatively, steer the course to Scylla, the six-headed monster of regulatory noncompliance. With Scylla, if the unvalidated spreadsheet you have written and used is found, you are dead.

Spreadsheets are often used to perform GMP–related calculations, trend data, plan calibration and qualification work, and manage laboratory assets. I have even seen spreadsheets used to document the test steps for validating software—and the least said about that the better. This article discusses why spreadsheets should be eliminated as much as possible from performing GXP work to increase business efficiency and reduce regulatory risk. Note that business efficiency came before reducing regulatory risk in the last sentence. This column is dedicated to all spreadsheet lovers, or in the words of that great analytical chemist, William Shakespeare, “I come to bury spreadsheets, not to praise them.”

Regulatory Problems with Spreadsheets

One of many companies that have sailed into Scylla and been cited for noncompliant spreadsheets is Tismore Health, as seen in a Federal Drug Administration (FDA) warning letter in December 2020 (1). I hope this does not happen in your laboratory. Citation 3 is against 21 CFR 211.160(b) (2), although I would have raised the citation against 211.68(b) for failing to verify formulas. However, that is the problem with the FDA’s last century GMP regulations:

Your firm failed to establish laboratory controls that include scientifically sound and appropriate specifications, standards, sampling plans and test procedures designed to assure that components, drug products conform to appropriate standards of identity, strength, quality, and purity (21 CFR 211.160(b)).

Your firm failed to validate the spreadsheet used to perform the assay calculation for your <redacted>. Your procedures lacked guidance on how to check and manually verify the calculation sheets. During the inspection, our investigator identified a calculation error within the spreadsheet. The incorrect formula for averaging the Internal Standard peak area was used (1).

If you really want to descend into the abyss of compliance hell, let an inspector find an error in a spreadsheet. You will receive a comment like this: “There is no assurance that the associated assay results recorded are reliable and accurate.”

It is not the best situation having an inspector that thinks that all of your data are garbage. Also, if there is one calculation problem in unvalidated spreadsheets, then there are bound to be more, because there is a total lack of control when using spreadsheets.

During the review, you identified another error within your spreadsheets. The assay test result for <redacted> batch <redacted> was incorrect due to a transcription entry error for active peak area. Your firm used a new spreadsheet and entered the correct active peak area. The result was recalculated, and the final result was reported. The product had already been released with test results using the incorrect calculation although the recalculated test result was still within specification. You have committed to manually check calculations until the spreadsheet has been validated (1).

In the last sentence, we see a way to resolve the problem: You should manually check all calculations until the spreadsheet is validated. How useful are the unvalidated spreadsheets now? Read the full warning letter to see the extent of the remediation required by FDA (1). However, the approach taken by the company to manually check calculations (which is error prone in itself) misses the point about spreadsheets: They are a hybrid system that has major business process efficiency issues as well as regulatory compliance problems, as we shall see later in this column.

Epic Spreadsheet Failures

Here goes a list of the spreadsheet screw-ups. That well-known pharmaceutical company, Enron Corporation, used spreadsheets for a number of activities, and after the company went bankrupt, more than 15,000 spreadsheets were analyzed from the archive of 65,000 e-mails by two academics, Felienne Hermans and Emerson Murphy-Hill (3). Their main conclusions were:

- Out of all the spreadsheets used, 76% of them used the same 15 functions.

- In regards to spreadsheets with at least one formula, 24% of them contained an error.

- Spreadsheets were a frequent topic of e-mail conversation with 10% of emails either referring to or sending spreadsheets and frequently discussing errors in and updates to spreadsheets.

Just like in your laboratory! In fact, if spreadsheets are well embedded into your culture, a spreadsheet developed in the morning could be the department standard by the afternoon. If so, I’m assuming you are steering a course for Scylla rather than Charybdis.

In a study that had a major impact on governments, two eminent economists, Carmen Reinhart and Kenneth Rogoff, published an article “Growth in a Time of Debt,” which concluded that counties with a high amount of debt to Gross Domestic Product (GDP) (>90%) have slow economic growth. This resulted in austere policies to reduce debt and simulate growth in some countries, including the United Kingdom. Unfortunately for the two intrepid economists, they published in a non-peer reviewed part of a journal and used a spreadsheet to perform their calculations. And guess what happened? There were a few problems with the spreadsheet and the major conclusion was reversed (see Wikipedia for more details).

If you really want to understand the scope of spreadsheet disasters, take a look at Ray Panko’s website (www.panko.com). Panko is a University of Hawaii academic who has studied spreadsheets and errors associated with them for about 25 years. On his website, there is a page on spreadsheet errors that states the following (4):

- Humans are very accurate when we work. When we create a spreadsheet formula, we are correct 95 to 99% of the time.

- Unfortunately, we are not as good at finding errors. When we look diligently for errors, we only find 40 to 90%, and finding 90% is rare.

We would like to know how effective we are at finding errors when we inspect a spreadsheet. This is not just an “academic” concern. If our spreadsheets have as many errors as they seem to have, then we must test them more. One way to do this is to inspect them cell by cell. Many people believe that if we are careful enough, and if we spend enough time, we can find all of the errors in a spreadsheet. However, this ability is not realistic. If detection rates are too low, then a team inspection is necessary to catch 80 to 90% of the errors (4).

The inability to find and resolve spreadsheet errors is a worrying problem. How should we resolve it? The obvious one is that more resources need to be put into specifying the spreadsheet and how the formulas are built and tested. As Panko’s website suggests, more effort should be placed in checking the formulas. However, there is a simpler way, which is explained later on in this article.

Exterminate, Exterminate…

In a section guaranteed to ensure that many readers will want to stick large pins into my effigy, I am advocating that spreadsheets be exterminated from use in a regulatory laboratory. This is not because a spreadsheet application is bad per se, but in a regulated environment it is a recipe for potential disaster, for many reasons, as outlined in Table I.

Are You a Masochist?

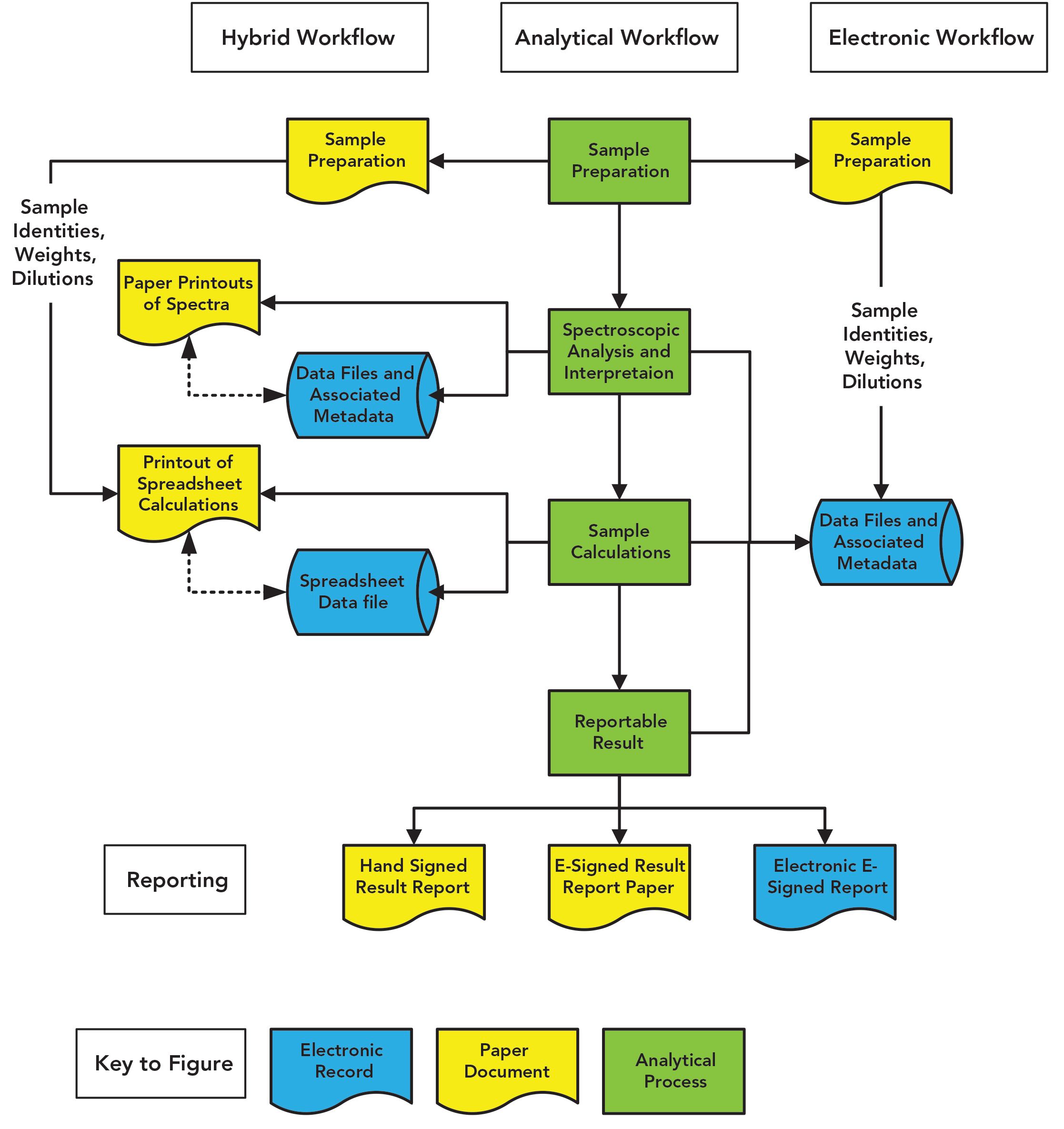

I’m not interested in your personal life, but look at your business process in the laboratory when you are using a spreadsheet. Figure 1 shows the tasks of a general quantitative analytical process in the middle in green. If you use a spreadsheet, you’ll follow the left hand side of Figure 1: Prepare the sample, analyze it along with standards, interpret the data in the spectrometer data system, print the results, manually type data into your (hopefully) validated spreadsheet, print the results out, and then type the result into a laboratory information management system (LIMS) or similar informatics application. But just look at the mess of the process and the number of records that you have created. You are a laboratory masochist.

Figure 1: Comparison of a spectroscopic analysis using a spreadsheet with an electronic process incorporating calculations in the spectrometer software.

Second Person Review Nightmare

Job done? No, enter the poor sap who is the second person reviewer. Just look at the mess this person has to review: the number of transfers between computerized systems, and the need to check for transcription errors each time data are entered manually into a different computerized system with checks of both electronic records and paper printouts. Welcome to death by compliance: transcription error checking by the reviewer, which is also an error-prone process. If you are really unlucky, the second person review takes longer than the analysis (10)!

Simpler, Compliant, and Faster Working

Guess what? Instead of the mess on the left-hand side of Figure 1, many calculations you have spent time incorporating into a spreadsheet can be done by the instrument data system—except few people bother to read the manual. You can avoid the need to print and manually enter data into a spreadsheet followed by the consequential transcription error checks. Take the time to specify and validate the calculations, and you can save much time and effort in performing the analysis and the subsequent second person review. Look at the right-hand side of Figure 1 and compare with the left-hand side and immediately you’ll see my point. You only have the sample preparation records, the manual input of data into the data system, and the electronic records in the data system. Add electronic signatures and your process is sped up immeasurably, and you only have a single location to check records. Why would you want to do anything differently?

More Detail to Kill Spreadsheets

As mentioned earlier, let us discuss two of the main problems with spreadsheets in more detail, which are hybrid systems and single accounts and passwords.

A Hybrid System

A spreadsheet macro or template is a hybrid system—an electronic record of the saved and completed file with a hand-signed paper printout. Poor design means that data are typically input manually, and there is a large transcription error checking burden placed on both the analyst and the second person reviewer. One typical omission in laboratories I have audited is the lack of record signature linking between the paper printout and the electronic record that generated it. It is not an option to state that the paper is the GXP record; the spreadsheet file is a key component of the record set of any analysis. Please read question 12 of the FDA data integrity guidance to understand what is required:

12. When does electronic data become a CGMP record?

When generated to satisfy a CGMP requirement, all data become a CGMP record. You must document, or save, the data at the time of performance to create a record in compliance with CGMP requirements, including, but not limited to, §§ 211.100(b) and 211.160(a) (11).

This is very easy to understand and follow: Save the spreadsheet file or book an appointment with Scylla.

Spreadsheet Single Account and Password

One of the issues with spreadsheet security is that there is only a single account and password for a template. Once the developer has locked the spreadsheet (this can be individual worksheets as well as the whole template) with one or more passwords, what are you going to do? This is one case when you will write the password down and follow the steps below:

- The developer needs to write the spreadsheet name and version along with the passwords, and place the paper into an opaque envelope, write the spreadsheet and version name on the front, seal the envelope, then sign and date over the seal. This is to indicate if the envelope has been opened.

- The envelope should be placed in a secure location, typically a fireproof safe, until the spreadsheet needs to be updated.

- The envelope is unsealed, the spreadsheet maintained and revalidated, and the new passwords start the process over again.

- The whole process is governed by an SOP.

If you find this a bit of a pain, then get rid of the spreadsheet.

OK, There Are Some Exceptions

Having spent all this time saying that you should eliminate spreadsheets, I must acknowledge that there are some exceptions to this rule. There are situations where calculations across analyses cannot be performed by instrument data systems and, in the absence of a LIMS, a spreadsheet may be the only option for calculating results. However, do you want to input data manually, and what about tracking changes to data? Ideally, a validated spreadsheet should be used within an electronic lab oratory notebook (ELN) or equivalent environment. Here, data should be imported and loaded electronically with a validated process to eliminate transcription error checking, and any changes are monitored by the audit trail of the informatics application. Calculated results should be transferred electronically to the next part of the process for speed and to eliminate transcription error checking.

Summary

When it comes to persuading laboratories to use alternative ways of calculating other than spreadsheets, I feel like King Cnut, an 11th Century King of England and Denmark, who commanded the sea to retreat. This was an obvious exercise in abject failure. However, never mind regulatory risk. Look at your business processes and see what they look like; it may not be a pretty sight. Incorporate calculations into instrument data systems or use informatics applications such as ELN or LIMS to move away from spreadsheets into an electronic way of working. Slaughter spreadsheets, please. You know it makes sense.

References

- US Food and Drug Administration, Warning Letter Tismore Health and Wellness Pty Limited (FDA, Silver Spring, Maryland, 2019)

- Code of Federal Regulations (CFR), Part 211 (FDA, Silver Spring, Maryland, 2008).

- F. Hermans and E. Murphy-Hill, “Enron’s Spreadsheets and Related Emails: A Dataset and Analysis,” paper presented at the IEEE/ACM 37th IEEE International Conference on Software Engineering, Florence, Italy, 2015.

- R. Panko, Spreadsheet and Human Error, www.panko.com (accessed August 2020).

- ISPE, Good Automated Manufacturing Practice (GAMP) Guide, version 5 (International Society for Pharmaceutical Engineering, Tampa, Florida, 2008).

- R.D. McDowall, LCGC N. Am. 38(2), 82–88 (2020).

- WHO Technical Report Series No.996 Annex 5 Guidance on Good Data and Records Management Practices (World Health Organisation: Geneva, Switzerland, 2016).

- Code of Federal Regulations (CFR), Part 21.11 (Food and Drug Administration, Washington, DC, 1997).

- European Commission Health and Consumers Directorate-General, EudraLex: The Rules Governing Medicinal Porudtcs in the European Union. Volume 4 , Good Manufacturing Practice (GMP) Guidelines, Annex 11: Computerised Systems (Brussels, Belgium, 2011).

- M.E. Newton and R.D. McDowall, LCGC N. Am. 36(8), 527–529 (2018).

- US Food and Drug Administration, Guidance for Industry Data Integrity and Compliance With Drug CGMP Questions and Answers (FDA, Silver Spring, Maryland, 2018).

R.D. McDowall is the director of R.D. McDowall Limited and the editor of the “Questions of Quality” column for LCGC Europe, Spectroscopy’s sister magazine. Direct correspondence to: SpectroscopyEdit@MMHGroup.com